Chapter 21 Unsupervised Learning

21.1 k-means clustering

21.1.1 Types of machine learning

Unsupervised learning

Finding structure in unlabeled data.Clustering

- Finding homogeneous subgroups within larger group.

Dimensionality reduction

Finding patterns in the features of the data.

Visualization of high dimensional data.

Pre-processing before supervised learning.

Supervised learning

Making predictions based on labeled data.Regression: continous DV

Classification: categorical DV

Reinforcement learning

21.1.2 Introduction to k-means

k-means in R

kmeans(x, centers = num, nstart = num)

x: datacenters: the number of predetermined groups or clustersnstart: run algorithm multiple times to improve odds of the best model.(kmeans algorithm has a random component. A single run of kmeans may not find the optimal solution to kmeans.)

21.1.2.1 k-means clustering

library(tidyverse)

# str(x): num [1:300, 1:2]

x <- read_tsv("data/x_k_means.txt", col_select = -1) %>% as.matrix()

head(x)## [,1] [,2]

## [1,] 3.37 2.00

## [2,] 1.44 2.76

## [3,] 2.36 2.04

## [4,] 2.63 2.74

## [5,] 2.40 1.85

## [6,] 1.89 1.94Plot to see possible centers.

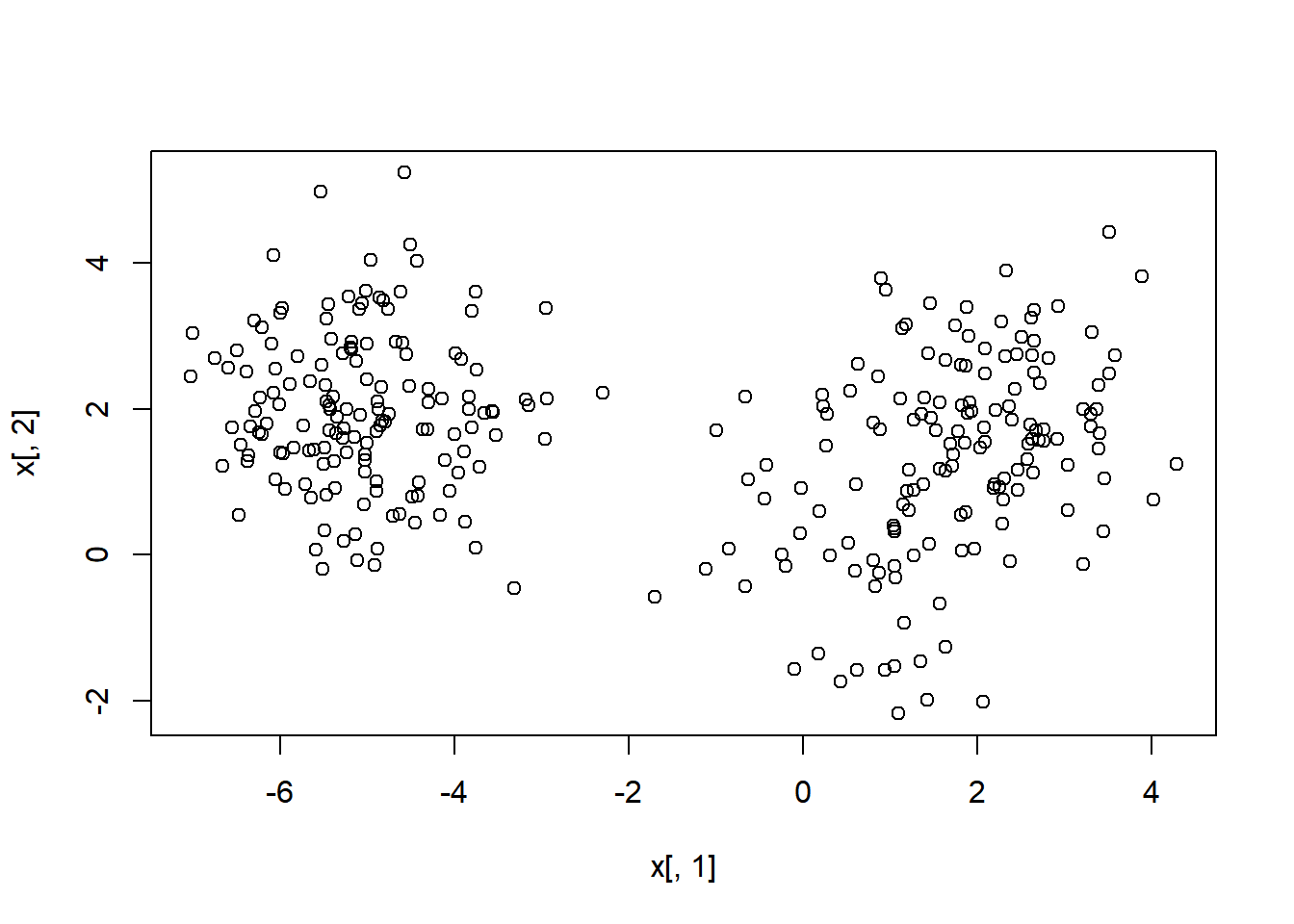

plot(x[,1], x[,2])

# Create the k-means model: km.out

km.out <- kmeans(x, centers = 3, nstart = 20)

# Inspect the result

summary(km.out)## Length Class Mode

## cluster 300 -none- numeric

## centers 6 -none- numeric

## totss 1 -none- numeric

## withinss 3 -none- numeric

## tot.withinss 1 -none- numeric

## betweenss 1 -none- numeric

## size 3 -none- numeric

## iter 1 -none- numeric

## ifault 1 -none- numericAccess the cluster component directly. This is useful anytime you need the cluster membership for each observation of the data used to build the clustering model.

# Print the cluster membership component of the model

km.out$cluster## [1] 1 1 1 1 1 1 1 1 1 1 1 1 3 1 1 1 1 3 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3

## [38] 3 3 1 1 1 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3

## [75] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2

## [112] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## [149] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## [186] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## [223] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 3 3 3 3 1 3 3 3 3

## [260] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 1 1 3 3 1 3 3 3 3 3 3 1 3 3 3 3 3 3 1 3 3

## [297] 3 1 3 3Human friendly output of basic modeling results.

# Print the km.out object

km.out## K-means clustering with 3 clusters of sizes 98, 150, 52

##

## Cluster means:

## [,1] [,2]

## 1 2.217 2.0511

## 2 -5.056 1.9699

## 3 0.664 -0.0913

##

## Clustering vector:

## [1] 1 1 1 1 1 1 1 1 1 1 1 1 3 1 1 1 1 3 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3

## [38] 3 3 1 1 1 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3

## [75] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2

## [112] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## [149] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## [186] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

## [223] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 3 3 3 3 1 3 3 3 3

## [260] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 1 1 3 3 1 3 3 3 3 3 3 1 3 3 3 3 3 3 1 3 3

## [297] 3 1 3 3

##

## Within cluster sum of squares by cluster:

## [1] 148.6 295.2 95.5

## (between_SS / total_SS = 87.2 %)

##

## Available components:

##

## [1] "cluster" "centers" "totss" "withinss" "tot.withinss"

## [6] "betweenss" "size" "iter" "ifault"21.1.2.2 Visualizing and interpreting

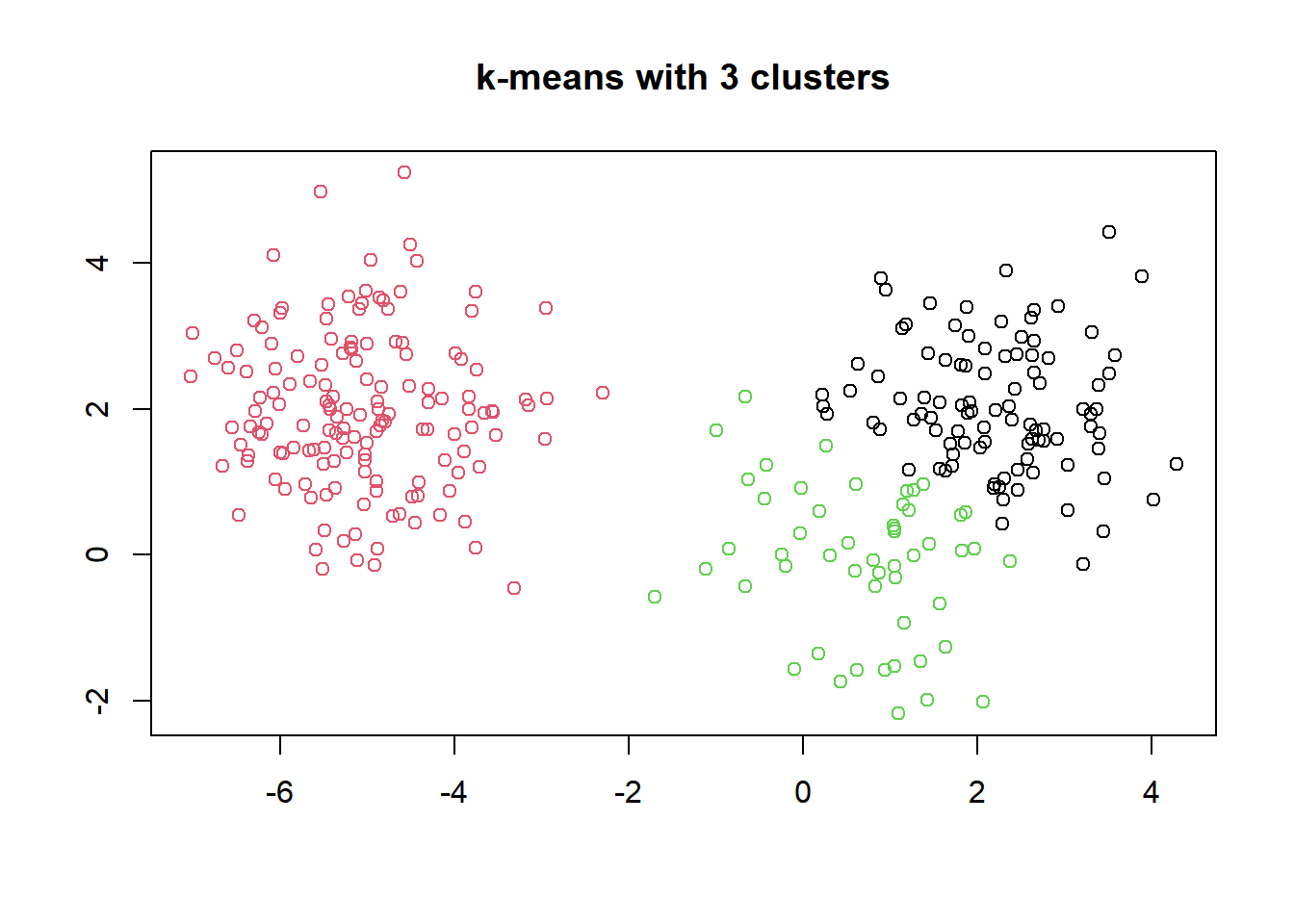

One of the more intuitive ways to interpret the results of k-means models is by plotting the data as a scatter plot and using color to label the samples’ cluster membership (col = km.out$cluster).

# Scatter plot of x

plot(x, col = km.out$cluster,

main = "k-means with 3 clusters",

xlab = "",

ylab = "")

21.1.3 Model selection

Best outcome is based on total within cluster sum of squares:

For each cluster

For each observation in the cluster

- Determine squared distance from observation to cluster center

Sum all of them together

Running algorithm multiple times helps find the global minimum total within cluster sum of squares.

21.1.3.1 Handling random algorithms

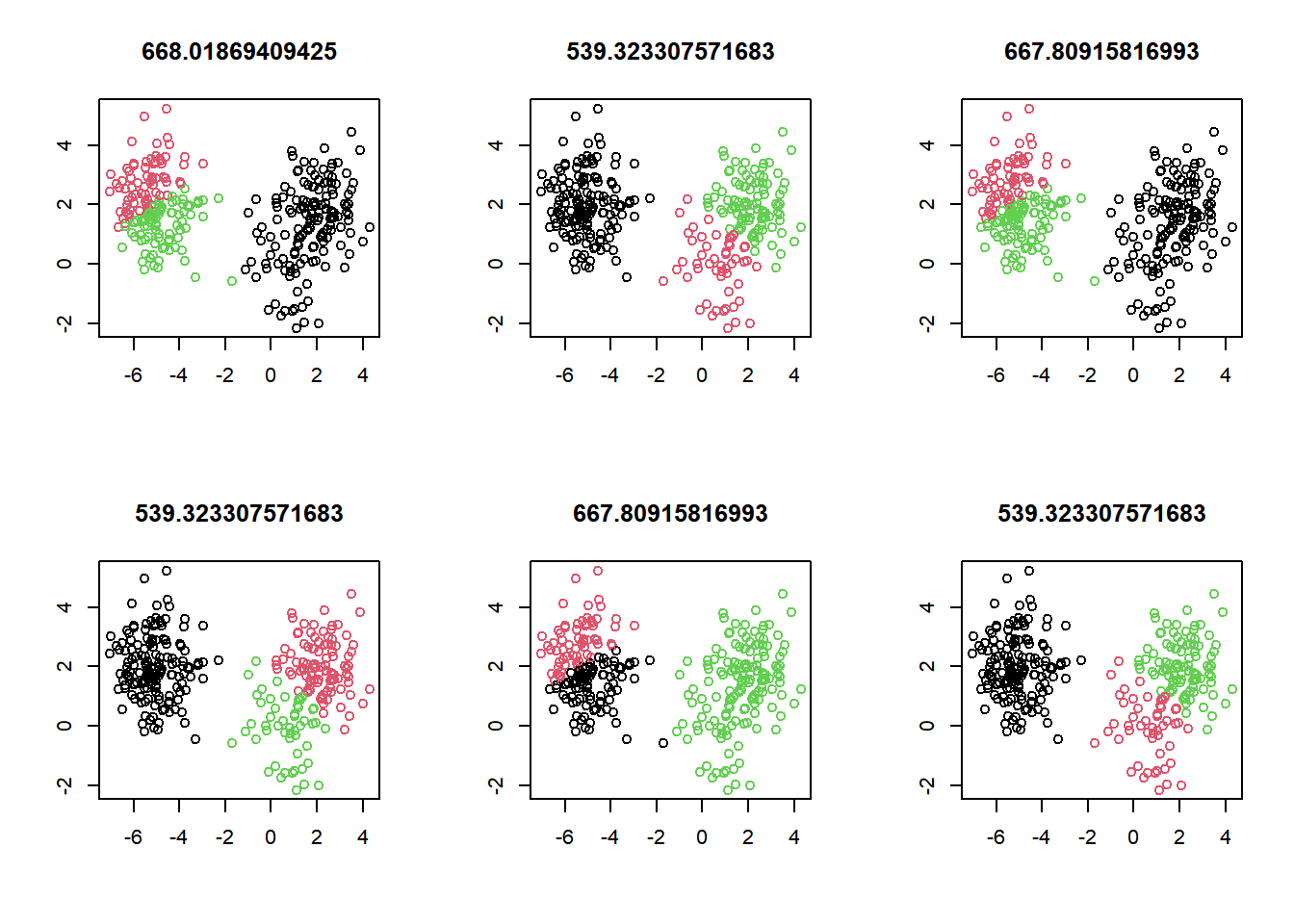

This random initialization can result in assigning observations to different cluster labels. Also, the random initialization can result in finding different local minima for the k-means algorithm.

At the top of each plot, the measure of model quality—total within cluster sum of squares error—will be plotted. Look for the model(s) with the lowest error to find models with the better model results.

Your task is to generate six kmeans() models on the data, plotting the results of each, in order to see the impact of random initializations on model results.

# Set up 2 x 3 plotting grid

par(mfrow = c(2, 3))

# Set seed

set.seed(1)

for(i in 1:6) {

# Run kmeans() on x with three clusters and one start

km.out <- kmeans(x, centers = 3, nstart = 1)

# Plot clusters

plot(x, col = km.out$cluster,

main = km.out$tot.withinss, # total within cluster sum of squares error

xlab = "", ylab = "")

}

Because of the random initialization of the k-means algorithm, there’s quite some variation in cluster assignments among the six models.

21.1.3.2 Selecting number of clusters

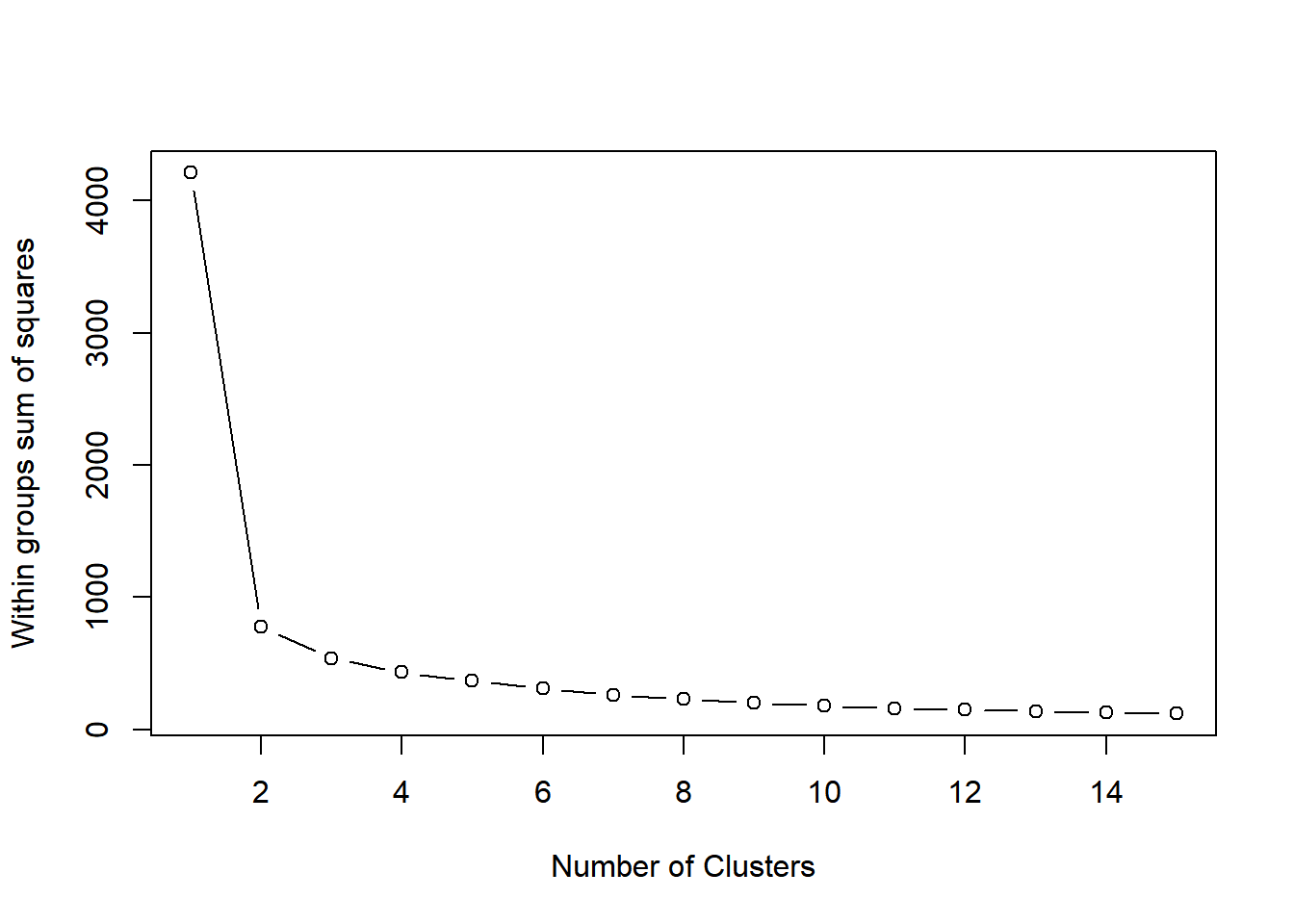

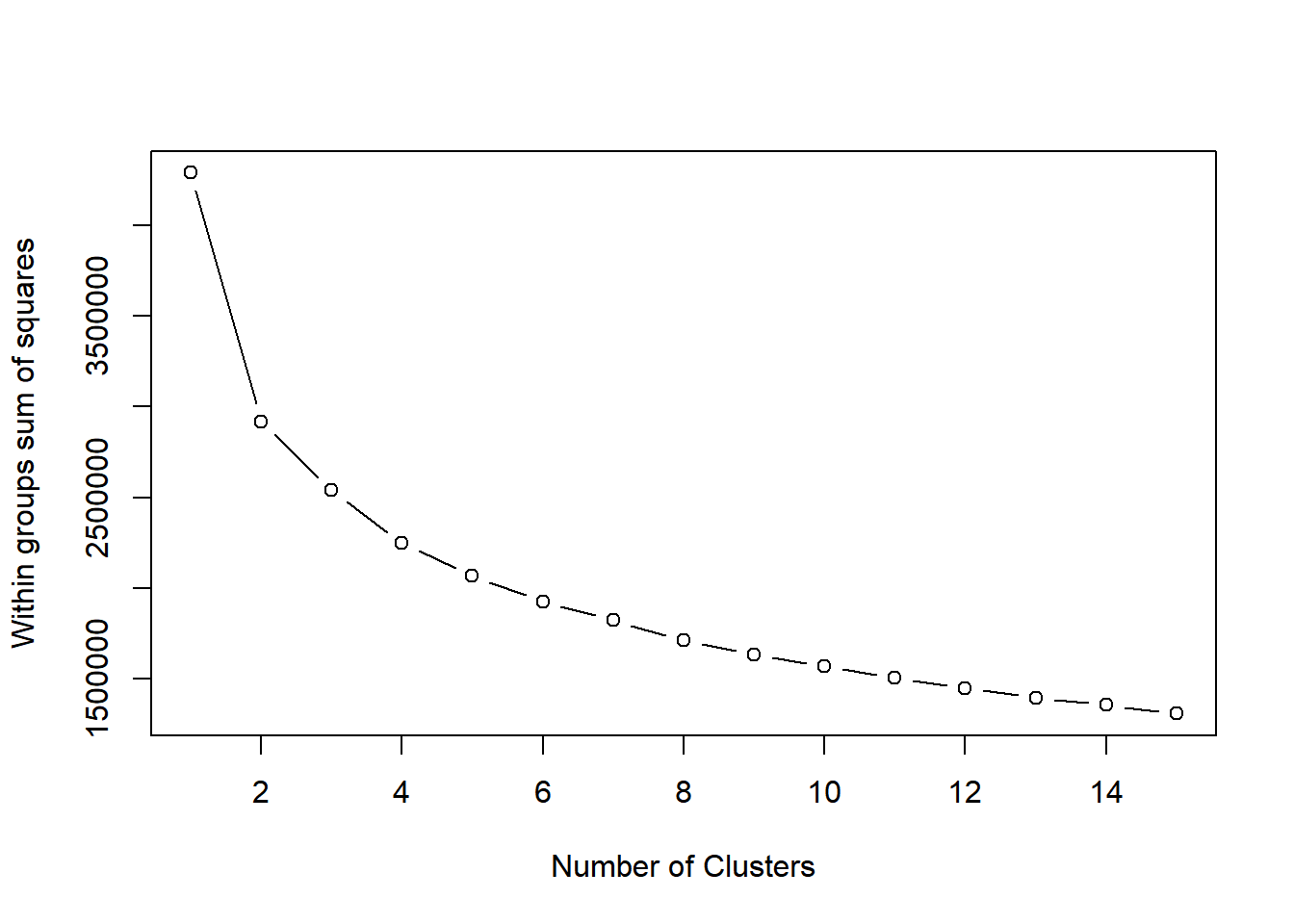

If you do not know the number of clusters and need to determine it, you will need to run the algorithm multiple times, each time with a different number of clusters. From this, you can observe how a measure of model quality changes with the number of clusters.

Plots displaying this information help to determine the number of clusters and are often referred to as scree plots. The elbow indicates the number of clusters inherent in the data.

# Initialize total within sum of squares error: wss

wss <- 0

# For 1 to 15 cluster centers

for (i in 1:15) {

km.out <- kmeans(x, centers = i, nstart = 20)

# Save total within sum of squares to wss variable

wss[i] <- km.out$tot.withinss

}

wss## [1] 4211 777 539 432 369 309 263 230 202 180 161 150 139 129 121# Plot total within sum of squares vs. number of clusters

plot(1:15, wss, type = "b",

xlab = "Number of Clusters",

ylab = "Within groups sum of squares")

# Set k equal to the number of clusters corresponding to the elbow location

k <- 221.1.4 Pokemon data

Data challenges

Selecting the variables to cluster upon

Scaling the data

Determining the number of clusters

Often no clean “elbow” in scree plot

This will be a core part of the exercises

Visualize the results for interpretation

21.1.4.1 Real world data

The first challenge with the Pokemon data is that there is no pre-determined number of clusters. You will determine the appropriate number of clusters, keeping in mind that in real data the elbow in the scree plot might be less of a sharp elbow. Use your judgment on making the determination of the number of clusters.

# convert df to matrix, dim: 800*6

pokemon <- read_csv("data/Pokemon.csv") %>%

select(6:11) %>%

as.matrix()

head(pokemon)## HitPoints Attack Defense SpecialAttack SpecialDefense Speed

## [1,] 45 49 49 65 65 45

## [2,] 60 62 63 80 80 60

## [3,] 80 82 83 100 100 80

## [4,] 80 100 123 122 120 80

## [5,] 39 52 43 60 50 65

## [6,] 58 64 58 80 65 80Find appropriate number of clusters.

# Initialize total within sum of squares error: wss

wss <- 0

# Look over 1 to 15 possible clusters

for (i in 1:15) {

# Fit the model: km.out

km.out <- kmeans(pokemon, centers = i, nstart = 20, iter.max = 50)

# Save the within cluster sum of squares

wss[i] <- km.out$tot.withinss

}

# Produce a scree plot

plot(1:15, wss, type = "b",

xlab = "Number of Clusters",

ylab = "Within groups sum of squares")

# Select number of clusters

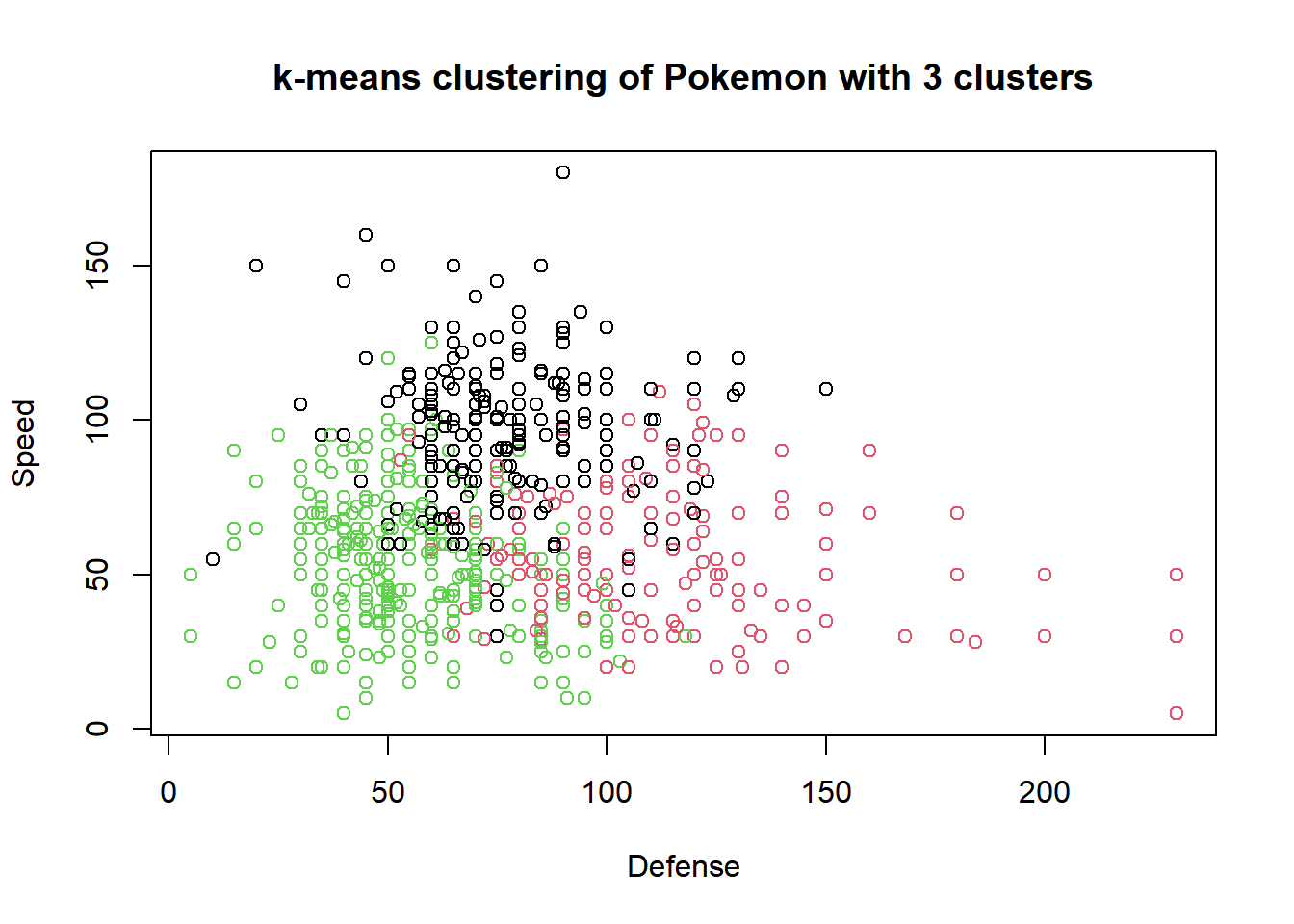

k <- 3

# Build model with k clusters: km.out

km.out <- kmeans(pokemon, centers = k, nstart = 20, iter.max = 50)

# View the resulting model

km.out## K-means clustering with 3 clusters of sizes 270, 175, 355

##

## Cluster means:

## HitPoints Attack Defense SpecialAttack SpecialDefense Speed

## 1 81.9 96.2 77.7 104.1 86.9 94.7

## 2 79.3 97.3 108.9 66.7 87.0 57.3

## 3 54.7 56.9 53.6 52.0 53.0 53.6

##

## Clustering vector:

## [1] 3 3 1 1 3 3 1 1 1 3 3 2 1 3 3 3 3 3 3 1 3 3 1 1 3 3 3 1 3 1 3 1 3 2 3 3 2

## [38] 3 3 1 3 1 3 1 3 3 3 1 3 3 1 3 2 3 1 3 3 3 1 3 1 3 1 3 1 3 3 2 3 1 1 1 3 2

## [75] 2 3 3 1 3 1 3 2 2 3 1 3 2 2 3 1 3 3 1 3 2 3 2 3 2 3 1 1 1 2 3 2 3 2 3 1 3

## [112] 1 3 2 2 2 3 3 2 3 2 3 2 2 2 3 1 3 2 3 1 1 1 1 1 1 2 2 2 3 2 2 2 3 3 1 1 1

## [149] 3 3 2 3 2 1 1 2 1 1 1 3 3 1 1 1 1 1 3 3 2 3 3 1 3 3 2 3 3 3 1 3 3 3 3 1 3

## [186] 1 3 3 3 3 3 3 1 3 3 1 1 2 3 3 2 1 3 3 1 3 3 3 3 3 2 1 2 3 2 1 3 3 1 3 2 3

## [223] 2 2 2 3 2 3 2 2 2 2 2 3 3 2 3 2 3 2 3 3 1 3 1 2 3 1 1 1 3 2 1 1 3 3 2 3 3

## [260] 3 2 1 1 1 2 3 3 2 2 1 1 1 3 3 1 1 3 3 1 1 3 3 2 2 3 3 3 3 3 3 3 3 3 3 3 1

## [297] 3 3 1 3 3 3 2 3 3 1 1 3 3 3 2 3 3 1 3 1 3 3 3 1 3 2 3 2 3 3 3 2 3 2 3 2 2

## [334] 2 3 3 1 3 1 1 3 3 3 3 3 3 2 3 1 1 3 1 3 1 2 2 3 1 3 3 3 1 3 1 3 2 1 1 1 1

## [371] 2 3 2 3 2 3 2 3 2 3 2 3 1 3 2 3 1 1 3 2 2 3 1 1 3 3 1 1 3 3 1 3 2 2 2 3 3

## [408] 2 1 1 3 2 2 1 2 2 2 1 1 1 1 1 1 2 1 1 1 1 1 1 2 1 3 2 2 3 3 1 3 3 1 3 3 1

## [445] 3 3 3 3 3 3 1 3 1 3 2 3 2 3 2 2 2 1 3 2 3 3 1 3 1 3 2 1 3 1 3 1 1 1 1 3 1

## [482] 3 3 1 3 2 3 3 3 3 2 3 3 1 1 3 3 1 1 3 2 3 2 3 1 2 3 1 3 3 1 2 1 1 2 2 2 1

## [519] 1 1 1 2 1 2 1 1 1 1 2 2 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 3

## [556] 3 1 3 3 1 3 3 1 3 3 3 3 2 3 1 3 1 3 1 3 1 3 2 3 3 1 3 1 3 2 2 3 1 3 1 2 2

## [593] 3 2 2 3 3 1 2 2 3 3 1 3 3 1 3 1 3 1 1 3 3 1 3 2 1 1 3 2 3 2 1 3 2 3 2 3 1

## [630] 3 2 3 1 3 1 3 3 2 3 3 1 3 1 3 3 1 3 1 1 3 2 3 2 3 1 2 3 1 3 2 3 2 2 3 3 1

## [667] 3 1 3 3 1 3 2 2 3 2 1 3 1 2 3 1 2 3 2 3 2 2 3 2 3 2 1 2 3 3 1 3 1 1 1 1 1

## [704] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3 2 2 3 3 1 3 3 1 3 3 3 3 1 3 3 3 3 1 3 3 1

## [741] 3 1 3 2 1 3 1 1 3 2 1 2 3 2 3 1 3 2 3 2 3 2 3 1 3 1 3 2 3 1 1 1 1 2 3 1 1

## [778] 2 3 2 3 3 3 3 2 2 2 2 3 2 3 1 1 1 2 2 1 1 1 1

##

## Within cluster sum of squares by cluster:

## [1] 1018348 709021 812080

## (between_SS / total_SS = 40.8 %)

##

## Available components:

##

## [1] "cluster" "centers" "totss" "withinss" "tot.withinss"

## [6] "betweenss" "size" "iter" "ifault"# Plot of Defense vs. Speed by cluster membership

plot(pokemon[, c("Defense", "Speed")],

col = km.out$cluster,

main = paste("k-means clustering of Pokemon with", k, "clusters"),

xlab = "Defense", ylab = "Speed")

21.2 Hierarchical clustering

21.2.1 Introduction

Hierarchical clustering

Number of clusters is not known ahead of time

Two kinds:

bottom-up: starts by assigning each point to its own cluster. Then find the closest two clusters and to join them together into a single cluster. This process continues iteratively. Until there is only one cluster.

top-down

Hierarchical clustering in R

# Calculates similarity as Euclidean distance

# between observations

dist_matrix <- dist(x)

# Returns hierarchical clustering model

hclust(d = dist_matrix)21.2.1.1 Hierarchical clustering results

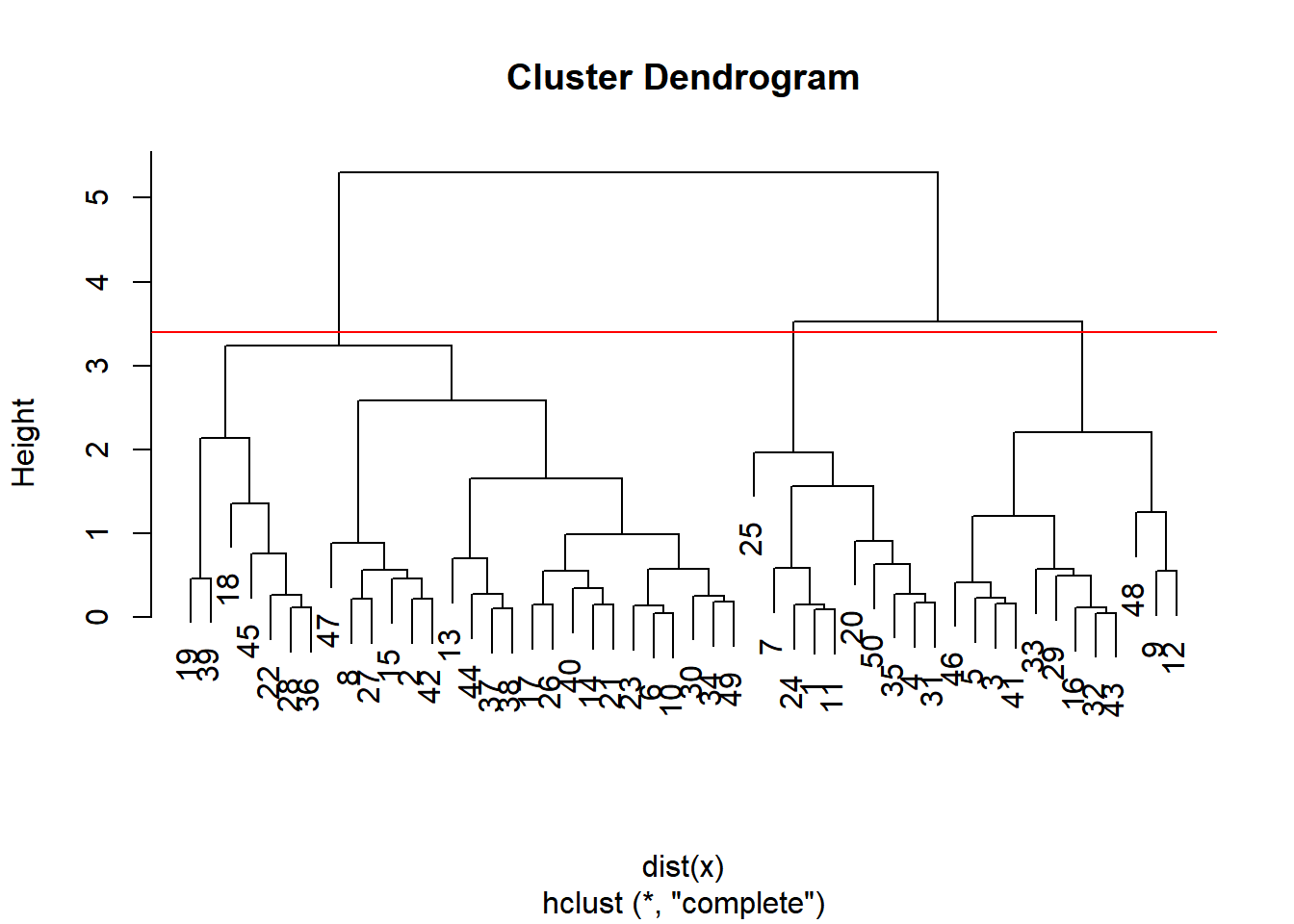

In this exercise, you will create your first hierarchical clustering model using the hclust() function. Your task is to create a hierarchical clustering model of x.

Remember the first step to hierarchical clustering is determining the similarity between observations, which you will do with the dist() function.

# str: num [1:50, 1:2]

x <- x[1:50, ]

head(x)## [,1] [,2]

## [1,] 3.37 2.00

## [2,] 1.44 2.76

## [3,] 2.36 2.04

## [4,] 2.63 2.74

## [5,] 2.40 1.85

## [6,] 1.89 1.94# Create hierarchical clustering model: hclust.out

hclust.out <- hclust(d = dist(x))

# Inspect the result

summary(hclust.out)## Length Class Mode

## merge 98 -none- numeric

## height 49 -none- numeric

## order 50 -none- numeric

## labels 0 -none- NULL

## method 1 -none- character

## call 2 -none- call

## dist.method 1 -none- character21.2.2 Selecting number of clusters

Dendrogram

Tree shaped structure used to interpret hierarchical clustering models.

The distance between the clusters is represented as the height of the horizontal line on the dendrogram.

Dendrogram plotting in R:

# Draws a dendrogram plot(hclust.out) # determine the number of clusters # drawing a cut line at a particular 'height' or 'distance' abline(h = 6, col = "red") # Tree "cutting" # Cut by height h cutree(hclust.out, h = 6) # Cut by number of clusters k cutree(hclust.out, k = 2)- Specifying height of the line,

h, is the equivalent of specifying that you want clusters that are no further apart than that height.

- Specifying height of the line,

21.2.2.1 Cutting the tree

cutree() is the R function that cuts a hierarchical model. The h and k arguments to cutree() allow you to cut the tree based on a certain height h or a certain number of clusters k.

# draw dendrogram

plot(hclust.out)

# cut 3 cluster

abline(h = 3.4, col = "red")

# Cut by height

cutree(hclust.out, h = 3.4)## [1] 1 2 3 1 3 2 1 2 3 2 1 3 2 2 2 3 2 2 2 1 2 2 2 1 1 2 2 2 3 2 1 3 3 2 1 2 2 2

## [39] 2 2 3 2 3 2 2 3 2 3 2 1# Cut by number of clusters

cutree(hclust.out, k = 3)## [1] 1 2 3 1 3 2 1 2 3 2 1 3 2 2 2 3 2 2 2 1 2 2 2 1 1 2 2 2 3 2 1 3 3 2 1 2 2 2

## [39] 2 2 3 2 3 2 2 3 2 3 2 1The output of each cutree() call represents the cluster assignments for each observation in the original dataset.

21.2.3 Clustering linkage

Linking clusters in hierarchical clustering

How is distance between clusters determined? Rules?

Four methods to determine which cluster should be linked:

Complete: pairwise similarity between all observations in cluster 1 and cluster 2, and uses largest of similarities

hclust(d, method = "complete")

Single: same as above but uses smallest of similarities

hclust(d, method = "single")

Average: same as above but uses average of similarities

hclust(d, method = "average")

Centroid: finds centroid of cluster 1 and centroid of cluster 2, and uses similarity between two centroids

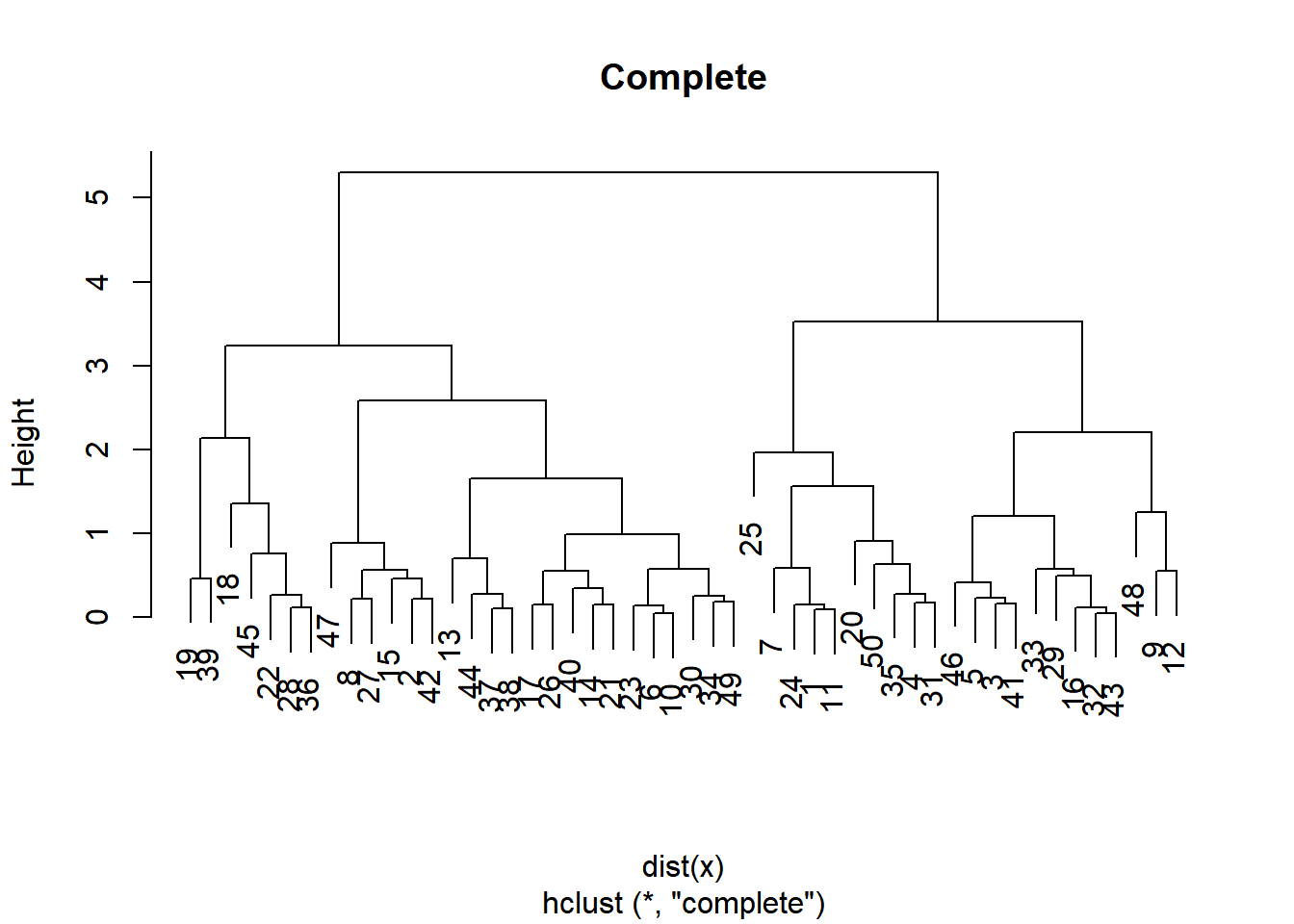

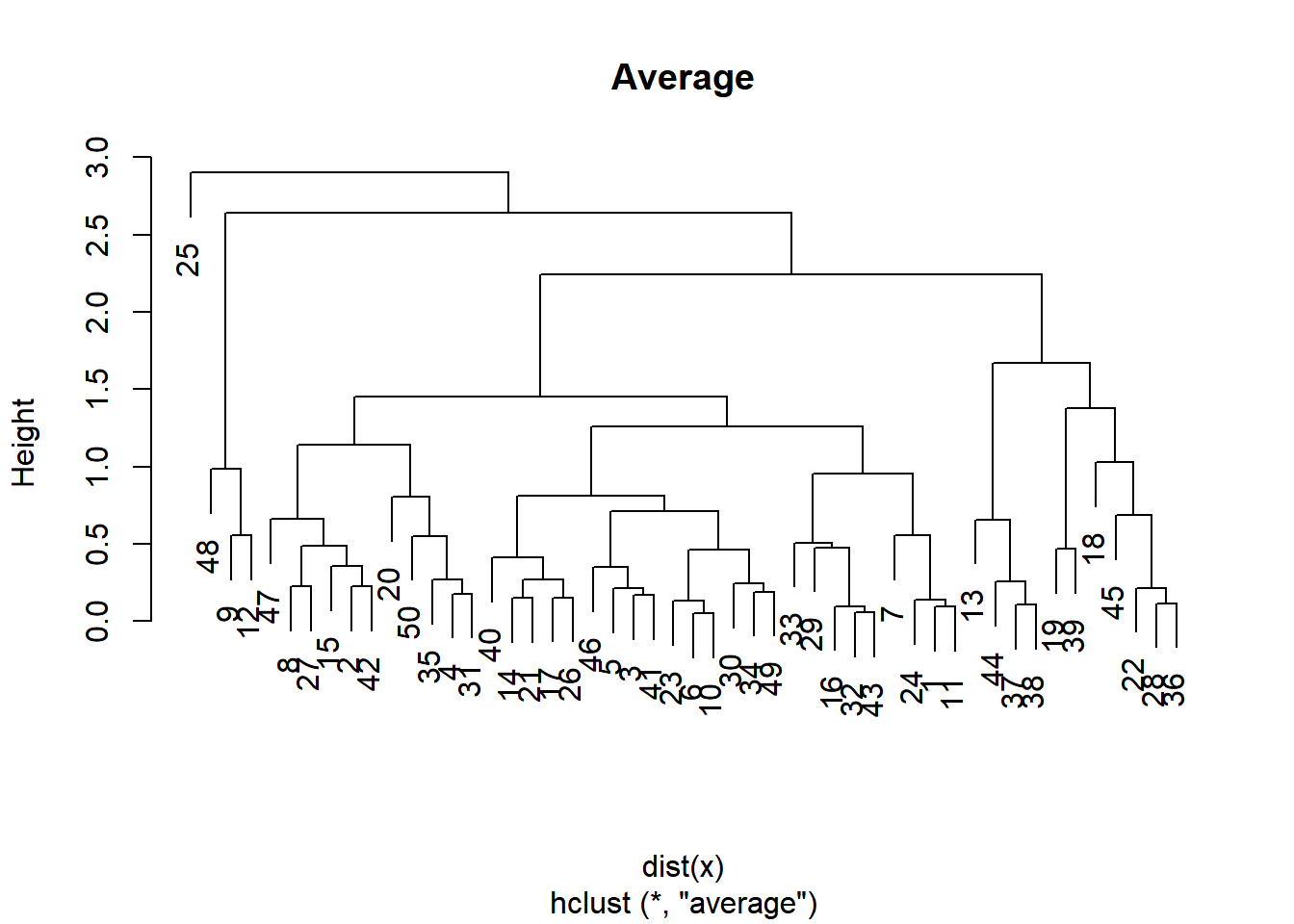

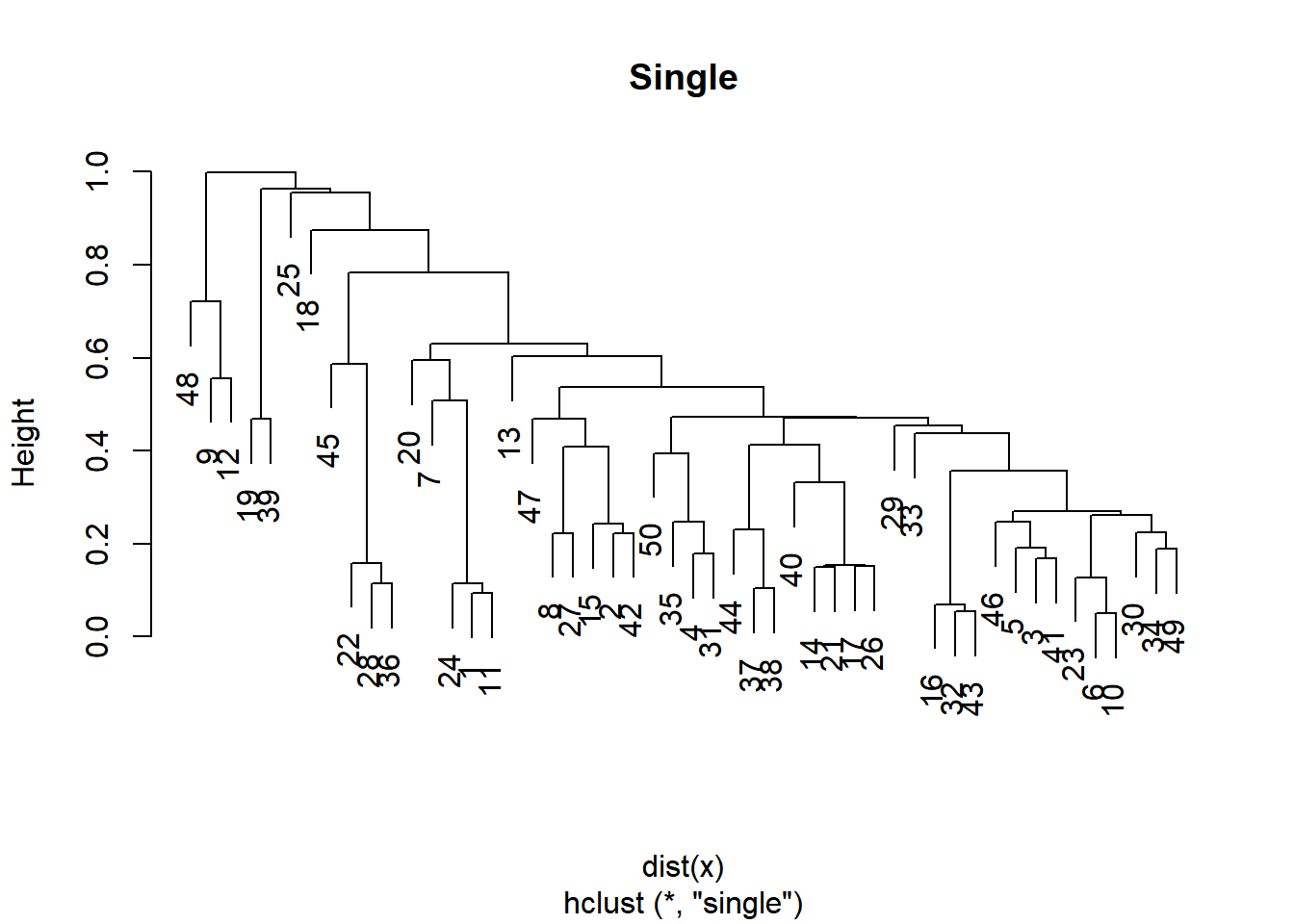

21.2.3.1 Linkage methods

You will produce hierarchical clustering models using different linkages and plot the dendrogram for each, observing the overall structure of the trees.

# Cluster using complete linkage: hclust.complete

hclust.complete <- hclust(dist(x), method = "complete")

# Cluster using average linkage: hclust.average

hclust.average <- hclust(dist(x), method = "average")

# Cluster using single linkage: hclust.single

hclust.single <- hclust(dist(x), method = "single")

# Plot dendrogram of hclust.complete

plot(hclust.complete, main = "Complete")

# Plot dendrogram of hclust.average

plot(hclust.average, main = "Average")

# Plot dendrogram of hclust.single

plot(hclust.single, main = "Single")

Whether you want balanced or unbalanced trees for your hierarchical clustering model depends on the context of the problem you’re trying to solve.

Balanced trees (complete, average) are essential if you want an even number of observations assigned to each cluster.

On the other hand, if you want to detect outliers, for example, an unbalanced tree is more desirable because pruning an unbalanced tree can result in most observations assigned to one cluster and only a few observations assigned to other clusters.

21.2.3.2 Scaling

Clustering real data may require scaling the features if they have different distributions.

- Normalized features: mean = 0, sd = 1

You will observe the pokemon dataset, its distribution (mean and standard deviation) of each feature, scale the data accordingly, then produce a hierarchical clustering model using the complete linkage method.

# View column means

colMeans(pokemon)## HitPoints Attack Defense SpecialAttack SpecialDefense

## 69.3 79.0 73.8 72.8 71.9

## Speed

## 68.3Since the variables are the columns of your matrix, use apply(X, MARGIN, FUN) to calculate sd. Where, x: array or matrix; MARGIN: for a matrix 1 indicates rows, 2 indicates columns.

# View column standard deviations

apply(pokemon, 2, sd)## HitPoints Attack Defense SpecialAttack SpecialDefense

## 25.5 32.5 31.2 32.7 27.8

## Speed

## 29.1Use scale() to normalized.

# Scale the data

pokemon.scaled <- scale(pokemon)

apply(pokemon.scaled, 2, sd)## HitPoints Attack Defense SpecialAttack SpecialDefense

## 1 1 1 1 1

## Speed

## 1# Create hierarchical clustering model: hclust.pokemon

hclust.pokemon <- hclust(dist(pokemon.scaled), method = "complete")

summary(hclust.pokemon)## Length Class Mode

## merge 1598 -none- numeric

## height 799 -none- numeric

## order 800 -none- numeric

## labels 0 -none- NULL

## method 1 -none- character

## call 3 -none- call

## dist.method 1 -none- character21.2.3.3 Compare kmeans() and hclust()

Comparing k-means and hierarchical clustering, you’ll see the two methods produce different cluster memberships. This is because the two algorithms make different assumptions about how the data is generated.

# Apply cutree() to hclust.pokemon: cut.pokemon

cut.pokemon <- cutree(hclust.pokemon, k = 3)

# Compare methods

table(km.out$cluster, cut.pokemon)## cut.pokemon

## 1 2 3

## 1 267 3 0

## 2 171 3 1

## 3 350 5 0It looks like the hierarchical clustering model assigns most of the observations to cluster 1, while the k-means algorithm distributes the observations relatively evenly among all clusters.

It’s important to note that there’s no consensus on which method produces better clusters. The job of the analyst in unsupervised clustering is to observe the cluster assignments and make a judgment call as to which method provides more insights into the data.

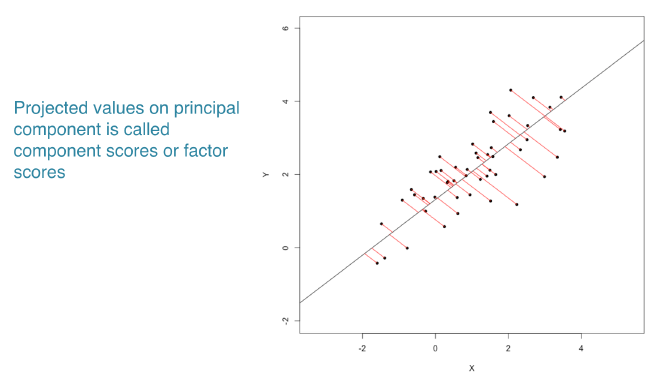

21.3 Dimensionality reduction - PCA

21.3.1 Introduction

Dimensionality reduction

A popular method is principal component analysis (PCA)

Three goals when finding lower dimensional representation of features:

Find linear combination of variables to create principal components

Regression line of x & y represent the principal component

Maintain most variance in the data

Principal components are uncorrelated (i.e., orthogonal to each other)

21.3.1.1 PCA using prcomp()

prcomp(x = data,

scale = FALSE / TRUE,

center = TRUE)Your task is to create a PCA model of the pokemon, which has four dimensions, then to inspect the resulting model.

pokemon <- read_delim("data/pokemon_pca.txt", delim = ",")

rowname <- as.matrix(pokemon)[,1]

pokemon <- data.matrix(pokemon)[,-1]

row.names(pokemon) <- rowname

head(pokemon)## HitPoints Attack Defense Speed

## Stunfisk 109 66 84 32

## Mewtwo 106 110 90 130

## Charmander 39 52 43 65

## Grimer 80 80 50 25

## Roggenrola 55 75 85 15

## Larvesta 55 85 55 60# Perform scaled PCA: pr.out

pr.out <- prcomp(pokemon, scale = TRUE, center = TRUE)

# Inspect model output

summary(pr.out)## Importance of components:

## PC1 PC2 PC3 PC4

## Standard deviation 1.445 0.994 0.845 0.4566

## Proportion of Variance 0.522 0.247 0.179 0.0521

## Cumulative Proportion 0.522 0.769 0.948 1.0000The first two principal components describe around 77% of the variance.

21.3.1.2 Additional results of PCA

PCA models in R produce additional diagnostic and output components:

center: the column means used to center to the data, orFALSEif the data weren’t centeredscale: the column standard deviations used to scale the data, orFALSEif the data weren’t scaledrotation: the directions of the principal component vectors in terms of the original features/variables. This information allows you to define new data in terms of the original principal componentsx: the value of each observation in the original dataset projected to the principal components

You can access these the same as other model components. For example, use pr.out$rotation to access the rotation component.

pr.out$rotation## PC1 PC2 PC3 PC4

## HitPoints -0.487 -0.3198 -0.7256 -0.366

## Attack -0.648 0.0529 0.0276 0.760

## Defense -0.522 -0.2343 0.6829 -0.454

## Speed -0.266 0.9165 -0.0802 -0.288pr.out$center## HitPoints Attack Defense Speed

## 68.4 76.5 73.1 64.2pr.out$scale## HitPoints Attack Defense Speed

## 25.7 28.0 30.1 33.7list(dim = dim(pr.out$x), head = head(pr.out$x))## $dim

## [1] 50 4

##

## $head

## PC1 PC2 PC3 PC4

## Stunfisk -0.461 -1.487 -0.8340 -0.756

## Mewtwo -2.302 1.256 -0.8035 -0.445

## Charmander 1.640 0.576 0.1223 0.198

## Grimer 0.409 -1.025 -0.7545 0.611

## Roggenrola 0.471 -1.268 0.7638 0.390

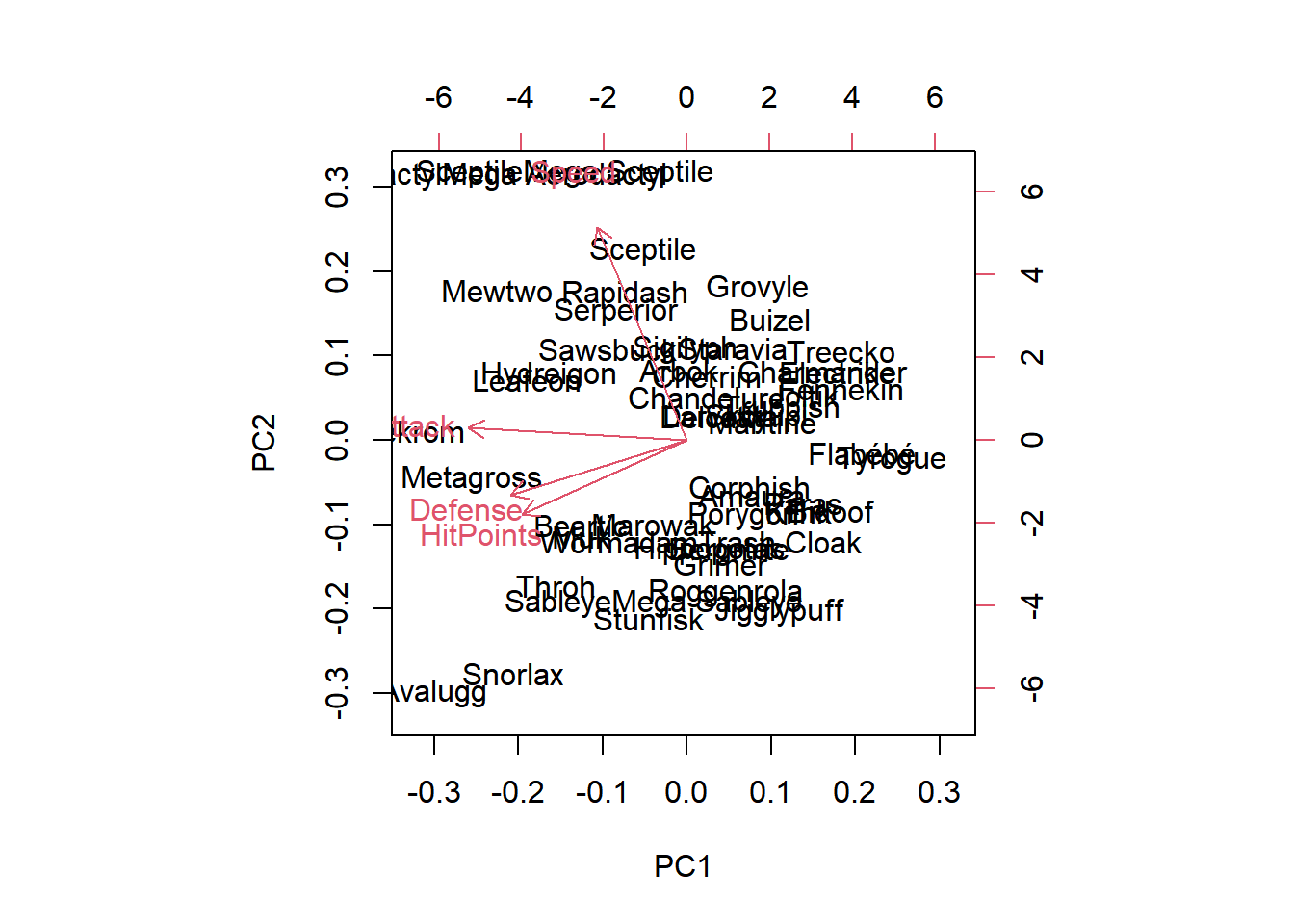

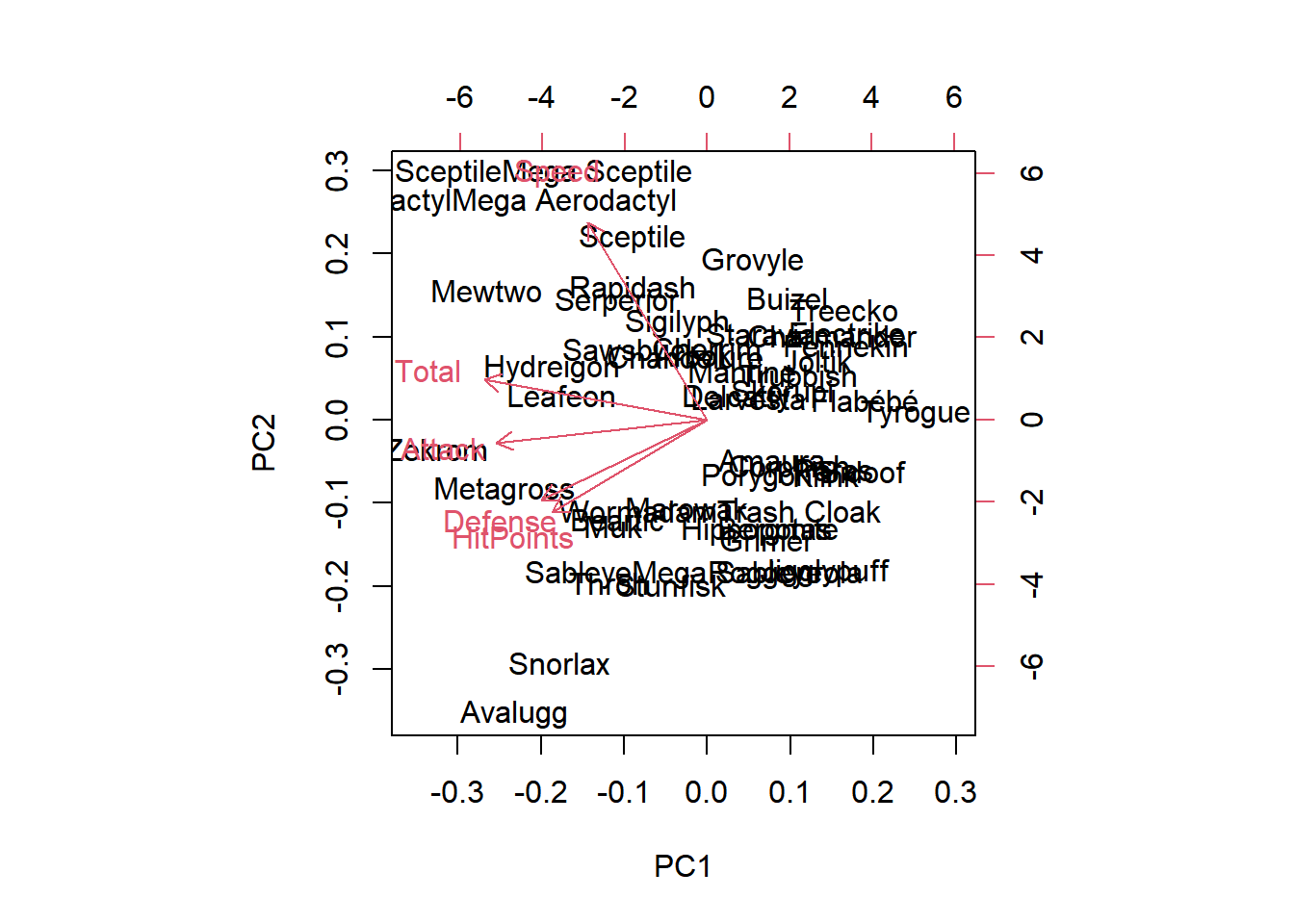

## Larvesta 0.404 0.210 -0.0134 0.72921.3.2 Visualizing and interpreting

- Biplots

biplot(pca_model)

- Scree plots

# Getting proportion of variance for a scree plot

pr.var <- pr.iris$sdev^2

pve <- pr.var / sum(pr.var)

# Plot variance explained for each principal component

plot(pve, xlab = "Principal Component",

ylab = "Proportion of Variance Explained",

ylim = c(0, 1), type = "b")21.3.2.1 Interpreting biplots

HitPoints and Defense have approximately the same loadings in the first two principal components.

Aerodactyl and Avalugg, this two Pokemon are the least similar in terms of the second principal component.

biplot(pr.out)

21.3.2.2 Variance explained

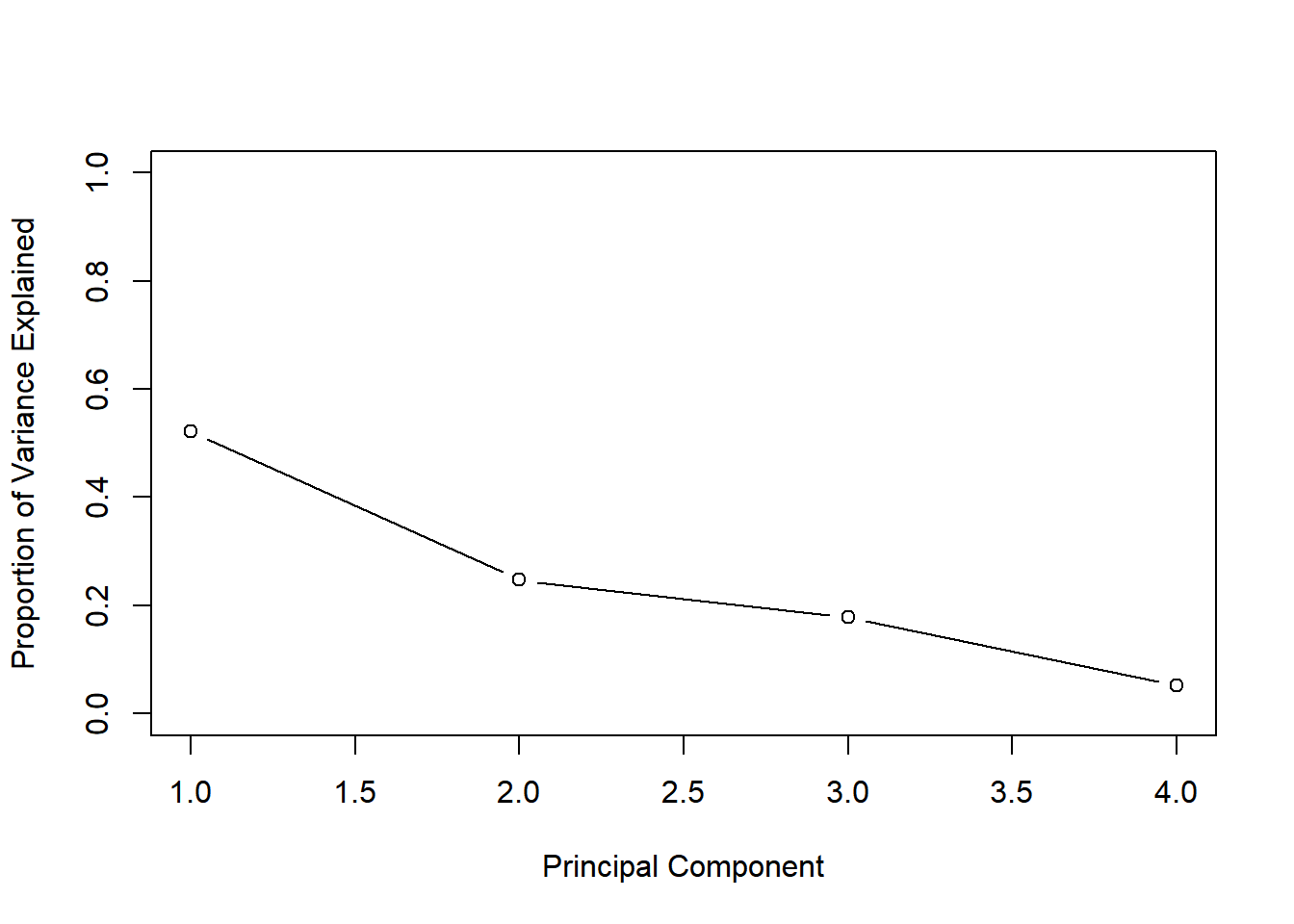

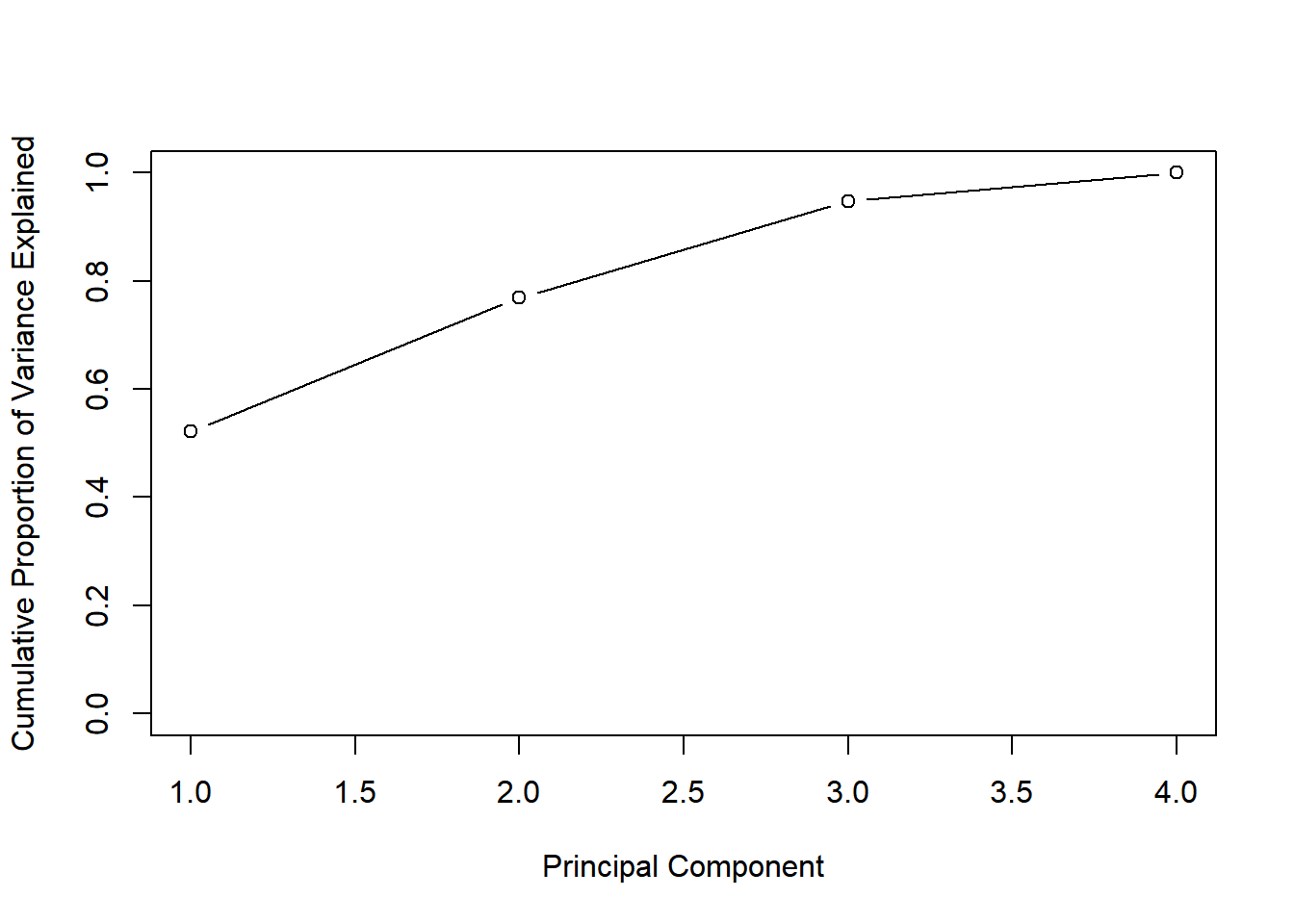

The second common plot type for understanding PCA models is a scree plot. A scree plot shows the variance explained as the number of principal components increases. Sometimes the cumulative variance explained is plotted as well.

Square of the standard deviations of the principal components (i.e., the variance), is available in the sdev component of the PCA model object.

# Variability of each principal component: pr.var

pr.var <- pr.out$sdev^2

pr.var## [1] 2.089 0.988 0.714 0.209The proportion of the variance explained, calculated by dividing pr.var by the total variance explained by all principal components.

# Variance explained by each principal component: pve

pve <- pr.var / sum(pr.var)

pve## [1] 0.5222 0.2471 0.1785 0.052121.3.2.3 Visualize variance explained

Now you will create a scree plot showing the proportion of variance explained by each principal component, as well as the cumulative proportion of variance explained.

One way to determine the number of principal components to retain is by looking for an elbow in the scree plot showing that as the number of principal components increases, the rate at which variance is explained decreases substantially.

# Plot variance explained for each principal component

plot(pve, xlab = "Principal Component",

ylab = "Proportion of Variance Explained",

ylim = c(0, 1), type = "b")

cumsum() for cumulative proportion of variance.

# Plot cumulative proportion of variance explained

plot(cumsum(pve), xlab = "Principal Component",

ylab = "Cumulative Proportion of Variance Explained",

ylim = c(0, 1), type = "b")

Notice that when the number of principal components is equal to the number of original features in the data, the cumulative proportion of variance explained is 1.

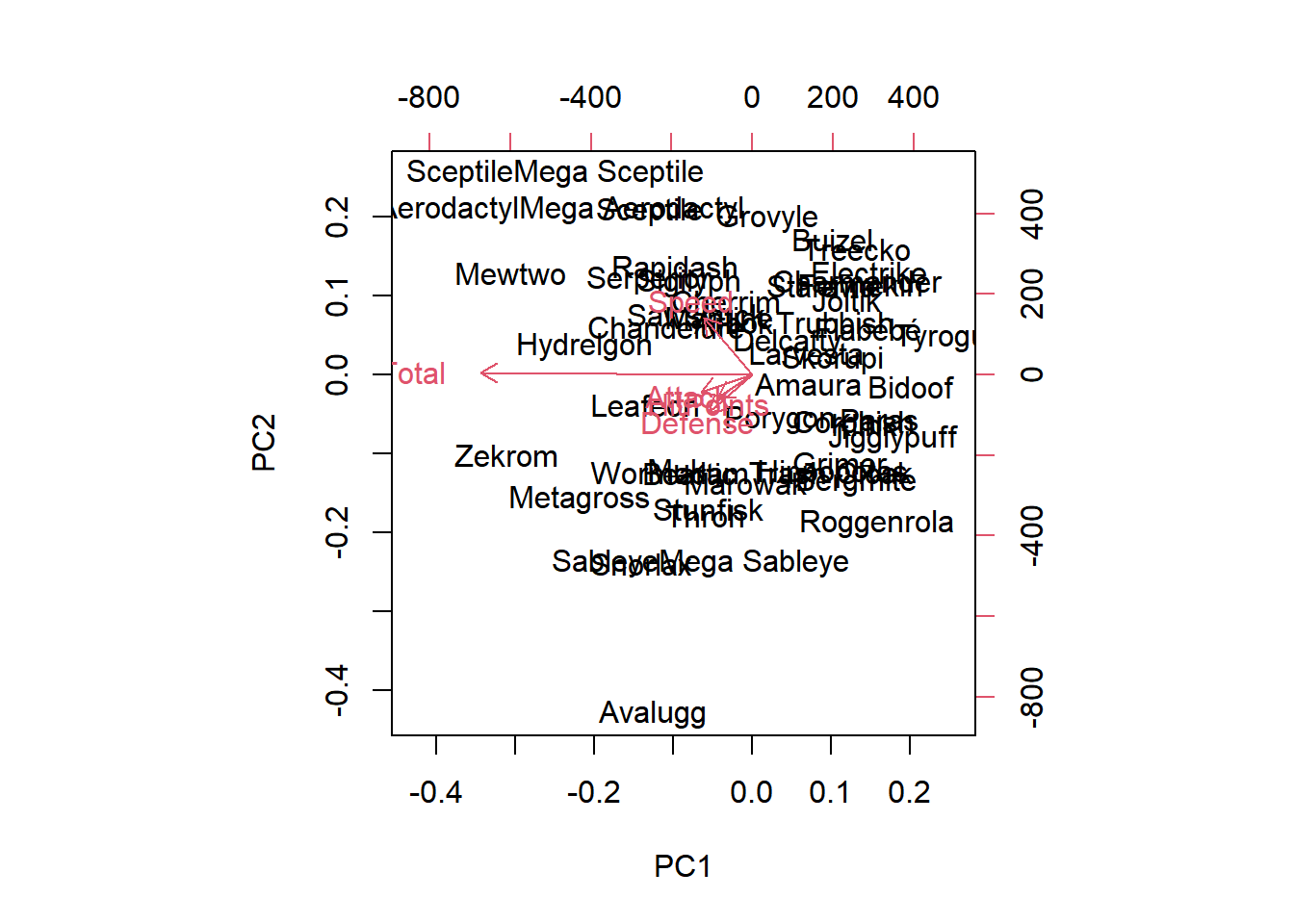

21.3.3 Practical issues

21.3.3.1 Scaling

Scaling your data before doing PCA changes the results of the PCA modeling. Here, you will perform PCA with and without scaling, then visualize the results using biplots.

Sometimes scaling is appropriate when the variances of the variables are substantially different. This is commonly the case when variables have different units of measurement.

# add new variable "Total" for pokemon data

pokemon_total <- read_delim("data/pokemon_add_total.txt")

pokemon_total## # A tibble: 50 × 6

## ...1 Total HitPoints Attack Defense Speed

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 Stunfisk 471 109 66 84 32

## 2 Mewtwo 680 106 110 90 130

## 3 Charmander 309 39 52 43 65

## 4 Grimer 325 80 80 50 25

## 5 Roggenrola 280 55 75 85 15

## 6 Larvesta 360 55 85 55 60

## 7 Metagross 600 80 135 130 70

## 8 Delcatty 380 70 65 65 70

## 9 Avalugg 514 95 117 184 28

## 10 Marowak 425 60 80 110 45

## # ℹ 40 more rows# set up pokemon

pokemon <- pokemon_total %>% select(-1) %>% as.matrix()

row.names(pokemon) <- pokemon_total$...1

head(pokemon)## Total HitPoints Attack Defense Speed

## Stunfisk 471 109 66 84 32

## Mewtwo 680 106 110 90 130

## Charmander 309 39 52 43 65

## Grimer 325 80 80 50 25

## Roggenrola 280 55 75 85 15

## Larvesta 360 55 85 55 60See how the scale of the variables differs. You can see that Total column has larger mean and sd.

# Mean of each variable

colMeans(pokemon)## Total HitPoints Attack Defense Speed

## 419.3 68.4 76.5 73.1 64.2# Standard deviation of each variable

apply(pokemon, 2, sd)## Total HitPoints Attack Defense Speed

## 119.0 25.7 28.0 30.1 33.7# PCA model with scaling: pr.with.scaling

pr.with.scaling <- prcomp(pokemon, scale = TRUE)

# PCA model without scaling: pr.without.scaling

pr.without.scaling <- prcomp(pokemon, scale = FALSE)

# Create biplots of both for comparison

biplot(pr.with.scaling)

biplot(pr.without.scaling)

The new Total column contains much more variation, on average, than the other four columns, so it has a disproportionate effect on the PCA model when scaling is not performed. After scaling the data, there’s a much more even distribution of the loading vectors.

21.4 Case study

21.4.1 Introduction

Example use case

Human breast mass data:

Ten features measured of each cell nuclei

Summary information is provided for each group of cells

Includes diagnosis: benign (not cancerous) and malignant (cancerous)

Analysis

Download data and prepare data for modeling

Exploratory data analysis (# observations, # features, etc.)

Perform PCA and interpret results

Complete two types of clustering

Understand and compare the two types

Combine PCA and clustering

21.4.1.1 Preparing the data

# load dataset

wisc.df <- read.csv("data/WisconsinCancer.csv")

glimpse(wisc.df)## Rows: 569

## Columns: 33

## $ id <int> 842302, 842517, 84300903, 84348301, 84358402, …

## $ diagnosis <chr> "M", "M", "M", "M", "M", "M", "M", "M", "M", "…

## $ radius_mean <dbl> 18.0, 20.6, 19.7, 11.4, 20.3, 12.4, 18.2, 13.7…

## $ texture_mean <dbl> 10.4, 17.8, 21.2, 20.4, 14.3, 15.7, 20.0, 20.8…

## $ perimeter_mean <dbl> 122.8, 132.9, 130.0, 77.6, 135.1, 82.6, 119.6,…

## $ area_mean <dbl> 1001, 1326, 1203, 386, 1297, 477, 1040, 578, 5…

## $ smoothness_mean <dbl> 0.1184, 0.0847, 0.1096, 0.1425, 0.1003, 0.1278…

## $ compactness_mean <dbl> 0.2776, 0.0786, 0.1599, 0.2839, 0.1328, 0.1700…

## $ concavity_mean <dbl> 0.3001, 0.0869, 0.1974, 0.2414, 0.1980, 0.1578…

## $ concave.points_mean <dbl> 0.1471, 0.0702, 0.1279, 0.1052, 0.1043, 0.0809…

## $ symmetry_mean <dbl> 0.242, 0.181, 0.207, 0.260, 0.181, 0.209, 0.17…

## $ fractal_dimension_mean <dbl> 0.0787, 0.0567, 0.0600, 0.0974, 0.0588, 0.0761…

## $ radius_se <dbl> 1.095, 0.543, 0.746, 0.496, 0.757, 0.335, 0.44…

## $ texture_se <dbl> 0.905, 0.734, 0.787, 1.156, 0.781, 0.890, 0.77…

## $ perimeter_se <dbl> 8.59, 3.40, 4.58, 3.44, 5.44, 2.22, 3.18, 3.86…

## $ area_se <dbl> 153.4, 74.1, 94.0, 27.2, 94.4, 27.2, 53.9, 51.…

## $ smoothness_se <dbl> 0.00640, 0.00522, 0.00615, 0.00911, 0.01149, 0…

## $ compactness_se <dbl> 0.04904, 0.01308, 0.04006, 0.07458, 0.02461, 0…

## $ concavity_se <dbl> 0.0537, 0.0186, 0.0383, 0.0566, 0.0569, 0.0367…

## $ concave.points_se <dbl> 0.01587, 0.01340, 0.02058, 0.01867, 0.01885, 0…

## $ symmetry_se <dbl> 0.0300, 0.0139, 0.0225, 0.0596, 0.0176, 0.0216…

## $ fractal_dimension_se <dbl> 0.00619, 0.00353, 0.00457, 0.00921, 0.00511, 0…

## $ radius_worst <dbl> 25.4, 25.0, 23.6, 14.9, 22.5, 15.5, 22.9, 17.1…

## $ texture_worst <dbl> 17.3, 23.4, 25.5, 26.5, 16.7, 23.8, 27.7, 28.1…

## $ perimeter_worst <dbl> 184.6, 158.8, 152.5, 98.9, 152.2, 103.4, 153.2…

## $ area_worst <dbl> 2019, 1956, 1709, 568, 1575, 742, 1606, 897, 7…

## $ smoothness_worst <dbl> 0.162, 0.124, 0.144, 0.210, 0.137, 0.179, 0.14…

## $ compactness_worst <dbl> 0.666, 0.187, 0.424, 0.866, 0.205, 0.525, 0.25…

## $ concavity_worst <dbl> 0.7119, 0.2416, 0.4504, 0.6869, 0.4000, 0.5355…

## $ concave.points_worst <dbl> 0.2654, 0.1860, 0.2430, 0.2575, 0.1625, 0.1741…

## $ symmetry_worst <dbl> 0.460, 0.275, 0.361, 0.664, 0.236, 0.399, 0.30…

## $ fractal_dimension_worst <dbl> 0.1189, 0.0890, 0.0876, 0.1730, 0.0768, 0.1244…

## $ X <lgl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…# Convert the features of the data: wisc.data

wisc.data <- as.matrix(wisc.df[, 3:32])

head(wisc.data, 2)## radius_mean texture_mean perimeter_mean area_mean smoothness_mean

## [1,] 18.0 10.4 123 1001 0.1184

## [2,] 20.6 17.8 133 1326 0.0847

## compactness_mean concavity_mean concave.points_mean symmetry_mean

## [1,] 0.2776 0.3001 0.1471 0.242

## [2,] 0.0786 0.0869 0.0702 0.181

## fractal_dimension_mean radius_se texture_se perimeter_se area_se

## [1,] 0.0787 1.095 0.905 8.59 153.4

## [2,] 0.0567 0.543 0.734 3.40 74.1

## smoothness_se compactness_se concavity_se concave.points_se symmetry_se

## [1,] 0.00640 0.0490 0.0537 0.0159 0.0300

## [2,] 0.00522 0.0131 0.0186 0.0134 0.0139

## fractal_dimension_se radius_worst texture_worst perimeter_worst area_worst

## [1,] 0.00619 25.4 17.3 185 2019

## [2,] 0.00353 25.0 23.4 159 1956

## smoothness_worst compactness_worst concavity_worst concave.points_worst

## [1,] 0.162 0.666 0.712 0.265

## [2,] 0.124 0.187 0.242 0.186

## symmetry_worst fractal_dimension_worst

## [1,] 0.460 0.119

## [2,] 0.275 0.089# Set the row names of wisc.data

row.names(wisc.data) <- wisc.df$id

head(wisc.data, 2)## radius_mean texture_mean perimeter_mean area_mean smoothness_mean

## 842302 18.0 10.4 123 1001 0.1184

## 842517 20.6 17.8 133 1326 0.0847

## compactness_mean concavity_mean concave.points_mean symmetry_mean

## 842302 0.2776 0.3001 0.1471 0.242

## 842517 0.0786 0.0869 0.0702 0.181

## fractal_dimension_mean radius_se texture_se perimeter_se area_se

## 842302 0.0787 1.095 0.905 8.59 153.4

## 842517 0.0567 0.543 0.734 3.40 74.1

## smoothness_se compactness_se concavity_se concave.points_se symmetry_se

## 842302 0.00640 0.0490 0.0537 0.0159 0.0300

## 842517 0.00522 0.0131 0.0186 0.0134 0.0139

## fractal_dimension_se radius_worst texture_worst perimeter_worst

## 842302 0.00619 25.4 17.3 185

## 842517 0.00353 25.0 23.4 159

## area_worst smoothness_worst compactness_worst concavity_worst

## 842302 2019 0.162 0.666 0.712

## 842517 1956 0.124 0.187 0.242

## concave.points_worst symmetry_worst fractal_dimension_worst

## 842302 0.265 0.460 0.119

## 842517 0.186 0.275 0.089# Create diagnosis vector, 1 = malignant ("M"), 0

diagnosis <- as.numeric(wisc.df$diagnosis == "M")

str(diagnosis)## num [1:569] 1 1 1 1 1 1 1 1 1 1 ...21.4.1.2 Exploratory data analysis

How many observations are in this dataset?

# ans: 569

dim(wisc.data)## [1] 569 30How many variables/features in the data are suffixed with _mean?

# detect string pattern

str_detect(colnames(wisc.data), "_mean") %>% sum()## [1] 10How many of the observations have a malignant diagnosis?

# malignant diagnosis = 1

sum(diagnosis)## [1] 21221.4.1.3 Performing PCA

The next step in your analysis is to perform PCA on wisc.data.

It’s important to check if the data need to be scaled before performing PCA. Recall two common reasons for scaling data:

The input variables use different units of measurement.

The input variables have significantly different variances.

# Check column means

colMeans(wisc.data)## radius_mean texture_mean perimeter_mean

## 14.12729 19.28965 91.96903

## area_mean smoothness_mean compactness_mean

## 654.88910 0.09636 0.10434

## concavity_mean concave.points_mean symmetry_mean

## 0.08880 0.04892 0.18116

## fractal_dimension_mean radius_se texture_se

## 0.06280 0.40517 1.21685

## perimeter_se area_se smoothness_se

## 2.86606 40.33708 0.00704

## compactness_se concavity_se concave.points_se

## 0.02548 0.03189 0.01180

## symmetry_se fractal_dimension_se radius_worst

## 0.02054 0.00379 16.26919

## texture_worst perimeter_worst area_worst

## 25.67722 107.26121 880.58313

## smoothness_worst compactness_worst concavity_worst

## 0.13237 0.25427 0.27219

## concave.points_worst symmetry_worst fractal_dimension_worst

## 0.11461 0.29008 0.08395# Check standard deviations

apply(wisc.data, 2, sd)## radius_mean texture_mean perimeter_mean

## 3.52405 4.30104 24.29898

## area_mean smoothness_mean compactness_mean

## 351.91413 0.01406 0.05281

## concavity_mean concave.points_mean symmetry_mean

## 0.07972 0.03880 0.02741

## fractal_dimension_mean radius_se texture_se

## 0.00706 0.27731 0.55165

## perimeter_se area_se smoothness_se

## 2.02185 45.49101 0.00300

## compactness_se concavity_se concave.points_se

## 0.01791 0.03019 0.00617

## symmetry_se fractal_dimension_se radius_worst

## 0.00827 0.00265 4.83324

## texture_worst perimeter_worst area_worst

## 6.14626 33.60254 569.35699

## smoothness_worst compactness_worst concavity_worst

## 0.02283 0.15734 0.20862

## concave.points_worst symmetry_worst fractal_dimension_worst

## 0.06573 0.06187 0.01806# Execute PCA, scaling if appropriate: wisc.pr

wisc.pr <- prcomp(wisc.data, scale = TRUE)

# Look at summary of results

summary(wisc.pr)## Importance of components:

## PC1 PC2 PC3 PC4 PC5 PC6 PC7 PC8

## Standard deviation 3.644 2.386 1.6787 1.407 1.284 1.0988 0.8217 0.6904

## Proportion of Variance 0.443 0.190 0.0939 0.066 0.055 0.0403 0.0225 0.0159

## Cumulative Proportion 0.443 0.632 0.7264 0.792 0.847 0.8876 0.9101 0.9260

## PC9 PC10 PC11 PC12 PC13 PC14 PC15

## Standard deviation 0.6457 0.5922 0.5421 0.51104 0.49128 0.39624 0.30681

## Proportion of Variance 0.0139 0.0117 0.0098 0.00871 0.00805 0.00523 0.00314

## Cumulative Proportion 0.9399 0.9516 0.9614 0.97007 0.97812 0.98335 0.98649

## PC16 PC17 PC18 PC19 PC20 PC21 PC22

## Standard deviation 0.28260 0.24372 0.22939 0.22244 0.17652 0.173 0.16565

## Proportion of Variance 0.00266 0.00198 0.00175 0.00165 0.00104 0.001 0.00091

## Cumulative Proportion 0.98915 0.99113 0.99288 0.99453 0.99557 0.997 0.99749

## PC23 PC24 PC25 PC26 PC27 PC28 PC29

## Standard deviation 0.15602 0.1344 0.12442 0.09043 0.08307 0.03987 0.02736

## Proportion of Variance 0.00081 0.0006 0.00052 0.00027 0.00023 0.00005 0.00002

## Cumulative Proportion 0.99830 0.9989 0.99942 0.99969 0.99992 0.99997 1.00000

## PC30

## Standard deviation 0.0115

## Proportion of Variance 0.0000

## Cumulative Proportion 1.000021.4.1.4 Interpreting PCA results

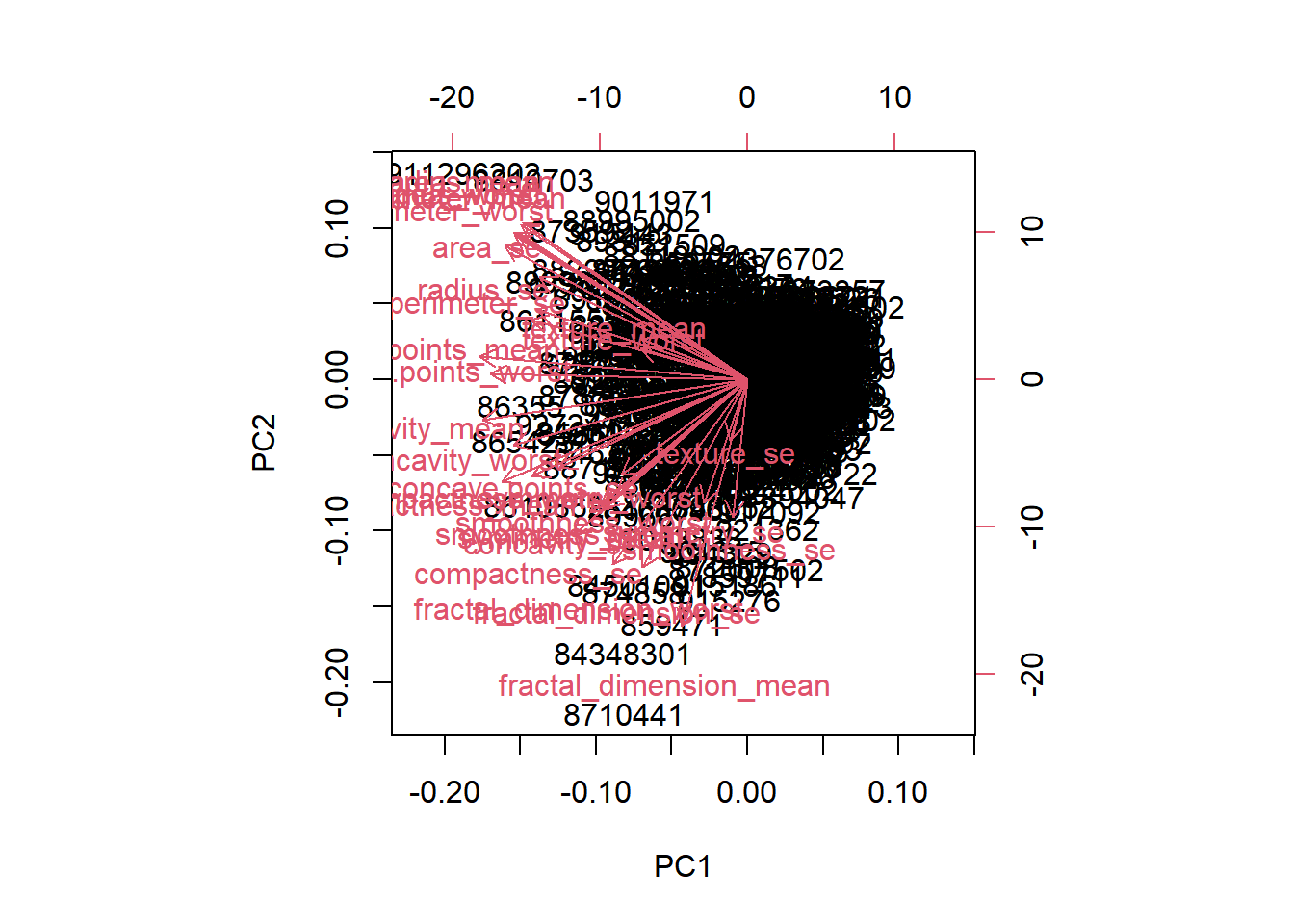

Now you’ll use some visualizations to better understand your PCA model.

What stands out to you about this plot? Is it easy or difficult to understand?

# Create a biplot of wisc.pr

biplot(wisc.pr)

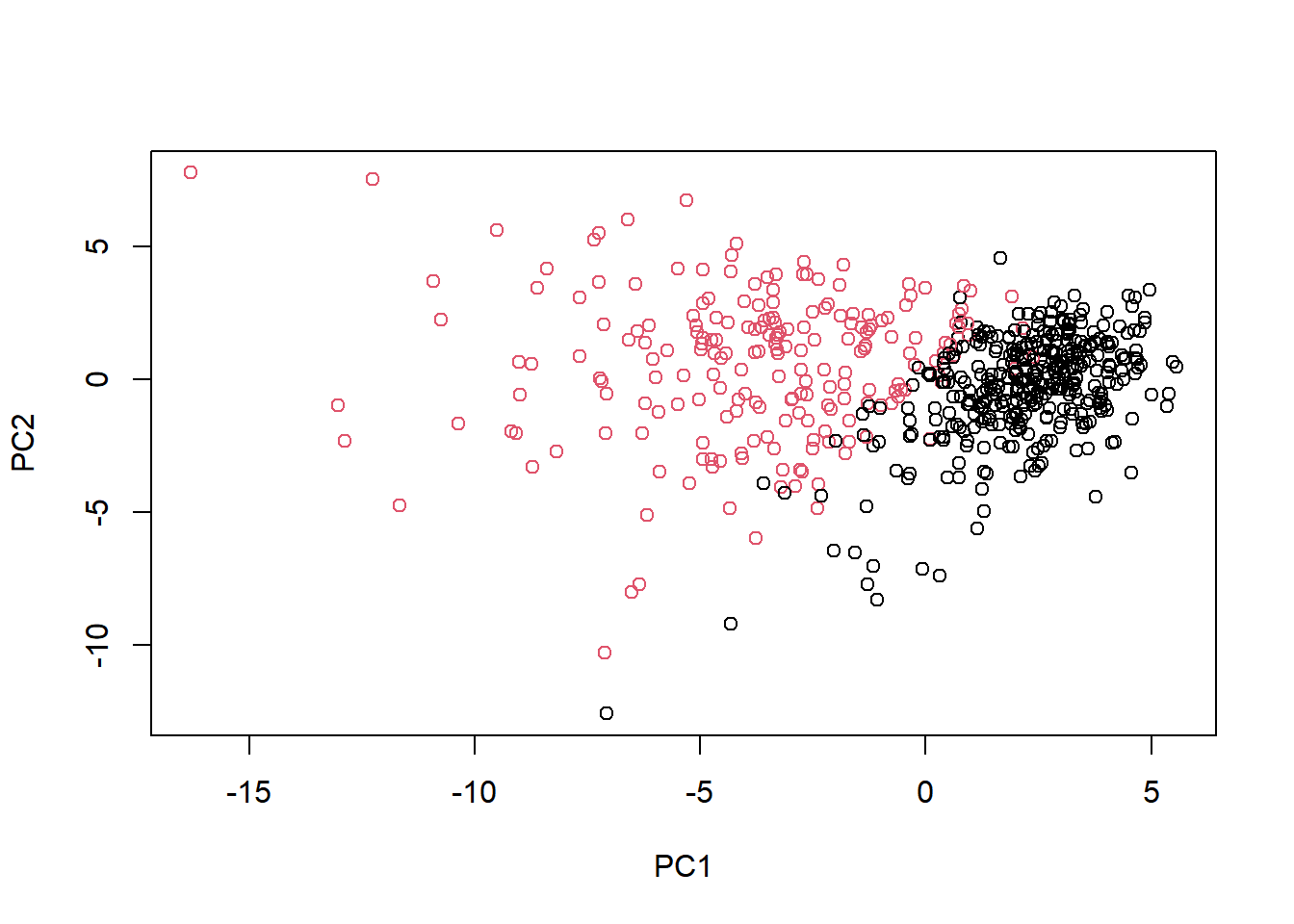

Scatter plot each observation by principal components 1 and 2, coloring the points by the diagnosis.

# Scatter plot observations by components 1 and 2

plot(wisc.pr$x[, c(1, 2)], col = (diagnosis + 1),

xlab = "PC1", ylab = "PC2")

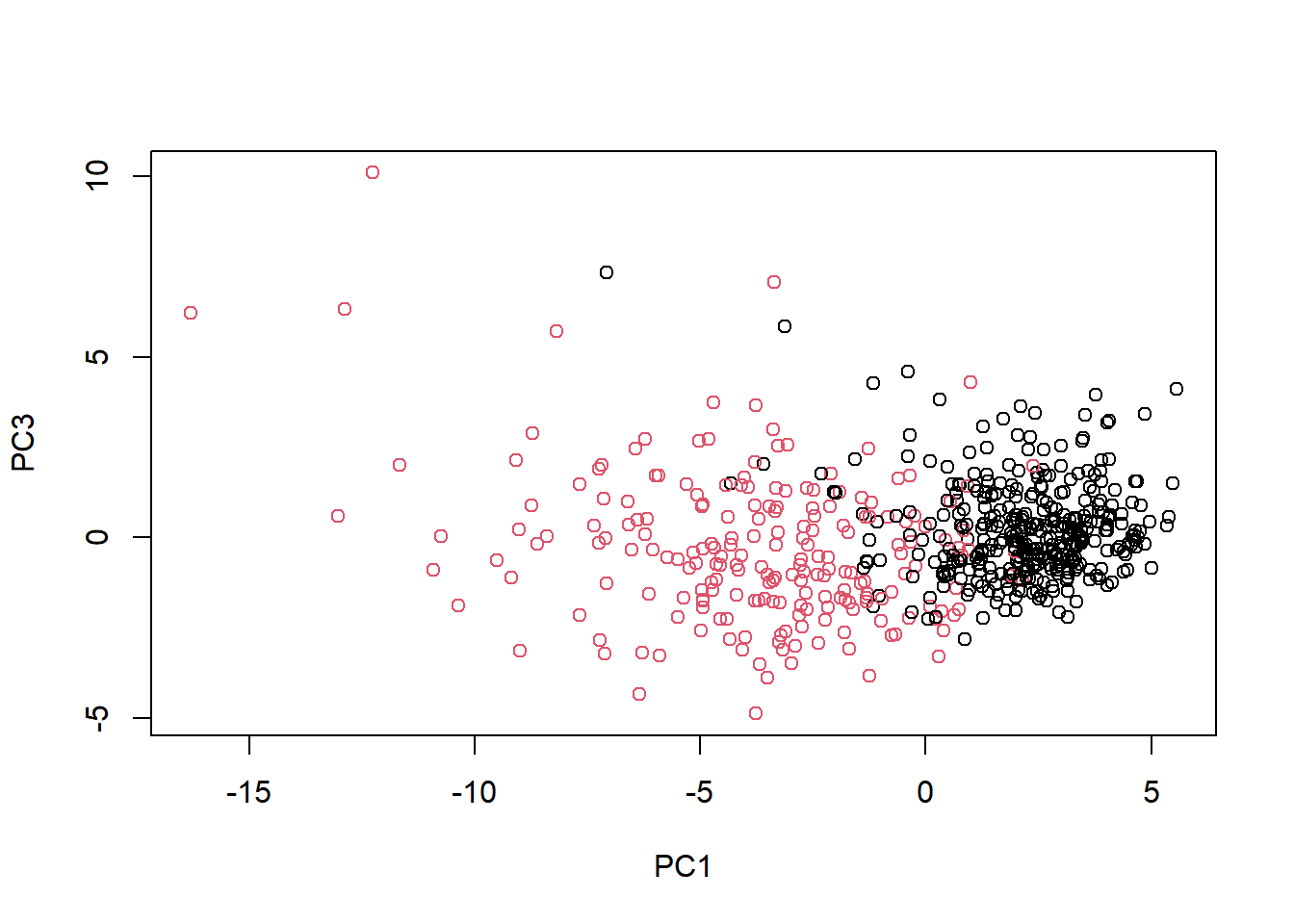

Repeat the same for principal components 1 and 3. What do you notice about these plots?

# Repeat for components 1 and 3

plot(wisc.pr$x[, c(1, 3)], col = (diagnosis + 1),

xlab = "PC1", ylab = "PC3")

Because principal component 2 explains more variance in the original data than principal component 3, you can see that the first plot has a cleaner cut separating the two subgroups.

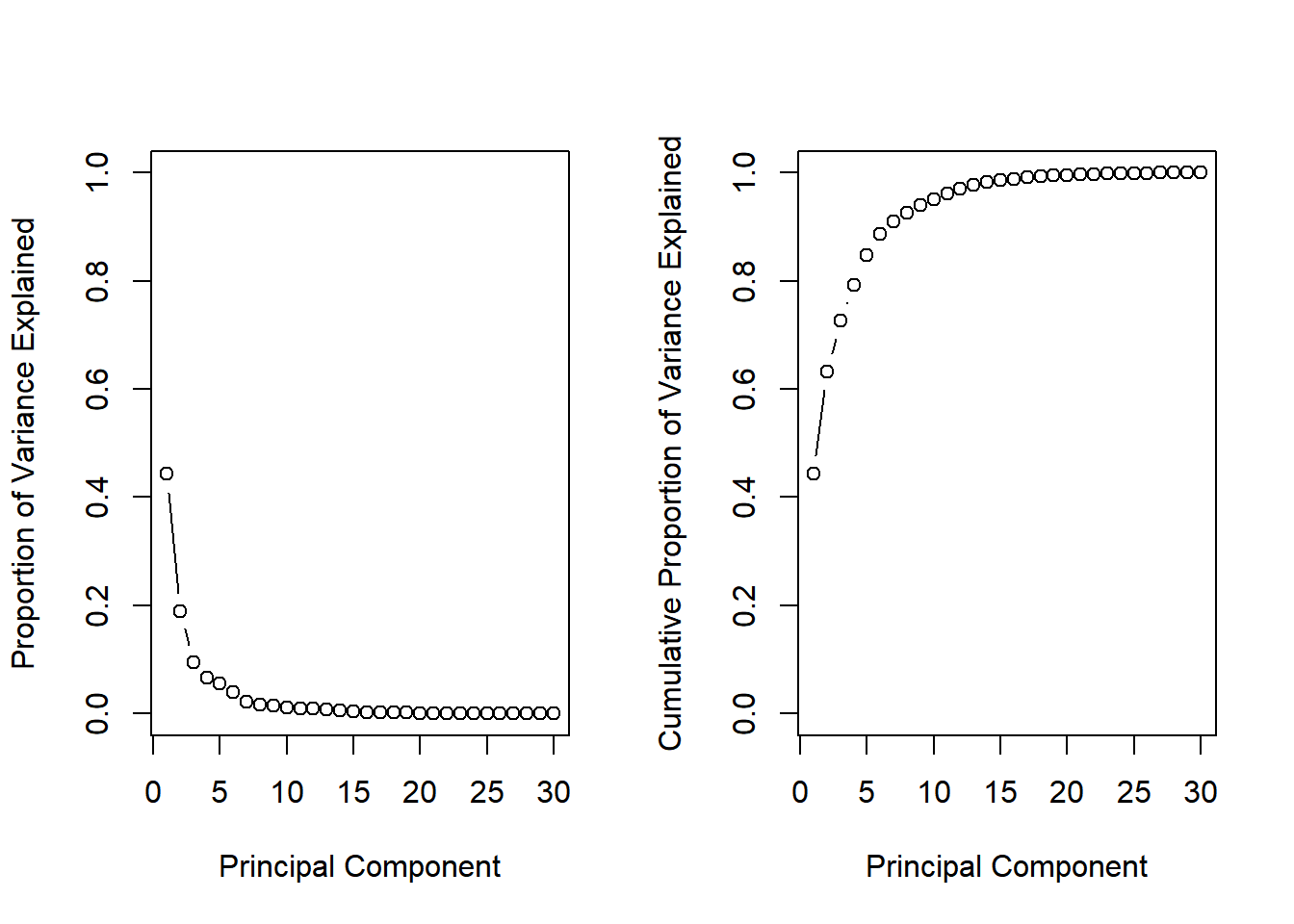

21.4.1.5 Variance explained

You will produce scree plots showing the proportion of variance explained as the number of principal components increases.

# Set up 1 x 2 plotting grid

par(mfrow = c(1, 2))

# Calculate variability of each component

pr.var <- wisc.pr$sdev^2

# Variance explained by each principal component: pve

pve <- pr.var / sum(pr.var)

# Plot variance explained for each principal component

plot(pve, xlab = "Principal Component",

ylab = "Proportion of Variance Explained",

ylim = c(0, 1), type = "b")

# Plot cumulative proportion of variance explained

plot(cumsum(pve), xlab = "Principal Component",

ylab = "Cumulative Proportion of Variance Explained",

ylim = c(0, 1), type = "b")

What is the minimum number of principal components needed to explain 80% of the variance in the data?

21.4.2 Next steps

Complete hierarchical clustering

Complete k-means clustering

Combine PCA and clustering

Contrast results of hierarchical clustering with diagnosis

Compare hierarchical and k-means clustering results

PCA as a pre-processing step for clustering

21.4.2.1 Communicating PCA results

The loadings, represented as vectors, explain the mapping from the original features to the principal components. The principal components are naturally ordered from the most variance explained to the least variance explained.

For the first principal component, what is the component of the loading vector for the feature concave.points_mean?

# -0.26085376

wisc.pr$rotation[,"PC1"]## radius_mean texture_mean perimeter_mean

## -0.2189 -0.1037 -0.2275

## area_mean smoothness_mean compactness_mean

## -0.2210 -0.1426 -0.2393

## concavity_mean concave.points_mean symmetry_mean

## -0.2584 -0.2609 -0.1382

## fractal_dimension_mean radius_se texture_se

## -0.0644 -0.2060 -0.0174

## perimeter_se area_se smoothness_se

## -0.2113 -0.2029 -0.0145

## compactness_se concavity_se concave.points_se

## -0.1704 -0.1536 -0.1834

## symmetry_se fractal_dimension_se radius_worst

## -0.0425 -0.1026 -0.2280

## texture_worst perimeter_worst area_worst

## -0.1045 -0.2366 -0.2249

## smoothness_worst compactness_worst concavity_worst

## -0.1280 -0.2101 -0.2288

## concave.points_worst symmetry_worst fractal_dimension_worst

## -0.2509 -0.1229 -0.1318What is the minimum number of principal components required to explain 80% of the variance of the data?

# PC5 Cumulative Proportion = 0.84734 & screen plot

summary(wisc.pr)## Importance of components:

## PC1 PC2 PC3 PC4 PC5 PC6 PC7 PC8

## Standard deviation 3.644 2.386 1.6787 1.407 1.284 1.0988 0.8217 0.6904

## Proportion of Variance 0.443 0.190 0.0939 0.066 0.055 0.0403 0.0225 0.0159

## Cumulative Proportion 0.443 0.632 0.7264 0.792 0.847 0.8876 0.9101 0.9260

## PC9 PC10 PC11 PC12 PC13 PC14 PC15

## Standard deviation 0.6457 0.5922 0.5421 0.51104 0.49128 0.39624 0.30681

## Proportion of Variance 0.0139 0.0117 0.0098 0.00871 0.00805 0.00523 0.00314

## Cumulative Proportion 0.9399 0.9516 0.9614 0.97007 0.97812 0.98335 0.98649

## PC16 PC17 PC18 PC19 PC20 PC21 PC22

## Standard deviation 0.28260 0.24372 0.22939 0.22244 0.17652 0.173 0.16565

## Proportion of Variance 0.00266 0.00198 0.00175 0.00165 0.00104 0.001 0.00091

## Cumulative Proportion 0.98915 0.99113 0.99288 0.99453 0.99557 0.997 0.99749

## PC23 PC24 PC25 PC26 PC27 PC28 PC29

## Standard deviation 0.15602 0.1344 0.12442 0.09043 0.08307 0.03987 0.02736

## Proportion of Variance 0.00081 0.0006 0.00052 0.00027 0.00023 0.00005 0.00002

## Cumulative Proportion 0.99830 0.9989 0.99942 0.99969 0.99992 0.99997 1.00000

## PC30

## Standard deviation 0.0115

## Proportion of Variance 0.0000

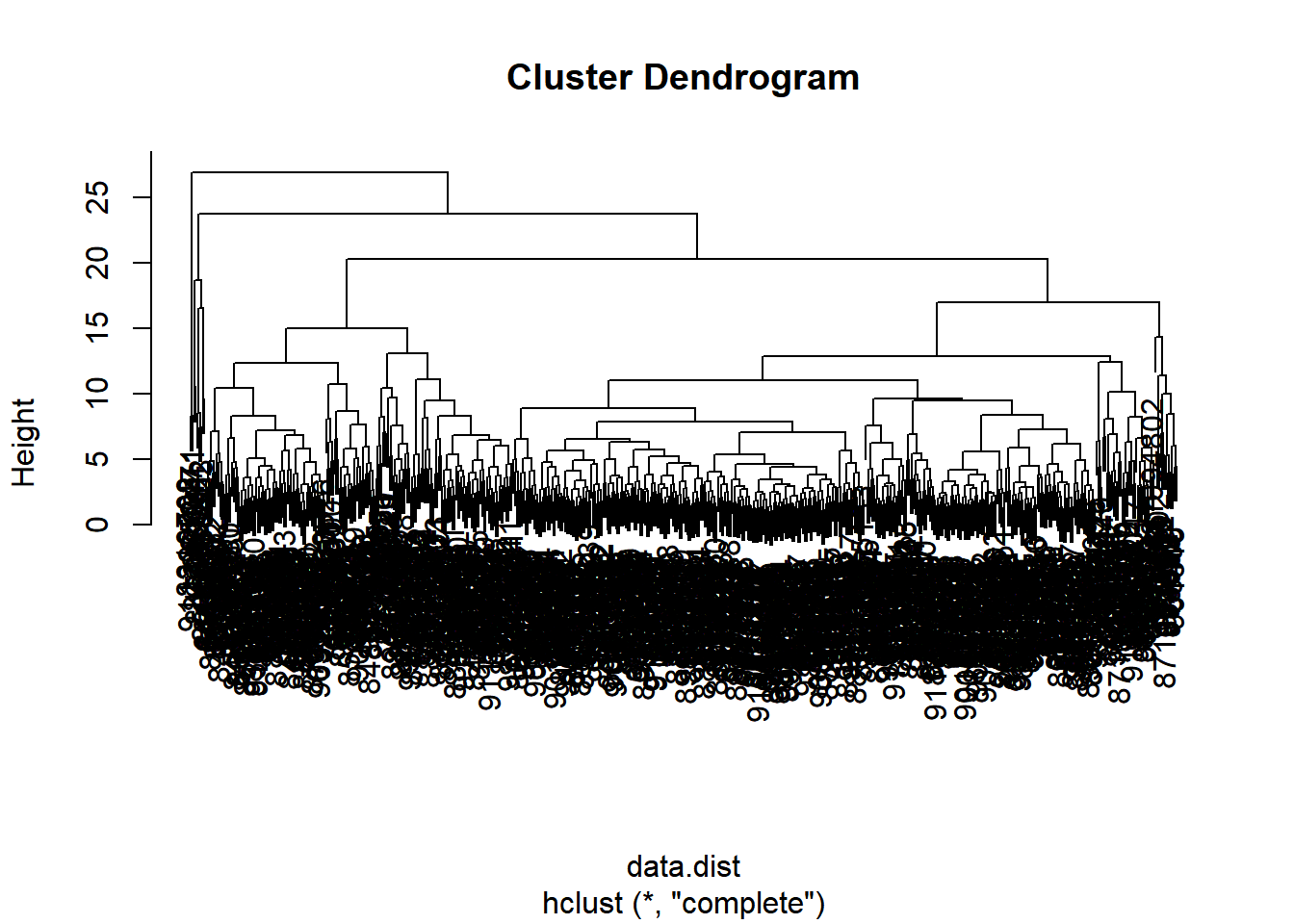

## Cumulative Proportion 1.000021.4.2.2 Hierarchical clustering

# Scale the wisc.data data: data.scaled

data.scaled <- scale(wisc.data)

# Calculate the (Euclidean) distances: data.dist

data.dist <- dist(data.scaled)

# Create a hierarchical clustering model: wisc.hclust

wisc.hclust <- hclust(data.dist, method = "complete")

# plot

plot(wisc.hclust)

What is the height at which the clustering model has 4 clusters? ANS: 20

21.4.2.3 Selecting number of clusters

You will compare the outputs from your hierarchical clustering model to the actual diagnoses. Normally when performing unsupervised learning like this, a target variable isn’t available. We do have it with this dataset, however, so it can be used to check the performance of the clustering model.

When performing supervised learning, using clustering to create new features may or may not improve the performance of the final model. This exercise will help you determine if, in this case, hierarchical clustering provides a promising new feature.

# Cut tree so that it has 4 clusters: wisc.hclust.clusters

wisc.hclust.clusters <- cutree(wisc.hclust, k = 4)

# Compare cluster membership to actual diagnoses

table(wisc.hclust.clusters, diagnosis)## diagnosis

## wisc.hclust.clusters 0 1

## 1 12 165

## 2 2 5

## 3 343 40

## 4 0 221.4.2.4 k-means clustering & compare

You will create a k-means clustering model on the Wisconsin breast cancer data and compare the results to the actual diagnoses and the results of your hierarchical clustering model.

# Create a k-means model on wisc.data: wisc.km

wisc.km <- kmeans(scale(wisc.data), centers = 2, nstart = 20)

# Compare k-means to actual diagnoses

table(wisc.km$cluster, diagnosis)## diagnosis

## 0 1

## 1 343 37

## 2 14 175# Compare k-means to hierarchical clustering

table(wisc.km$cluster, wisc.hclust.clusters)## wisc.hclust.clusters

## 1 2 3 4

## 1 17 0 363 0

## 2 160 7 20 2Looking at the second table you generated, it looks like clusters 1, 2, and 4 from the hierarchical clustering model can be interpreted as the cluster 1 equivalent from the k-means algorithm, and cluster 3 can be interpreted as the cluster 2 equivalent.

21.4.2.5 Clustering on PCA results

You will put together several steps you used earlier and, in doing so, you will experience some of the creativity that is typical in unsupervised learning.

Recall from earlier exercises that the PCA model required significantly fewer features to describe 80% and 95% of the variability of the data. In addition to normalizing data and potentially avoiding overfitting, PCA also uncorrelates the variables, sometimes improving the performance of other modeling techniques.

Let’s see if PCA improves or degrades the performance of hierarchical clustering.

# Using the minimum number of principal components required to describe at least 90% of the variability in the data

# PC7 = 0.91

summary(wisc.pr)## Importance of components:

## PC1 PC2 PC3 PC4 PC5 PC6 PC7 PC8

## Standard deviation 3.644 2.386 1.6787 1.407 1.284 1.0988 0.8217 0.6904

## Proportion of Variance 0.443 0.190 0.0939 0.066 0.055 0.0403 0.0225 0.0159

## Cumulative Proportion 0.443 0.632 0.7264 0.792 0.847 0.8876 0.9101 0.9260

## PC9 PC10 PC11 PC12 PC13 PC14 PC15

## Standard deviation 0.6457 0.5922 0.5421 0.51104 0.49128 0.39624 0.30681

## Proportion of Variance 0.0139 0.0117 0.0098 0.00871 0.00805 0.00523 0.00314

## Cumulative Proportion 0.9399 0.9516 0.9614 0.97007 0.97812 0.98335 0.98649

## PC16 PC17 PC18 PC19 PC20 PC21 PC22

## Standard deviation 0.28260 0.24372 0.22939 0.22244 0.17652 0.173 0.16565

## Proportion of Variance 0.00266 0.00198 0.00175 0.00165 0.00104 0.001 0.00091

## Cumulative Proportion 0.98915 0.99113 0.99288 0.99453 0.99557 0.997 0.99749

## PC23 PC24 PC25 PC26 PC27 PC28 PC29

## Standard deviation 0.15602 0.1344 0.12442 0.09043 0.08307 0.03987 0.02736

## Proportion of Variance 0.00081 0.0006 0.00052 0.00027 0.00023 0.00005 0.00002

## Cumulative Proportion 0.99830 0.9989 0.99942 0.99969 0.99992 0.99997 1.00000

## PC30

## Standard deviation 0.0115

## Proportion of Variance 0.0000

## Cumulative Proportion 1.0000Create a hierarchical clustering model with complete linkage.

# Create a hierarchical clustering model: wisc.pr.hclust

wisc.pr.hclust <- hclust(dist(wisc.pr$x[, 1:7]), method = "complete")

# Cut model into 4 clusters: wisc.pr.hclust.clusters

wisc.pr.hclust.clusters <- cutree(wisc.pr.hclust, k = 4)

# Compare to actual diagnoses

table(wisc.pr.hclust.clusters, diagnosis)## diagnosis

## wisc.pr.hclust.clusters 0 1

## 1 5 113

## 2 350 97

## 3 2 0

## 4 0 2# Compare previous k-means and actual diagnosis

table(wisc.km$cluster, diagnosis)## diagnosis

## 0 1

## 1 343 37

## 2 14 175# Compare previous hierarchical and actual diagnosis

table(wisc.hclust.clusters, diagnosis)## diagnosis

## wisc.hclust.clusters 0 1

## 1 12 165

## 2 2 5

## 3 343 40

## 4 0 2