Chapter 12 Introduction to Writing Functions

12.1 Write a Function

12.1.1 Why write functions?

Benefits of writing functions:

Functions eliminate repetition from your code, which can reduce your workload and help avoid errors.

Functions also allow code reuse and sharing.

12.1.2 Convert scripts into functions

Make a template

Paste your script into the body

Choose the arguments

Replace specific values with arguments

Generalize variable names

Remove the final assignment

12.1.2.1 First function

# Your script, from a previous step

# Sample from coin_sides once

coin_sides <- c("head", "tail")

sample(coin_sides, 1)

## [1] "tail"

# Paste your script into the function body

toss_coin <- function() {

coin_sides <- c("head", "tail")

sample(coin_sides, 1)

}

# Call your function

toss_coin()

## [1] "head"

12.1.2.2 Inputs to functions

The inputs to functions are called arguments.

# Update the function to return n coin tosses

toss_coin <- function(n_flips) {

coin_sides <- c("head", "tail")

sample(coin_sides, size = n_flips, replace = TRUE)

}

# Generate 10 coin tosses

toss_coin(10)

## [1] "head" "head" "tail" "tail" "tail" "tail" "tail" "tail" "tail" "head"

12.1.2.3 Multiple inputs to functions

If a function should have more than one argument, list them in the function signature, separated by commas.

Bias the coin by weighting the sampling. Specify the prob argument so that heads are sampled with probability p_head (and tails are sampled with probability 1 - p_head).

coin_sides <- c("head", "tail")

n_flips <- 10

p_head <- 0.8

# Define a vector of weights

weights <- c(p_head, 1 - p_head)

# Update so that heads are sampled with prob p_head

sample(coin_sides, n_flips, replace = TRUE, prob = weights)

## [1] "head" "head" "head" "head" "head" "head" "head" "head" "head" "head"

Update the definition of toss_coin() so it accepts an argument, p_head, and weights the samples using the code you wrote in the previous step.

# Update the function so heads have probability p_head

toss_coin <- function(n_flips, p_head) {

coin_sides <- c("head", "tail")

# Define a vector of weights

weights <- c(p_head, 1 - p_head)

# Modify the sampling to be weighted

sample(coin_sides, n_flips, replace = TRUE, prob = weights)

}

# Generate 10 coin tosses with an 80% chance of each head

toss_coin(10, 0.8)

## [1] "head" "head" "head" "head" "tail" "head" "head" "head" "tail" "head"

12.1.3 Readable code

Function names should contain a verb

Readability vs. typeability

Understanding code >> typing code

Code editors have autocomplete

You can alias common functions

Types of argument

Data arguments: what you compute on

Detail arguments: how you perform the computation

Data args should precede detail args
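As a minimal sketch of these guidelines, the hypothetical wrapper below (run_linear_regression() is an illustrative name, not part of R) gives lm() a verb-based name and places the data argument before the detail argument. It assumes only base R and the built-in cars dataset.

```r
# Hypothetical wrapper: verb-based name, data argument first,
# detail argument (the formula) second
run_linear_regression <- function(data, formula) {
  lm(formula, data = data)
}

# Because the data comes first, the call also works as the
# right-hand side of a pipe
model_cars <- run_linear_regression(cars, dist ~ speed)
coef(model_cars)
```

Putting the data first means the wrapper can be dropped into a pipeline without the placeholder tricks that lm() itself would need.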

Renaming GLM

R’s generalized linear regression function, glm(), suffers the same usability problems as lm(): its name is an acronym, and its formula and data arguments are in the wrong order.

To solve this exercise, you need to know two things about generalized linear regression:

glm() formulas are specified like lm() formulas: response is on the left, and explanatory variables are added on the right.

To model count data, set glm()’s family argument to poisson, making it a Poisson regression.

library(tidyverse)

snake_river_visits <- read_rds("data/snake_river_visits.rds")

# Run a generalized linear regression

glm(

# Model no. of visits vs. gender, income, travel

n_visits ~ gender + income + travel,

# Use the snake_river_visits dataset

data = snake_river_visits,

# Make it a Poisson regression

family = poisson

)

##

## Call: glm(formula = n_visits ~ gender + income + travel, family = poisson,

## data = snake_river_visits)

##

## Coefficients:

## (Intercept) genderfemale income($25k,$55k] income($55k,$95k]

## 4.0864 0.3740 -0.0199 -0.5807

## income($95k,$Inf) travel(0.25h,4h] travel(4h,Infh)

## -0.5782 -0.6271 -2.4230

##

## Degrees of Freedom: 345 Total (i.e. Null); 339 Residual

## (64 observations deleted due to missingness)

## Null Deviance: 18850

## Residual Deviance: 11530 AIC: 12860

Define a function, run_poisson_regression(), to run a Poisson regression. This should take two arguments, data and formula, and call glm().

# Write a function to run a Poisson regression

run_poisson_regression <- function(data, formula) {

glm(formula, data, family = poisson)

}

# Re-run the Poisson regression, using your function

model <- snake_river_visits %>%

run_poisson_regression(n_visits ~ gender + income + travel)

# Run this to see the predictions

# snake_river_explanatory %>%

# mutate(predicted_n_visits = predict(model, ., type = "response"))%>%

# arrange(desc(predicted_n_visits))

12.2 All About Arguments

12.2.1 Default arguments

12.2.1.1 Numeric defaults

Only set defaults for numeric detail arguments, not data arguments.

cut_by_quantile() converts a numeric vector into a categorical variable where quantiles define the cut points.

By specifying default arguments, you can make it easier to use. Let’s start with n, which specifies how many categories to cut x into.

# A numeric vector of the number of visits to Snake River

n_visits <- snake_river_visits$n_visits

# Set the default for n to 5

cut_by_quantile <- function(x, n = 5, na.rm, labels, interval_type) {

probs <- seq(0, 1, length.out = n + 1)

qtiles <- quantile(x, probs, na.rm = na.rm, names = FALSE)

right <- switch(interval_type, "(lo, hi]" = TRUE, "[lo, hi)" = FALSE)

cut(x, qtiles, labels = labels, right = right, include.lowest = TRUE)

}

# Remove the n argument from the call

cut_by_quantile(

n_visits,

na.rm = FALSE,

labels = c("very low", "low", "medium", "high", "very high"),

interval_type = "(lo, hi]"

)

## [1] very low very low very low very low very low very low very low

## [8] very low very low very low very low very low very low very low

## [15] very low very low very low very low very low high very high

## [22] high very low medium low very low very low very low

## [29] very low very low very low very high very high very high very high

## [36] very high high very high very high very high very high very high

## [43] medium very high very high very high medium medium low

## [50] high high high very high very high high high

## [57] very high medium very high high medium high very high

## [64] very high very high very high high high very high high

## [71] very low very high high high medium high high

## [78] high medium very high very high very high high high

## [85] high very low very high medium high very high high

## [92] high very high high very low very low medium very low

## [99] medium medium very high medium medium medium high

## [106] low high very high medium very high medium very high

## [113] low very high low very high high very low very low

## [120] very low very low low very low very low very low very low

## [127] very low very low medium very low very low low low

## [134] very low very low low very low very low very low low

## [141] low medium medium medium medium medium very low

## [148] very low low very low low medium very low very low

## [155] very low very low very high high very high high medium

## [162] very high medium very low high medium high high

## [169] very high high high very high very high high very high

## [176] high high medium very high high high high

## [183] very high very high very low high very high high high

## [190] medium very high high very high high very high high

## [197] very high high very high very low high very high very high

## [204] very low very low medium very high medium low medium

## [211] high medium very low medium very high high very high

## [218] high very high high low high medium very high

## [225] medium high high high very low high high

## [232] high very high high medium medium very low very low

## [239] very low very low medium low very low very low very low

## [246] medium high very low very low medium very low very low

## [253] very low very low very low very low very low very low very low

## [260] very low very high medium very low very high medium very high

## [267] medium low very high medium medium medium low

## [274] high medium high very high medium very high very high

## [281] medium medium very high high medium very high high

## [288] medium low very low medium very low very low very low

## [295] very low very low low very low very low very low very low

## [302] very low very low very low very low low very low very low

## [309] very low very low low very low very low low very low

## [316] very low very low very low low very low very low very low

## [323] very low very low low very low very low very low very low

## [330] very low very low very low very low very low very low very low

## [337] very low very low very low very low very low very low very low

## [344] very low very low medium very low very low very low very low

## [351] very low very low very low very low very low very low very low

## [358] very low low very low very low very low very low very low

## [365] very low very low very low very low very low very low low

## [372] very low very low very low very high high very high very high

## [379] very high high very high very high very high very high medium

## [386] medium medium high very high high high high

## [393] high high high high very high high very high

## [400] medium high low high very high low very low

## [407] medium very low medium low

## Levels: very low low medium high very high

12.2.1.2 Logical defaults

cut_by_quantile() is now slightly easier to use, but you still always have to specify the na.rm argument, which controls whether missing values are removed.

Where functions have an argument for removing missing values, the best practice is to not remove them by default (in case you hadn’t spotted that you had missing values). That means that the default for na.rm should be FALSE.

# Set the default for na.rm to FALSE

cut_by_quantile <- function(x, n = 5, na.rm = FALSE, labels, interval_type) {

probs <- seq(0, 1, length.out = n + 1)

qtiles <- quantile(x, probs, na.rm = na.rm, names = FALSE)

right <- switch(interval_type, "(lo, hi]" = TRUE, "[lo, hi)" = FALSE)

cut(x, qtiles, labels = labels, right = right, include.lowest = TRUE)

}

# Remove the na.rm argument from the call

cut_by_quantile(

n_visits,

labels = c("very low", "low", "medium", "high", "very high"),

interval_type = "(lo, hi]"

)

## [1] very low very low very low very low very low very low very low

## [8] very low very low very low very low very low very low very low

## [15] very low very low very low very low very low high very high

## [22] high very low medium low very low very low very low

## [29] very low very low very low very high very high very high very high

## [36] very high high very high very high very high very high very high

## [43] medium very high very high very high medium medium low

## [50] high high high very high very high high high

## [57] very high medium very high high medium high very high

## [64] very high very high very high high high very high high

## [71] very low very high high high medium high high

## [78] high medium very high very high very high high high

## [85] high very low very high medium high very high high

## [92] high very high high very low very low medium very low

## [99] medium medium very high medium medium medium high

## [106] low high very high medium very high medium very high

## [113] low very high low very high high very low very low

## [120] very low very low low very low very low very low very low

## [127] very low very low medium very low very low low low

## [134] very low very low low very low very low very low low

## [141] low medium medium medium medium medium very low

## [148] very low low very low low medium very low very low

## [155] very low very low very high high very high high medium

## [162] very high medium very low high medium high high

## [169] very high high high very high very high high very high

## [176] high high medium very high high high high

## [183] very high very high very low high very high high high

## [190] medium very high high very high high very high high

## [197] very high high very high very low high very high very high

## [204] very low very low medium very high medium low medium

## [211] high medium very low medium very high high very high

## [218] high very high high low high medium very high

## [225] medium high high high very low high high

## [232] high very high high medium medium very low very low

## [239] very low very low medium low very low very low very low

## [246] medium high very low very low medium very low very low

## [253] very low very low very low very low very low very low very low

## [260] very low very high medium very low very high medium very high

## [267] medium low very high medium medium medium low

## [274] high medium high very high medium very high very high

## [281] medium medium very high high medium very high high

## [288] medium low very low medium very low very low very low

## [295] very low very low low very low very low very low very low

## [302] very low very low very low very low low very low very low

## [309] very low very low low very low very low low very low

## [316] very low very low very low low very low very low very low

## [323] very low very low low very low very low very low very low

## [330] very low very low very low very low very low very low very low

## [337] very low very low very low very low very low very low very low

## [344] very low very low medium very low very low very low very low

## [351] very low very low very low very low very low very low very low

## [358] very low low very low very low very low very low very low

## [365] very low very low very low very low very low very low low

## [372] very low very low very low very high high very high very high

## [379] very high high very high very high very high very high medium

## [386] medium medium high very high high high high

## [393] high high high high very high high very high

## [400] medium high low high very high low very low

## [407] medium very low medium low

## Levels: very low low medium high very high

12.2.1.3 NULL defaults

The cut() function used by cut_by_quantile() can automatically provide sensible labels for each category.

The code to generate these labels is pretty complicated, so rather than appearing in the function signature directly, its labels argument defaults to NULL, and the calculation details are shown on the ?cut help page.

(If you use this capability, make sure to document how the argument behaves in the function’s help page.)
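The NULL-default pattern can be sketched in a few lines. The function below is hypothetical (describe_values() and its label argument are illustrative names only): the signature stays short, and the body computes a fallback only when the caller leaves the argument as NULL.

```r
# Hypothetical example of a NULL default: the fallback label is
# too fiddly for the signature, so it is computed in the body
describe_values <- function(x, label = NULL) {
  if (is.null(label)) {
    # No label supplied, so build one from the data
    label <- paste0("n = ", length(x))
  }
  paste(label, "mean =", mean(x))
}

default_label <- describe_values(c(1, 3))
custom_label <- describe_values(c(1, 3), label = "demo")
```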

# Set the default for labels to NULL

cut_by_quantile <- function(x, n = 5, na.rm = FALSE, labels = NULL, interval_type) {

probs <- seq(0, 1, length.out = n + 1)

qtiles <- quantile(x, probs, na.rm = na.rm, names = FALSE)

right <- switch(interval_type, "(lo, hi]" = TRUE, "[lo, hi)" = FALSE)

cut(x, qtiles, labels = labels, right = right, include.lowest = TRUE)

}

# Remove the labels argument from the call

cut_by_quantile(

n_visits,

interval_type = "(lo, hi]"

)

## [1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [9] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [17] [0,1] [0,1] [0,1] (10,35] (35,350] (10,35] [0,1] (2,10]

## [25] (1,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] (35,350]

## [33] (35,350] (35,350] (35,350] (35,350] (10,35] (35,350] (35,350] (35,350]

## [41] (35,350] (35,350] (2,10] (35,350] (35,350] (35,350] (2,10] (2,10]

## [49] (1,2] (10,35] (10,35] (10,35] (35,350] (35,350] (10,35] (10,35]

## [57] (35,350] (2,10] (35,350] (10,35] (2,10] (10,35] (35,350] (35,350]

## [65] (35,350] (35,350] (10,35] (10,35] (35,350] (10,35] [0,1] (35,350]

## [73] (10,35] (10,35] (2,10] (10,35] (10,35] (10,35] (2,10] (35,350]

## [81] (35,350] (35,350] (10,35] (10,35] (10,35] [0,1] (35,350] (2,10]

## [89] (10,35] (35,350] (10,35] (10,35] (35,350] (10,35] [0,1] [0,1]

## [97] (2,10] [0,1] (2,10] (2,10] (35,350] (2,10] (2,10] (2,10]

## [105] (10,35] (1,2] (10,35] (35,350] (2,10] (35,350] (2,10] (35,350]

## [113] (1,2] (35,350] (1,2] (35,350] (10,35] [0,1] [0,1] [0,1]

## [121] [0,1] (1,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [129] (2,10] [0,1] [0,1] (1,2] (1,2] [0,1] [0,1] (1,2]

## [137] [0,1] [0,1] [0,1] (1,2] (1,2] (2,10] (2,10] (2,10]

## [145] (2,10] (2,10] [0,1] [0,1] (1,2] [0,1] (1,2] (2,10]

## [153] [0,1] [0,1] [0,1] [0,1] (35,350] (10,35] (35,350] (10,35]

## [161] (2,10] (35,350] (2,10] [0,1] (10,35] (2,10] (10,35] (10,35]

## [169] (35,350] (10,35] (10,35] (35,350] (35,350] (10,35] (35,350] (10,35]

## [177] (10,35] (2,10] (35,350] (10,35] (10,35] (10,35] (35,350] (35,350]

## [185] [0,1] (10,35] (35,350] (10,35] (10,35] (2,10] (35,350] (10,35]

## [193] (35,350] (10,35] (35,350] (10,35] (35,350] (10,35] (35,350] [0,1]

## [201] (10,35] (35,350] (35,350] [0,1] [0,1] (2,10] (35,350] (2,10]

## [209] (1,2] (2,10] (10,35] (2,10] [0,1] (2,10] (35,350] (10,35]

## [217] (35,350] (10,35] (35,350] (10,35] (1,2] (10,35] (2,10] (35,350]

## [225] (2,10] (10,35] (10,35] (10,35] [0,1] (10,35] (10,35] (10,35]

## [233] (35,350] (10,35] (2,10] (2,10] [0,1] [0,1] [0,1] [0,1]

## [241] (2,10] (1,2] [0,1] [0,1] [0,1] (2,10] (10,35] [0,1]

## [249] [0,1] (2,10] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [257] [0,1] [0,1] [0,1] [0,1] (35,350] (2,10] [0,1] (35,350]

## [265] (2,10] (35,350] (2,10] (1,2] (35,350] (2,10] (2,10] (2,10]

## [273] (1,2] (10,35] (2,10] (10,35] (35,350] (2,10] (35,350] (35,350]

## [281] (2,10] (2,10] (35,350] (10,35] (2,10] (35,350] (10,35] (2,10]

## [289] (1,2] [0,1] (2,10] [0,1] [0,1] [0,1] [0,1] [0,1]

## [297] (1,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [305] [0,1] (1,2] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1]

## [313] [0,1] (1,2] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1]

## [321] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1] [0,1] [0,1]

## [329] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [337] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [345] [0,1] (2,10] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [353] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1]

## [361] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [369] [0,1] [0,1] (1,2] [0,1] [0,1] [0,1] (35,350] (10,35]

## [377] (35,350] (35,350] (35,350] (10,35] (35,350] (35,350] (35,350] (35,350]

## [385] (2,10] (2,10] (2,10] (10,35] (35,350] (10,35] (10,35] (10,35]

## [393] (10,35] (10,35] (10,35] (10,35] (35,350] (10,35] (35,350] (2,10]

## [401] (10,35] (1,2] (10,35] (35,350] (1,2] [0,1] (2,10] [0,1]

## [409] (2,10] (1,2]

## Levels: [0,1] (1,2] (2,10] (10,35] (35,350]

12.2.1.4 Categorical defaults

Pass a character vector in the signature.

Call match.arg() in the body.

The pattern for categorical defaults is:

function(cat_arg = c("choice1", "choice2")) {

cat_arg <- match.arg(cat_arg)

}

When cutting up a numeric vector, you need to decide what happens if a value lands exactly on a boundary: it can go into either the lower interval or the higher interval. That is, you can choose intervals that include values at the top boundary but not the bottom, or the opposite.

In mathematical terminology,

“open on the left, closed on the right” = (lo, hi]

“closed on the left, open on the right” = [lo, hi)

cut_by_quantile() should allow these two choices.
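To make the boundary behaviour concrete, here is a small sketch using base R's cut() directly. The values and breaks are made up for illustration; the point is only where the value 5, which sits exactly on a break, ends up.

```r
# Made-up data: 5 sits exactly on the middle break
x <- c(1, 5, 10)
breaks <- c(0, 5, 10)

# (lo, hi]: intervals are closed on the right
right_closed <- cut(x, breaks, right = TRUE)

# [lo, hi): intervals are closed on the left; include.lowest = TRUE
# keeps the largest value (10) inside the last interval
left_closed <- cut(x, breaks, right = FALSE, include.lowest = TRUE)

# Compare which interval (1st or 2nd) each value was assigned to
as.integer(right_closed)
as.integer(left_closed)
```

With right = TRUE the value 5 is placed in the first interval, and with right = FALSE it is placed in the second.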

# Take "rank()" for example, look at the ties.method argument

head(rank, 7)

##

## 1 function (x, na.last = TRUE, ties.method = c("average", "first",

## 2 "last", "random", "max", "min"))

## 3 {

## 4 stopifnot(length(na.last) == 1L)

## 5 nas <- is.na(x)

## 6 nm <- names(x)

## 7 ties.method <- match.arg(ties.method)

# Set the categories for interval_type to "(lo, hi]" and "[lo, hi)"

cut_by_quantile <- function(x, n = 5, na.rm = FALSE, labels = NULL,

interval_type = c("(lo, hi]", "[lo, hi)")) {

# Match the interval_type argument

interval_type <- match.arg(interval_type)

probs <- seq(0, 1, length.out = n + 1)

qtiles <- quantile(x, probs, na.rm = na.rm, names = FALSE)

right <- switch(interval_type, "(lo, hi]" = TRUE, "[lo, hi)" = FALSE)

cut(x, qtiles, labels = labels, right = right, include.lowest = TRUE)

}

# Remove the interval_type argument from the call

cut_by_quantile(n_visits)

## [1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [9] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [17] [0,1] [0,1] [0,1] (10,35] (35,350] (10,35] [0,1] (2,10]

## [25] (1,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] (35,350]

## [33] (35,350] (35,350] (35,350] (35,350] (10,35] (35,350] (35,350] (35,350]

## [41] (35,350] (35,350] (2,10] (35,350] (35,350] (35,350] (2,10] (2,10]

## [49] (1,2] (10,35] (10,35] (10,35] (35,350] (35,350] (10,35] (10,35]

## [57] (35,350] (2,10] (35,350] (10,35] (2,10] (10,35] (35,350] (35,350]

## [65] (35,350] (35,350] (10,35] (10,35] (35,350] (10,35] [0,1] (35,350]

## [73] (10,35] (10,35] (2,10] (10,35] (10,35] (10,35] (2,10] (35,350]

## [81] (35,350] (35,350] (10,35] (10,35] (10,35] [0,1] (35,350] (2,10]

## [89] (10,35] (35,350] (10,35] (10,35] (35,350] (10,35] [0,1] [0,1]

## [97] (2,10] [0,1] (2,10] (2,10] (35,350] (2,10] (2,10] (2,10]

## [105] (10,35] (1,2] (10,35] (35,350] (2,10] (35,350] (2,10] (35,350]

## [113] (1,2] (35,350] (1,2] (35,350] (10,35] [0,1] [0,1] [0,1]

## [121] [0,1] (1,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [129] (2,10] [0,1] [0,1] (1,2] (1,2] [0,1] [0,1] (1,2]

## [137] [0,1] [0,1] [0,1] (1,2] (1,2] (2,10] (2,10] (2,10]

## [145] (2,10] (2,10] [0,1] [0,1] (1,2] [0,1] (1,2] (2,10]

## [153] [0,1] [0,1] [0,1] [0,1] (35,350] (10,35] (35,350] (10,35]

## [161] (2,10] (35,350] (2,10] [0,1] (10,35] (2,10] (10,35] (10,35]

## [169] (35,350] (10,35] (10,35] (35,350] (35,350] (10,35] (35,350] (10,35]

## [177] (10,35] (2,10] (35,350] (10,35] (10,35] (10,35] (35,350] (35,350]

## [185] [0,1] (10,35] (35,350] (10,35] (10,35] (2,10] (35,350] (10,35]

## [193] (35,350] (10,35] (35,350] (10,35] (35,350] (10,35] (35,350] [0,1]

## [201] (10,35] (35,350] (35,350] [0,1] [0,1] (2,10] (35,350] (2,10]

## [209] (1,2] (2,10] (10,35] (2,10] [0,1] (2,10] (35,350] (10,35]

## [217] (35,350] (10,35] (35,350] (10,35] (1,2] (10,35] (2,10] (35,350]

## [225] (2,10] (10,35] (10,35] (10,35] [0,1] (10,35] (10,35] (10,35]

## [233] (35,350] (10,35] (2,10] (2,10] [0,1] [0,1] [0,1] [0,1]

## [241] (2,10] (1,2] [0,1] [0,1] [0,1] (2,10] (10,35] [0,1]

## [249] [0,1] (2,10] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [257] [0,1] [0,1] [0,1] [0,1] (35,350] (2,10] [0,1] (35,350]

## [265] (2,10] (35,350] (2,10] (1,2] (35,350] (2,10] (2,10] (2,10]

## [273] (1,2] (10,35] (2,10] (10,35] (35,350] (2,10] (35,350] (35,350]

## [281] (2,10] (2,10] (35,350] (10,35] (2,10] (35,350] (10,35] (2,10]

## [289] (1,2] [0,1] (2,10] [0,1] [0,1] [0,1] [0,1] [0,1]

## [297] (1,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [305] [0,1] (1,2] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1]

## [313] [0,1] (1,2] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1]

## [321] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1] [0,1] [0,1]

## [329] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [337] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [345] [0,1] (2,10] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [353] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] (1,2] [0,1]

## [361] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1]

## [369] [0,1] [0,1] (1,2] [0,1] [0,1] [0,1] (35,350] (10,35]

## [377] (35,350] (35,350] (35,350] (10,35] (35,350] (35,350] (35,350] (35,350]

## [385] (2,10] (2,10] (2,10] (10,35] (35,350] (10,35] (10,35] (10,35]

## [393] (10,35] (10,35] (10,35] (10,35] (35,350] (10,35] (35,350] (2,10]

## [401] (10,35] (1,2] (10,35] (35,350] (1,2] [0,1] (2,10] [0,1]

## [409] (2,10] (1,2]

## Levels: [0,1] (1,2] (2,10] (10,35] (35,350]

match.arg() handles throwing an error if the user types a value that wasn’t specified.
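A short sketch of both behaviours of match.arg(), using a hypothetical helper (pick_interval_type() is an illustrative name): with no input it selects the first choice, and with an unknown value it throws an error, which is captured here with tryCatch() so the script keeps running.

```r
# Hypothetical helper that only exposes the categorical argument
pick_interval_type <- function(interval_type = c("(lo, hi]", "[lo, hi)")) {
  match.arg(interval_type)
}

# No argument supplied: the first choice is used
default_choice <- pick_interval_type()

# A value that is not one of the choices: match.arg() throws an error,
# which is caught and stored rather than stopping the script
bad_choice <- tryCatch(
  pick_interval_type("[lo, hi]"),
  error = function(e) e
)
conditionMessage(bad_choice)
```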

12.2.2 Pass arguments between functions

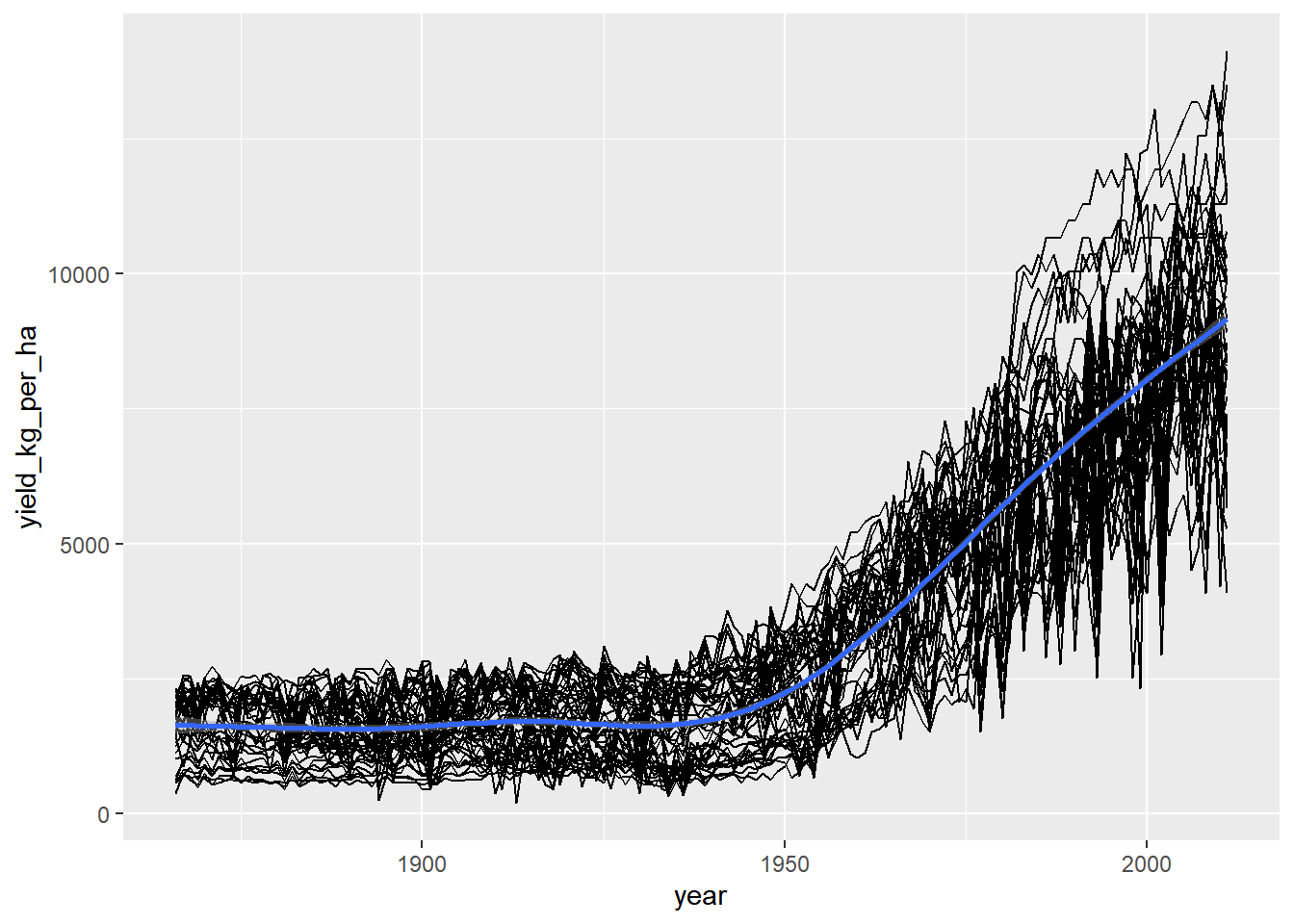

12.2.2.1 Harmonic mean

The harmonic mean is the reciprocal of the arithmetic mean of the reciprocals of the data. The harmonic mean is often used to average ratio data.
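As a quick worked example of that definition, with made-up numbers: for the values 1, 2 and 4 the reciprocals are 1, 0.5 and 0.25, their arithmetic mean is 1.75 / 3, and the reciprocal of that is 12 / 7.

```r
# Made-up data for illustration
x <- c(1, 2, 4)

# Reciprocal of the arithmetic mean of the reciprocals
harmonic <- 1 / mean(1 / x)

# The ordinary arithmetic mean, for comparison
arithmetic <- mean(x)

harmonic
arithmetic
```

For positive data that are not all equal, the harmonic mean is smaller than the arithmetic mean, as it is here.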

You’ll be using it on the price/earnings ratio of stocks in the Standard and Poor’s 500 index, provided as std_and_poor500. Price/earnings ratio is a measure of how expensive a stock is.

std_and_poor500 <- read_rds("data/std_and_poor500_with_pe_2019-06-21.rds")

glimpse(std_and_poor500)

## Rows: 505

## Columns: 5

## $ symbol <chr> "MMM", "ABT", "ABBV", "ABMD", "ACN", "ATVI", "ADBE", "AMD", "…

## $ company <chr> "3M Company", "Abbott Laboratories", "AbbVie Inc.", "ABIOMED …

## $ sector <chr> "Industrials", "Health Care", "Health Care", "Health Care", "…

## $ industry <chr> "Industrial Conglomerates", "Health Care Equipment", "Pharmac…

## $ pe_ratio <dbl> 18.31678, 57.66621, 22.43805, 45.63993, 27.00233, 20.13596, 5…

# Write a function to calculate the reciprocal

get_reciprocal <- function(x) {

1/x

}

# Write a function to calculate the harmonic mean

calc_harmonic_mean <- function(x) {

x %>%

get_reciprocal() %>%

mean() %>%

get_reciprocal()

}

# Calculate each sector Price/earnings ratio

std_and_poor500 %>%

# Group by sector

group_by(sector) %>%

# Summarize, calculating harmonic mean of P/E ratio

summarise(hmean_pe_ratio = calc_harmonic_mean(pe_ratio))

## # A tibble: 11 × 2

## sector hmean_pe_ratio

## <chr> <dbl>

## 1 Communication Services NA

## 2 Consumer Discretionary NA

## 3 Consumer Staples NA

## 4 Energy NA

## 5 Financials NA

## 6 Health Care NA

## 7 Industrials NA

## 8 Information Technology NA

## 9 Materials NA

## 10 Real Estate 32.5

## 11 Utilities NA

It looks like we have a problem though: most sectors have missing values.

12.2.2.2 Handling missing values

It would be useful for your function to be able to remove missing values before calculating.

Rather than writing your own code for this, you can outsource this functionality to mean().

# Add an na.rm arg with a default, and pass it to mean()

calc_harmonic_mean <- function(x, na.rm = FALSE) {

x %>%

get_reciprocal() %>%

mean(na.rm = na.rm) %>%

get_reciprocal()

}

std_and_poor500 %>%

# Group by sector

group_by(sector) %>%

# Summarize, calculating harmonic mean of P/E ratio, and remove missing value

summarise(hmean_pe_ratio = calc_harmonic_mean(pe_ratio, na.rm = TRUE))

## # A tibble: 11 × 2

## sector hmean_pe_ratio

## <chr> <dbl>

## 1 Communication Services 17.5

## 2 Consumer Discretionary 15.2

## 3 Consumer Staples 19.8

## 4 Energy 13.7

## 5 Financials 12.9

## 6 Health Care 26.6

## 7 Industrials 18.2

## 8 Information Technology 21.6

## 9 Materials 16.3

## 10 Real Estate 32.5

## 11 Utilities 23.9

12.2.2.3 Using ...

The tradeoff

Benefits

Less typing for you

No need to match signatures

Drawbacks

You need to trust the inner function

The interface is not as obvious to users

Rather than explicitly giving calc_harmonic_mean() an na.rm argument, you can use ... to simply “pass other arguments” to mean().

# Swap na.rm arg for ... in signature and body

calc_harmonic_mean <- function(x, ...) {

x %>%

get_reciprocal() %>%

mean(...) %>%

get_reciprocal()

}

std_and_poor500 %>%

# Group by sector

group_by(sector) %>%

# Summarize, calculating harmonic mean of P/E ratio, and remove missing value

summarise(hmean_pe_ratio = calc_harmonic_mean(pe_ratio, na.rm = TRUE))

## # A tibble: 11 × 2

## sector hmean_pe_ratio

## <chr> <dbl>

## 1 Communication Services 17.5

## 2 Consumer Discretionary 15.2

## 3 Consumer Staples 19.8

## 4 Energy 13.7

## 5 Financials 12.9

## 6 Health Care 26.6

## 7 Industrials 18.2

## 8 Information Technology 21.6

## 9 Materials 16.3

## 10 Real Estate 32.5

## 11 Utilities 23.9

Using ... doesn’t change how people use your function; it just means the function is more flexible.
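The drawback of having to trust the inner function can be seen in a small base R sketch. The function name calc_harmonic_mean_dots() and the data are illustrative only. Because mean() itself accepts ..., a misspelled argument name is silently ignored instead of raising an error.

```r
# Illustrative version without pipes: everything in ... goes to mean()
calc_harmonic_mean_dots <- function(x, ...) {
  1 / mean(1 / x, ...)
}

# Made-up data with one missing value
x <- c(1, 2, NA, 4)

# na.rm is forwarded to mean(), so the missing value is dropped
with_removal <- calc_harmonic_mean_dots(x, na.rm = TRUE)

# Nothing forwarded: the missing value propagates
without_removal <- calc_harmonic_mean_dots(x)

# Typo in the argument name: mean() ignores it, so the result is
# still NA and no error points out the mistake
typo_result <- calc_harmonic_mean_dots(x, na.rn = TRUE)
```

An explicit na.rm argument in the signature would have turned the typo into an "unused argument" error, which is the interface clarity that ... gives up.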

12.2.3 Checking arguments

12.2.3.1 Throwing errors

If a user provides a bad input to a function, the best course of action is to throw an error letting them know. The two rules are

Throw the error message as soon as you realize there is a problem (typically at the start of the function).

Make the error message easily understandable.

You can use the assert() function from the assert package to check inputs and throw an error when a check fails (no output means no problem).

library(assert)

calc_harmonic_mean <- function(x, na.rm = FALSE) {

# Assert that x is numeric

assert(is.numeric(x))

x %>%

get_reciprocal() %>%

mean(na.rm = na.rm) %>%

get_reciprocal()

}

# See what happens when you pass it strings

calc_harmonic_mean(std_and_poor500$sector)

Error: in calc_harmonic_mean(x = std_and_poor500$sector)

Failed checks:

is.numeric(x)

12.2.3.2 Custom error logic

Sometimes the assert() function in assert doesn’t give the most informative error message. For example, it reports which check failed, but it won’t say why that’s a problem. In that case, you can use a logical check, such as the is_*() functions, in conjunction with messages, warnings, or errors to define custom feedback.

The harmonic mean only makes sense when x has all positive values.

calc_harmonic_mean <- function(x, na.rm = FALSE) {

assert(is.numeric(x))

# Check if any values of x are non-positive

if(any(x <= 0, na.rm = TRUE)) {

# Throw an error

stop("x contains non-positive values, so the harmonic mean makes no sense.")

}

x %>%

get_reciprocal() %>%

mean(na.rm = na.rm) %>%

get_reciprocal()

}

# See what happens when you pass it negative numbers

calc_harmonic_mean(std_and_poor500$pe_ratio - 20)

Error in calc_harmonic_mean(std_and_poor500$pe_ratio - 20) :

x contains non-positive values, so the harmonic mean makes no sense.

Explaining what went wrong is helpful to users. Explaining why it is wrong is even better!

12.2.3.3 Fixing function arguments

You still need to provide some checks on the na.rm argument. This time, rather than throwing errors when the input is in an incorrect form, you are going to try to fix it.

na.rm should be a logical vector with one element.

Fix the na.rm argument by using use_first() to select the first na.rm element, and coerce_to() to change it to logical.
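For intuition, the same kind of repair can be sketched in base R alone. This is not how use_first() or coerce_to() are implemented; fix_na_rm() is a hypothetical stand-in that takes the first element, coerces it to logical, and warns about each change.

```r
# Hypothetical base R stand-in for the fix-up step
fix_na_rm <- function(na.rm) {
  if (length(na.rm) > 1) {
    # Keep only the first element, and tell the user
    warning("Only the first value of na.rm will be used.")
    na.rm <- na.rm[1]
  }
  if (!is.logical(na.rm)) {
    # Convert to logical, and tell the user
    warning("Coercing na.rm to class 'logical'.")
    na.rm <- as.logical(na.rm)
  }
  na.rm
}

# A malformed input: an integer vector with five elements
fixed <- suppressWarnings(fix_na_rm(1:5))
fixed
```

Warning instead of failing keeps the function usable with sloppy input while still making the correction visible.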

library(assert)

## Warning: package 'assert' was built under R version 4.3.1

library(assertive.base)

## Warning: package 'assertive.base' was built under R version 4.3.2

# Update the function definition to fix the na.rm argument

calc_harmonic_mean <- function(x, na.rm = FALSE) {

assert(is.numeric(x))

if(any(x <= 0, na.rm = TRUE)) {

stop("x contains non-positive values, so the harmonic mean makes no sense.")

}

# Use the first value of na.rm, and coerce to logical

na.rm <- coerce_to(use_first(na.rm), target_class = "logical")

x %>%

get_reciprocal() %>%

mean(na.rm = na.rm) %>%

get_reciprocal()

}

# See what happens when you pass it malformed na.rm

calc_harmonic_mean(std_and_poor500$pe_ratio, na.rm = 1:5)

## Warning: Only the first value of na.rm (= 1) will be used.

## Warning: Coercing use_first(na.rm) to class 'logical'.

## [1] 18.23871

12.3 Return Values and Scope

12.3.1 Returning values

Reasons for returning early

You already know the answer.

The input is an edge case.
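Both reasons can be illustrated with one hypothetical function (calc_mean_or_na() is an illustrative name): for an empty input the answer is already known, so the function returns before doing any arithmetic.

```r
# Hypothetical example of returning early on an edge case
calc_mean_or_na <- function(x) {
  # Edge case: empty input, so the answer is known without computing
  if (length(x) == 0) {
    return(NA_real_)
  }
  # Normal case: the last evaluated expression is the return value
  sum(x) / length(x)
}

empty_result <- calc_mean_or_na(numeric(0))
normal_result <- calc_mean_or_na(c(2, 4))
```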

12.3.1.1 Returning early

Sometimes, you don’t need to run through the whole body of a function to get the answer. In that case you can return early from that function using return().

Returning early can often improve the performance of your functions considerably for some input values.

You need to know that a leap year is every 400th year (like the year 2000) or every 4th year that isn’t a century (like 1904 but not 1900 or 1905).

# checking for the cases of year being divisible by 400, then 100, then 4, returning early from the function in each case.

is_leap_year <- function(year) {

# If year is div. by 400 return TRUE

if(year %% 400 == 0) {

return(TRUE)

}

# If year is div. by 100 return FALSE

if(year %% 100 == 0) {

return(FALSE)

}

# If year is div. by 4 return TRUE

if(year %% 4 == 0) {

return(TRUE)

}

# Otherwise return FALSE

else {

return(FALSE)

}

}

12.3.1.2 Returning invisibly

When the main purpose of a function is to generate output, like drawing a plot or printing something in the console, you may not want a return value to be printed as well. In that case, return the value invisibly by wrapping it in invisible().
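A minimal sketch of the difference, assuming a hypothetical function announce() whose main job is to emit a message: base R's withVisible() reports whether a returned value would be auto-printed at the console.

```r
# Hypothetical function: its main purpose is the message,
# so the value is returned invisibly
announce <- function(x) {
  message("Processing ", length(x), " values")
  invisible(x)
}

# withVisible() returns the value plus a flag saying whether
# it would have been printed
res <- withVisible(announce(1:3))
res$visible
res$value
```

The value is still there to assign or pass along; it just isn't echoed when the function is called on its own.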

The base R plot function returns NULL, since its main purpose is to draw a plot. Recall that plot() has a formula interface: plot(y ~ x, data = data)

# Using cars, draw a scatter plot of dist vs. speed

plt_dist_vs_speed <- base::plot(dist ~ speed, data = cars)

# Oh no! The plot object is NULL

plt_dist_vs_speed

This isn’t helpful if you want to use it in piped code: instead it should invisibly return the plot data to be piped on to the next step.

# Define a pipeable plot fn with data and formula args

pipeable_plot <- function(data, formula) {

# Call plot() with the formula interface

base::plot(formula, data)

# Invisibly return the input dataset

invisible(data)

}

# Draw the scatter plot of dist vs. speed again

plt_dist_vs_speed <- cars %>%

pipeable_plot(dist ~ speed)

# Now the plot object has a value

plt_dist_vs_speed

12.3.2 Return multiple values

R functions can only return a single value, but there are two ways to get around this rule:

return several objects in a list

store objects as attributes

When to use each technique:

If you need the result to have a particular type, add additional return values as attributes.

Otherwise, collect all return values into a list.
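The two techniques can be contrasted with a short sketch. Both functions below are hypothetical illustrations, not part of the original material: the first returns a list, the second keeps a numeric result and attaches the extra value as an attribute.

```r
# Technique 1: collect several results in a list (the result is a list)
range_as_list <- function(x) {
  list(lo = min(x), hi = max(x))
}

# Technique 2: keep the result numeric, attach extra information as an attribute
mean_with_count <- function(x) {
  result <- mean(x)
  attr(result, "n") <- length(x)
  result
}

r <- range_as_list(c(2, 4, 6))
r$lo
r$hi

m <- mean_with_count(c(2, 4, 6))
is.numeric(m)   # still a number, so it can be used in arithmetic
m + 1
attr(m, "n")    # the extra value travels along as an attribute
```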

12.3.2.1 Returning many things

Functions can only return one value. If you want to return multiple things, then you can store them all in a list.

Multi-assignment

If users want to have the list items as separate variables, they can assign each list element to its own variable.

The zeallot package’s multi-assignment operator, %<-%, does this in one step, e.g. c(a, b) %<-% x, where x is a list of two elements.
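As a rough sketch of unpacking a list result, the example below uses only base R so that it runs without extra packages. center_and_spread() is a hypothetical function; the zeallot form is shown only as a comment.

```r
# Hypothetical function returning two results in a named list
center_and_spread <- function(x) {
  list(center = mean(x), spread = sd(x))
}

res <- center_and_spread(c(1, 2, 3))

# Base R: assign each list element to its own variable
ctr <- res$center
spr <- res$spread

# zeallot equivalent, if the package is installed and attached:
# c(ctr, spr) %<-% center_and_spread(c(1, 2, 3))
```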

The broom package provides three functions, one per level of detail:

glance(): model-level values

tidy(): coefficient-level values

augment(): observation-level values

library(broom)

library(zeallot)

## Warning: package 'zeallot' was built under R version 4.3.2

# Look at the structure of model (it's a mess!)

str(model)

## List of 31

## $ coefficients : Named num [1:7] 4.0864 0.374 -0.0199 -0.5807 -0.5782 ...

## ..- attr(*, "names")= chr [1:7] "(Intercept)" "genderfemale" "income($25k,$55k]" "income($55k,$95k]" ...

## $ residuals : Named num [1:346] -0.535 -0.768 -0.944 -0.662 -0.767 ...

## ..- attr(*, "names")= chr [1:346] "25" "26" "27" "29" ...

## $ fitted.values : Named num [1:346] 4.3 4.3 17.83 2.96 4.29 ...

## ..- attr(*, "names")= chr [1:346] "25" "26" "27" "29" ...

## $ effects : Named num [1:346] -360 -29.2 20.3 -10 23.4 ...

## ..- attr(*, "names")= chr [1:346] "(Intercept)" "genderfemale" "income($25k,$55k]" "income($55k,$95k]" ...

## $ R : num [1:7, 1:7] -97.4 0 0 0 0 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : chr [1:7] "(Intercept)" "genderfemale" "income($25k,$55k]" "income($55k,$95k]" ...

## .. ..$ : chr [1:7] "(Intercept)" "genderfemale" "income($25k,$55k]" "income($55k,$95k]" ...

## $ rank : int 7

## $ qr :List of 5

## ..$ qr : num [1:346, 1:7] -97.3861 0.0213 0.0434 0.0177 0.0213 ...

## .. ..- attr(*, "dimnames")=List of 2

## .. .. ..$ : chr [1:346] "25" "26" "27" "29" ...

## .. .. ..$ : chr [1:7] "(Intercept)" "genderfemale" "income($25k,$55k]" "income($55k,$95k]" ...

## ..$ rank : int 7

## ..$ qraux: num [1:7] 1.02 1.02 1.04 1.01 1 ...

## ..$ pivot: int [1:7] 1 2 3 4 5 6 7

## ..$ tol : num 1e-11

## ..- attr(*, "class")= chr "qr"

## $ family :List of 13

## ..$ family : chr "poisson"

## ..$ link : chr "log"

## ..$ linkfun :function (mu)

## ..$ linkinv :function (eta)

## ..$ variance :function (mu)

## ..$ dev.resids:function (y, mu, wt)

## ..$ aic :function (y, n, mu, wt, dev)

## ..$ mu.eta :function (eta)

## ..$ initialize: expression({ if (any(y < 0)) stop("negative values not allowed for the 'Poisson' family") n <- rep.int(1, nobs| __truncated__

## ..$ validmu :function (mu)

## ..$ valideta :function (eta)

## ..$ simulate :function (object, nsim)

## ..$ dispersion: num 1

## ..- attr(*, "class")= chr "family"

## $ linear.predictors: Named num [1:346] 1.46 1.46 2.88 1.09 1.46 ...

## ..- attr(*, "names")= chr [1:346] "25" "26" "27" "29" ...

## $ deviance : num 11529

## $ aic : num 12864

## $ null.deviance : num 18850

## $ iter : int 6

## $ weights : Named num [1:346] 4.3 4.3 17.83 2.96 4.29 ...

## ..- attr(*, "names")= chr [1:346] "25" "26" "27" "29" ...

## $ prior.weights : Named num [1:346] 1 1 1 1 1 1 1 1 1 1 ...

## ..- attr(*, "names")= chr [1:346] "25" "26" "27" "29" ...

## $ df.residual : int 339

## $ df.null : int 345

## $ y : Named num [1:346] 2 1 1 1 1 1 80 104 55 350 ...

## ..- attr(*, "names")= chr [1:346] "25" "26" "27" "29" ...

## $ converged : logi TRUE

## $ boundary : logi FALSE

## $ model :'data.frame': 346 obs. of 4 variables:

## ..$ n_visits: num [1:346] 2 1 1 1 1 1 80 104 55 350 ...

## ..$ gender : Factor w/ 2 levels "male","female": 2 2 1 1 2 1 2 2 1 2 ...

## ..$ income : Factor w/ 4 levels "[$0,$25k]","($25k,$55k]",..: 4 4 4 4 3 1 1 4 2 2 ...

## ..$ travel : Factor w/ 3 levels "[0h,0.25h]","(0.25h,4h]",..: 3 3 2 3 3 1 1 1 2 1 ...

## ..- attr(*, "terms")=Classes 'terms', 'formula' language n_visits ~ gender + income + travel

## .. .. ..- attr(*, "variables")= language list(n_visits, gender, income, travel)

## .. .. ..- attr(*, "factors")= int [1:4, 1:3] 0 1 0 0 0 0 1 0 0 0 ...

## .. .. .. ..- attr(*, "dimnames")=List of 2

## .. .. .. .. ..$ : chr [1:4] "n_visits" "gender" "income" "travel"

## .. .. .. .. ..$ : chr [1:3] "gender" "income" "travel"

## .. .. ..- attr(*, "term.labels")= chr [1:3] "gender" "income" "travel"

## .. .. ..- attr(*, "order")= int [1:3] 1 1 1

## .. .. ..- attr(*, "intercept")= int 1

## .. .. ..- attr(*, "response")= int 1

## .. .. ..- attr(*, ".Environment")=<environment: 0x0000029a03797748>

## .. .. ..- attr(*, "predvars")= language list(n_visits, gender, income, travel)

## .. .. ..- attr(*, "dataClasses")= Named chr [1:4] "numeric" "factor" "factor" "factor"

## .. .. .. ..- attr(*, "names")= chr [1:4] "n_visits" "gender" "income" "travel"

## ..- attr(*, "na.action")= 'omit' Named int [1:64] 1 2 3 4 5 6 7 8 9 10 ...

## .. ..- attr(*, "names")= chr [1:64] "1" "2" "3" "4" ...

## $ na.action : 'omit' Named int [1:64] 1 2 3 4 5 6 7 8 9 10 ...

## ..- attr(*, "names")= chr [1:64] "1" "2" "3" "4" ...

## $ call : language glm(formula = formula, family = poisson, data = data)

## $ formula :Class 'formula' language n_visits ~ gender + income + travel

## .. ..- attr(*, ".Environment")=<environment: 0x0000029a03797748>

## $ terms :Classes 'terms', 'formula' language n_visits ~ gender + income + travel

## .. ..- attr(*, "variables")= language list(n_visits, gender, income, travel)

## .. ..- attr(*, "factors")= int [1:4, 1:3] 0 1 0 0 0 0 1 0 0 0 ...

## .. .. ..- attr(*, "dimnames")=List of 2

## .. .. .. ..$ : chr [1:4] "n_visits" "gender" "income" "travel"

## .. .. .. ..$ : chr [1:3] "gender" "income" "travel"

## .. ..- attr(*, "term.labels")= chr [1:3] "gender" "income" "travel"

## .. ..- attr(*, "order")= int [1:3] 1 1 1

## .. ..- attr(*, "intercept")= int 1

## .. ..- attr(*, "response")= int 1

## .. ..- attr(*, ".Environment")=<environment: 0x0000029a03797748>

## .. ..- attr(*, "predvars")= language list(n_visits, gender, income, travel)

## .. ..- attr(*, "dataClasses")= Named chr [1:4] "numeric" "factor" "factor" "factor"

## .. .. ..- attr(*, "names")= chr [1:4] "n_visits" "gender" "income" "travel"

## $ data :'data.frame': 410 obs. of 4 variables:

## ..$ n_visits: num [1:410] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ gender : Factor w/ 2 levels "male","female": 1 1 1 2 1 2 2 2 1 1 ...

## ..$ income : Factor w/ 4 levels "[$0,$25k]","($25k,$55k]",..: 4 2 4 2 4 2 4 4 4 4 ...

## ..$ travel : Factor w/ 3 levels "[0h,0.25h]","(0.25h,4h]",..: NA NA NA NA NA NA NA NA NA NA ...

## $ offset : NULL

## $ control :List of 3

## ..$ epsilon: num 1e-08

## ..$ maxit : num 25

## ..$ trace : logi FALSE

## $ method : chr "glm.fit"

## $ contrasts :List of 3

## ..$ gender: chr "contr.treatment"

## ..$ income: chr "contr.treatment"

## ..$ travel: chr "contr.treatment"

## $ xlevels :List of 3

## ..$ gender: chr [1:2] "male" "female"

## ..$ income: chr [1:4] "[$0,$25k]" "($25k,$55k]" "($55k,$95k]" "($95k,$Inf)"

## ..$ travel: chr [1:3] "[0h,0.25h]" "(0.25h,4h]" "(4h,Infh)"

## - attr(*, "class")= chr [1:2] "glm" "lm"

# Use broom tools to get a list of 3 data frames

list(

# Get model-level values

model = glance(model),

# Get coefficient-level values

coefficients = tidy(model),

# Get observation-level values

observations = augment(model)

)

## $model

## # A tibble: 1 × 8

## null.deviance df.null logLik AIC BIC deviance df.residual nobs

## <dbl> <int> <dbl> <dbl> <dbl> <dbl> <int> <int>

## 1 18850. 345 -6425. 12864. 12891. 11529. 339 346

##

## $coefficients

## # A tibble: 7 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 4.09 0.0279 146. 0

## 2 genderfemale 0.374 0.0212 17.6 2.18e- 69

## 3 income($25k,$55k] -0.0199 0.0267 -0.746 4.56e- 1

## 4 income($55k,$95k] -0.581 0.0343 -16.9 3.28e- 64

## 5 income($95k,$Inf) -0.578 0.0310 -18.7 6.88e- 78

## 6 travel(0.25h,4h] -0.627 0.0217 -28.8 5.40e-183

## 7 travel(4h,Infh) -2.42 0.0492 -49.3 0

##

## $observations

## # A tibble: 346 × 11

## .rownames n_visits gender income travel .fitted .resid .hat .sigma

## <chr> <dbl> <fct> <fct> <fct> <dbl> <dbl> <dbl> <dbl>

## 1 25 2 female ($95k,$Inf) (4h,Inf… 1.46 -1.24 0.0109 5.84

## 2 26 1 female ($95k,$Inf) (4h,Inf… 1.46 -1.92 0.0109 5.84

## 3 27 1 male ($95k,$Inf) (0.25h,… 2.88 -5.28 0.0129 5.83

## 4 29 1 male ($95k,$Inf) (4h,Inf… 1.09 -1.32 0.00711 5.84

## 5 30 1 female ($55k,$95k] (4h,Inf… 1.46 -1.92 0.0121 5.84

## 6 31 1 male [$0,$25k] [0h,0.2… 4.09 -10.4 0.0465 5.81

## 7 33 80 female [$0,$25k] [0h,0.2… 4.46 -0.710 0.0479 5.84

## 8 34 104 female ($95k,$Inf) [0h,0.2… 3.88 6.90 0.0332 5.83

## 9 35 55 male ($25k,$55k] (0.25h,… 3.44 3.85 0.0153 5.84

## 10 36 350 female ($25k,$55k] [0h,0.2… 4.44 21.5 0.0360 5.72

## # ℹ 336 more rows

## # ℹ 2 more variables: .cooksd <dbl>, .std.resid <dbl>

# Wrap this code into a function, groom_model

groom_model <- function(model) {

list(

model = glance(model),

coefficients = tidy(model),

observations = augment(model)

)

}

# Call groom_model on model, assigning to 3 variables

c(mdl, cff, obs) %<-% groom_model(model)

# See these individual variables

mdl; cff; obs

## # A tibble: 1 × 8

## null.deviance df.null logLik AIC BIC deviance df.residual nobs

## <dbl> <int> <dbl> <dbl> <dbl> <dbl> <int> <int>

## 1 18850. 345 -6425. 12864. 12891. 11529. 339 346

## # A tibble: 7 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 4.09 0.0279 146. 0

## 2 genderfemale 0.374 0.0212 17.6 2.18e- 69

## 3 income($25k,$55k] -0.0199 0.0267 -0.746 4.56e- 1

## 4 income($55k,$95k] -0.581 0.0343 -16.9 3.28e- 64

## 5 income($95k,$Inf) -0.578 0.0310 -18.7 6.88e- 78

## 6 travel(0.25h,4h] -0.627 0.0217 -28.8 5.40e-183

## 7 travel(4h,Infh) -2.42 0.0492 -49.3 0

## # A tibble: 346 × 11

## .rownames n_visits gender income travel .fitted .resid .hat .sigma

## <chr> <dbl> <fct> <fct> <fct> <dbl> <dbl> <dbl> <dbl>

## 1 25 2 female ($95k,$Inf) (4h,Inf… 1.46 -1.24 0.0109 5.84

## 2 26 1 female ($95k,$Inf) (4h,Inf… 1.46 -1.92 0.0109 5.84

## 3 27 1 male ($95k,$Inf) (0.25h,… 2.88 -5.28 0.0129 5.83

## 4 29 1 male ($95k,$Inf) (4h,Inf… 1.09 -1.32 0.00711 5.84

## 5 30 1 female ($55k,$95k] (4h,Inf… 1.46 -1.92 0.0121 5.84

## 6 31 1 male [$0,$25k] [0h,0.2… 4.09 -10.4 0.0465 5.81

## 7 33 80 female [$0,$25k] [0h,0.2… 4.46 -0.710 0.0479 5.84

## 8 34 104 female ($95k,$Inf) [0h,0.2… 3.88 6.90 0.0332 5.83

## 9 35 55 male ($25k,$55k] (0.25h,… 3.44 3.85 0.0153 5.84

## 10 36 350 female ($25k,$55k] [0h,0.2… 4.44 21.5 0.0360 5.72

## # ℹ 336 more rows

## # ℹ 2 more variables: .cooksd <dbl>, .std.resid <dbl>

12.3.2.2 Returning metadata

Sometimes you want to return multiple things from a function, but you want the result to have a particular class (for example, a data frame or a numeric vector), so returning a list isn’t appropriate.

This is common when you have a result plus metadata about the result. (Metadata is “data about the data”; for example, it could be the file a dataset was loaded from.)

In that case, you can store the metadata in attributes.

attr(object, "attribute_name") <- attribute_value

pipeable_plot <- function(data, formula) {

plot(formula, data)

# Add a "formula" attribute to data

attr(data, "formula") <- formula

invisible(data)

}

# From previous exercise

plt_dist_vs_speed <- cars %>%

pipeable_plot(dist ~ speed)

# Examine the structure of the result

str(plt_dist_vs_speed)

12.3.3 Environments

Environments are a type of variable that is used to store other variables. Mostly, you can think of them as lists.

Every environment has a parent environment (except the empty environment, at the root of the environment tree). This determines which variables R knows about at different places in your code.
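To make the parent chain concrete, here is a minimal sketch. ancestor_names() is a hypothetical helper written for this illustration; it follows parent.env() from an environment up to the empty environment at the root.

```r
# An environment stores variables by name, much like a list
env <- new.env(parent = globalenv())
env$x <- 1
env[["y"]] <- "a"
sort(ls(env))

# Hypothetical helper: collect the names of env's ancestors up to the root
ancestor_names <- function(env) {
  out <- character(0)
  while (!identical(env, emptyenv())) {
    env <- parent.env(env)
    out <- c(out, environmentName(env))
  }
  out
}

chain <- ancestor_names(env)
chain[1]               # the parent given to new.env()
chain[length(chain)]   # the empty environment at the root
```

The entries between the first and last depend on which packages are attached in your session.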

12.3.3.1 Create & explore environments

ls.str(list): list the elements, giving a compact summary of multiple variables at once.

list2env(list): convert the list to an environment.

environmentName(parent.env(env)): find the parent of an environment, and display its name.

Facts about the Republic of South Africa are contained in capitals, national_parks, and population.

# create capitals

city <- c("Cape Town", "Bloemfontein", "Pretoria")

type_of_capital <- c("Legislative", "Judicial", "Administrative")

capitals <- data.frame(city, type_of_capital)

# create national_parks

national_parks <- c(

"Addo Elephant National Park",

"Agulhas National Park",

"Ai-Ais/Richtersveld Transfrontier Park",

"Augrabies Falls National Park",

"Bontebok National Park",

"Camdeboo National Park",

"Golden Gate Highlands National Park",

"Hluhluwe–Imfolozi Park",

"Karoo National Park",

"Kgalagadi Transfrontier Park",

"Knysna National Lake Area",

"Kruger National Park",

"Mapungubwe National Park",

"Marakele National Park",

"Mokala National Park",

"Mountain Zebra National Park",

"Namaqua National Park",

"Table Mountain National Park",

"Tankwa Karoo National Park",

"Tsitsikamma National Park",

"West Coast National Park",

"Wilderness National Park")

# create population

population <- ts(c(40583573, 44819778, 47390900, 51770560, 55908900), start = 1996, end = 2016, frequency = 0.2); population

## Time Series:

## Start = 1996

## End = 2016

## Frequency = 0.2

## [1] 40583573 44819778 47390900 51770560 55908900

# Add capitals, national_parks, & population to a named list

rsa_lst <- list(

capitals = capitals,

national_parks = national_parks,

population = population

)

# List the structure of each element of rsa_lst

ls.str(rsa_lst)

## capitals : 'data.frame': 3 obs. of 2 variables:

## $ city : chr "Cape Town" "Bloemfontein" "Pretoria"

## $ type_of_capital: chr "Legislative" "Judicial" "Administrative"

## national_parks : chr [1:22] "Addo Elephant National Park" "Agulhas National Park" ...

## population : Time-Series [1:5] from 1996 to 2016: 40583573 44819778 47390900 51770560 55908900

# Convert the list to an environment

rsa_env <- list2env(rsa_lst)

# List the structure of each variable

ls.str(rsa_env)

## capitals : 'data.frame': 3 obs. of 2 variables:

## $ city : chr "Cape Town" "Bloemfontein" "Pretoria"

## $ type_of_capital: chr "Legislative" "Judicial" "Administrative"

## national_parks : chr [1:22] "Addo Elephant National Park" "Agulhas National Park" ...

## population : Time-Series [1:5] from 1996 to 2016: 40583573 44819778 47390900 51770560 55908900

# Find the parent environment of rsa_env

parent <- parent.env(rsa_env)

# Print its name

environmentName(parent)

## [1] "R_GlobalEnv"

12.3.3.2 Do variables exist?

If R cannot find a variable in the current environment, it will look in the parent environment, then the grandparent environment, and so on until it finds it.

exists("variable", envir = env)

You can force R to only look in the environment you asked for by setting inherits to FALSE.

exists("variable", envir = env, inherits = FALSE)
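The effect of inherits can be seen in a small self-contained sketch. The two environments and the variable names x and y are made up for illustration.

```r
# Two nested environments: the parent of child_env is parent_env
parent_env <- new.env(parent = emptyenv())
child_env <- new.env(parent = parent_env)

assign("x", 1, envir = parent_env)  # defined only in the parent
assign("y", 2, envir = child_env)   # defined only in the child

exists("y", envir = child_env)                    # found directly in child_env
exists("x", envir = child_env)                    # found by looking in the parent
exists("x", envir = child_env, inherits = FALSE)  # the parent is not searched
```

The first two calls return TRUE and the last returns FALSE, because x lives only in the parent.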

# Compare the contents of the global environment and rsa_env

ls.str(globalenv())

## a : num 5

## account_offices : spc_tbl_ [98 × 2] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## accounts : tibble [97 × 5] (S3: tbl_df/tbl/data.frame)

## accounts_clean : tibble [88 × 6] (S3: tbl_df/tbl/data.frame)

## airquality : spc_tbl_ [153 × 7] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## akl_daily : tibble [3,661 × 10] (S3: tbl_df/tbl/data.frame)

## akl_daily_raw : spc_tbl_ [3,661 × 7] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## akl_day : tibble [10,905 × 15] (S3: tbl_df/tbl/data.frame)

## akl_hourly : tibble [17,454 × 13] (S3: tbl_df/tbl/data.frame)

## akl_hourly_raw : spc_tbl_ [17,454 × 10] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## all_deals : 'data.frame': 10548 obs. of 2 variables:

## $ product : int 3544 5073 6149 7863 14 10247 3544 5073 6149 7863 ...

## $ num_users: int 19 43 87 83 17 2 29 13 80 23 ...

## all_wars_matrix : num [1:6, 1:2] 461 290 309 474 311 ...

## amir_deals : 'data.frame': 178 obs. of 5 variables:

## $ product : Factor w/ 14 levels "Product A","Product B",..: 6 3 2 9 5 2 3 13 6 2 ...

## $ client : Factor w/ 3 levels "Current","New",..: 1 2 2 1 1 2 1 1 1 1 ...

## $ status : Factor w/ 2 levels "Lost","Won": 2 2 2 2 2 2 2 2 2 2 ...

## $ amount : num 7390 4493 5738 2591 6623 ...

## $ num_users: num 19 43 87 83 17 2 29 13 80 23 ...

## animals_vector : chr [1:4] "Elephant" "Giraffe" "Donkey" "Horse"

## answer_counts : spc_tbl_ [227,452 × 2] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## answers : spc_tbl_ [451,118 × 4] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## answers_with_tags : spc_tbl_ [800,378 × 6] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## april_10_2014 : Date[1:1], format: "2014-04-10"

## astro : Named chr [1:4] "20-Mar-2015" "25-Jun-2015" "23-Sep-2015" "22-Dec-2015"

## astro_dates : Date[1:4], format: "2015-03-20" "2015-06-25" "2015-09-23" "2015-12-22"

## avg_review : num 4.72

## avg_sum : num 22.3

## avg_sum_trimmed : num 22.6

## awards : chr [1:6] "Won 1 Oscar." "Won 1 Oscar. Another 9 wins & 24 nominations." ...

## babynames_fraction : tibble [1,924,665 × 7] (S3: tbl_df/tbl/data.frame)

## babynames_ratios_filtered : gropd_df [541,903 × 8] (S3: grouped_df/tbl_df/tbl/data.frame)

## basics : function (x)

## batman : tibble [10,111 × 13] (S3: tbl_df/tbl/data.frame)

## batman_colors : tibble [56 × 3] (S3: tbl_df/tbl/data.frame)

## batman_parts : tibble [3,455 × 3] (S3: tbl_df/tbl/data.frame)

## batmobile : tibble [173 × 5] (S3: tbl_df/tbl/data.frame)

## batwing : tibble [323 × 5] (S3: tbl_df/tbl/data.frame)

## below_zero : function (x)

## bike_share_rides : spc_tbl_ [35,231 × 6] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## bike_share_rides_past : tibble [34,025 × 10] (S3: tbl_df/tbl/data.frame)

## bike_share_rides_unique : tibble [35,229 × 6] (S3: tbl_df/tbl/data.frame)

## blue_range : function (n)

## blues : chr [1:9] "#F7FBFF" "#DEEBF7" "#C6DBEF" "#9ECAE1" "#6BAED6" "#4292C6" ...

## box_office : num [1:6] 461 314 290 248 309 ...

## breaks : num [1:4] 2.27 0 1440 1668

## by_continent : tibble [5 × 2] (S3: tbl_df/tbl/data.frame)

## by_continent_2007 : tibble [5 × 3] (S3: tbl_df/tbl/data.frame)

## by_type_year_tag : tibble [57,331 × 4] (S3: tbl_df/tbl/data.frame)

## by_type_year_tag_filtered : tibble [38 × 4] (S3: tbl_df/tbl/data.frame)

## by_year : tibble [12 × 2] (S3: tbl_df/tbl/data.frame)

## by_year_continent : gropd_df [60 × 3] (S3: grouped_df/tbl_df/tbl/data.frame)

## c : int 3

## calc_harmonic_mean : function (x, na.rm = FALSE)

## capitals : 'data.frame': 3 obs. of 2 variables:

## $ city : chr "Cape Town" "Bloemfontein" "Pretoria"

## $ type_of_capital: chr "Legislative" "Judicial" "Administrative"

## cff : tibble [7 × 5] (S3: tbl_df/tbl/data.frame)

## char : chr "u"

## chars : chr [1:40] "r" "'" "s" " " "i" "n" "t" "e" "r" "n" "a" "l" "s" " " "a" "r" ...

## cities : 'data.frame': 5 obs. of 1 variable:

## $ city_actual: chr "new york" "los angeles" "atlanta" "san francisco" ...

## city : chr [1:3] "Cape Town" "Bloemfontein" "Pretoria"

## coin_sides : chr [1:2] "head" "tail"

## color_palette : Named chr [1:42] "#05131D" "#0055BF" "#237841" "#C91A09" "#F2CD37" ...

## colors : spc_tbl_ [262 × 4] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## colors_joined : tibble [42 × 10] (S3: tbl_df/tbl/data.frame)

## cols : chr [1:8] "country" "year_1960" "year_1961" "year_1962" "year_1963" ...

## comments : chr [1:5] "I would watch it again" "Amazing!" "I liked it" ...

## counties : spc_tbl_ [3,138 × 40] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## counties_selected : tibble [3,138 × 5] (S3: tbl_df/tbl/data.frame)

## countries : 'data.frame': 209 obs. of 1 variable:

## $ country: chr "Afghanistan" "Albania" "Algeria" "American Samoa" ...

## cut_by_quantile : function (x, n = 5, na.rm = FALSE, labels = NULL, interval_type = c("(lo, hi]",

## "[lo, hi)"))

## da2 : Ord.factor w/ 3 levels "slow"<"medium"<..: 1

## da5 : Ord.factor w/ 3 levels "slow"<"medium"<..: 3

## datacamp_light_blue : chr "#51A8C9"

## date_difference : Formal class 'Interval' [package "lubridate"] with 3 slots

## date_landing : Date[1:1], format: "1969-07-20"

## date_stamp : function (x, locale = "C")

## date1 : Date[1:1], format: "1996-05-23"

## date2 : Date[1:1], format: "2012-03-15"

## date3 : Date[1:1], format: "2006-01-30"

## dates : chr [1:17454] "2016-01-01T00:00:00Z" "2016-01-01T00:30:00Z" ...

## day_diff : 'difftime' num [1:4] 2 5 6 5

## day1 : Date[1:1], format: "2023-09-15"

## day2 : Date[1:1], format: "2023-09-17"

## day3 : Date[1:1], format: "2023-09-22"

## day4 : Date[1:1], format: "2023-09-28"

## day5 : Date[1:1], format: "2023-10-03"

## days_vector : chr [1:5] "Monday" "Tuesday" "Wednesday" "Thursday" "Friday"

## deals : int [1:52] 1 1 0 0 0 0 1 1 1 1 ...

## departed_list : List of 4

## $ title : chr "The Departed"

## $ actors : chr [1:6] "Leonardo DiCaprio" "Matt Damon" "Jack Nicholson" "Mark Wahlberg" ...

## $ reviews:'data.frame': 5 obs. of 2 variables:

## $ average: num 4.72

## dest_size : chr [1:4] "Small" "Medium" "Large" "Hub"

## dest_sizes : 'data.frame': 4 obs. of 2 variables:

## $ dest_size : chr "Small" "Medium" "Large" "Hub"

## $ passengers_per_day: chr "0-20K" "20K-70K" "70K-100K" "100K+"

## diameter : num [1:8] 0.382 0.949 1 0.532 11.209 ...

## dims : int [1:2, 1:3] 209 8 209 9 209 38

## eclipse_2017 : POSIXct[1:1], format: "2017-08-21 18:26:40"

## emails : chr [1:6] "john.doe@ivyleague.edu" "education@world.gov" ...

## emissions_by_country : tibble [130 × 2] (S3: tbl_df/tbl/data.frame)

## empire_strikes : num [1:2] 290 248

## errors : num [1:6] 1.9 -2.6 4 -9.5 -3.4 7.3

## europe_categories : chr [1:3] "EU" "eur" "Europ"

## every_two_weeks : Formal class 'Period' [package "lubridate"] with 6 slots

## expected_val : num 2.9

## extremes : function (x)

## extremes_avg : function (x)

## fac : List of 3

## $ levels : chr [1:3] "Beef" "Meat" "Poultry"

## $ ordered : logi FALSE

## $ include_na: logi FALSE

## facebook : List of 7

## $ : num 17

## $ : num 7

## $ : num 5

## $ : num 16

## $ : num 8

## $ : num 13

## $ : num 14

## factor_animals_vector : Factor w/ 4 levels "Donkey","Elephant",..: 2 3 1 4

## factor_sex_vector : Factor w/ 2 levels "Female","Male": 2 1 1 2 2

## factor_speed_vector : Ord.factor w/ 3 levels "slow"<"medium"<..: 2 1 1 2 3

## factor_survey_vector : Factor w/ 2 levels "Female","Male": 2 1 1 2 2

## factor_temperature_vector : Ord.factor w/ 3 levels "Low"<"Medium"<..: 3 1 3 1 2

## fb : num 9

## fb_vec : num [1:7] 17 7 5 16 8 13 14

## finished : chr "I finished 'Dates and Times in R' on Thursday, September 4, 2017!"

## fish : chr [1:3] "fish.species" "fish.year" "fish.tidy"

## fish.species : 'data.frame': 61 obs. of 8 variables:

## $ Year : int 1950 1951 1952 1953 1954 1955 1956 1957 1958 1959 ...

## $ Pink : int 100600 259000 132600 235900 123400 244400 203400 270119 200798 200085 ...

## $ Chum : int 139300 155900 113800 99800 148700 143700 158480 125377 132407 113114 ...

## $ Sockeye : int 64100 51200 58200 66100 83800 72000 84800 69676 100520 62472 ...

## $ Coho : int 30500 40900 33600 32400 38300 45100 40000 39900 39200 32865 ...

## $ Rainbow : int 0 100 100 100 100 100 100 100 100 100 ...

## $ Chinook : int 23200 25500 24900 25300 24500 27700 25300 21200 20900 20335 ...

## $ Atlantic: int 10800 9701 9800 8800 9600 7800 8100 9000 8801 8700 ...

## fish.tidy : 'data.frame': 427 obs. of 3 variables:

## $ Species: Factor w/ 7 levels "Pink","Chum",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ Year : int 1950 1951 1952 1953 1954 1955 1956 1957 1958 1959 ...

## $ Capture: int 100600 259000 132600 235900 123400 244400 203400 270119 200798 200085 ...

## fish.year : 'data.frame': 7 obs. of 62 variables:

## $ Species: Factor w/ 7 levels "Pink","Chum",..: 1 2 3 4 5 6 7

## $ 1950 : int 100600 139300 64100 30500 0 23200 10800

## $ 1951 : int 259000 155900 51200 40900 100 25500 9701

## $ 1952 : int 132600 113800 58200 33600 100 24900 9800

## $ 1953 : int 235900 99800 66100 32400 100 25300 8800

## $ 1954 : int 123400 148700 83800 38300 100 24500 9600

## $ 1955 : int 244400 143700 72000 45100 100 27700 7800

## $ 1956 : int 203400 158480 84800 40000 100 25300 8100

## $ 1957 : int 270119 125377 69676 39900 100 21200 9000

## $ 1958 : int 200798 132407 100520 39200 100 20900 8801

## $ 1959 : int 200085 113114 62472 32865 100 20335 8700

## $ 1960 : int 111880 120760 83171 26898 100 17412 8900

## $ 1961 : int 178133 109053 93311 37524 200 18130 9400

## $ 1962 : int 163558 110707 66126 39883 300 17489 11500

## $ 1963 : int 222477 106491 53140 41547 1600 18928 10766

## $ 1964 : int 143623 94498 56189 38948 2300 20932 14342

## $ 1965 : int 161131 76503 94286 44207 1500 21197 12363

## $ 1966 : int 174844 93949 81080 46874 900 21549 12337

## $ 1967 : int 168960 88027 75582 43396 1700 21375 14411

## $ 1968 : int 155729 95624 113332 46463 11200 20720 12823

## $ 1969 : int 195169 67888 65106 30973 3000 21915 13316

## $ 1970 : int 133466 113650 106436 44050 4400 24000 13457

## $ 1971 : int 178823 105879 79160 43110 4600 25800 12397

## $ 1972 : int 93852 137655 43932 33880 4900 24000 12665

## $ 1973 : int 151180 125364 55637 38550 6900 27400 15387

## $ 1974 : int 94026 121052 53027 42631 8099 24148 15158

## $ 1975 : int 171012 127561 38615 31782 10302 24137 14736

## $ 1976 : int 146895 125110 60357 36965 9492 24984 11372

## $ 1977 : int 224475 118677 64651 30777 6166 26351 11491

## $ 1978 : int 174314 129513 75737 30712 7219 25417 8948

## $ 1979 : int 249558 150176 109439 34279 7036 25452 10259

## $ 1980 : int 226191 166803 111830 30557 9027 23023 12965

## $ 1981 : int 264855 186550 132651 28702 8819 22789 12425

## $ 1982 : int 170373 182561 128176 42281 6839 25147 10326

## $ 1983 : int 255129 196414 163790 30291 7473 18926 10862

## $ 1984 : int 210511 210507 126834 40834 7977 18449 10562

## $ 1985 : int 300987 267794 150860 38823 9373 20318 12422

## $ 1986 : int 211685 239065 136452 42825 9709 21187 11739

## $ 1987 : int 218121 217129 131135 28173 12196 25475 11413

## $ 1988 : int 165236 286572 107361 32245 11872 31338 10093

## $ 1989 : int 363456 243728 169410 31614 12249 20725 15795

## $ 1990 : int 235190 299438 198168 34635 12529 18249 10860

## $ 1991 : int 438998 266940 161218 38078 12545 15373 9793

## $ 1992 : int 216110 238427 199709 38433 9731 15093 9308

## $ 1993 : int 302568 286996 242613 25451 19091 15036 8161

## $ 1994 : int 326065 328765 183686 45602 12624 13623 7231

## $ 1995 : int 394735 424595 189612 28922 13450 13801 6819

## $ 1996 : int 294915 411395 188584 28201 12407 10509 6493

## $ 1997 : int 318717 347560 132075 13191 13147 13000 5579

## $ 1998 : int 371552 311965 78972 19386 18940 9840 4744

## $ 1999 : int 386928 281260 130128 15449 15874 8735 4107

## $ 2000 : int 285338 276355 124782 18035 14918 8437 4710

## $ 2001 : int 360973 307662 108618 20006 14927 8771 4772

## $ 2002 : int 268544 314098 103325 20757 12241 13911 4139

## $ 2003 : int 377749 360429 109822 16995 17128 15046 3648

## $ 2004 : int 266554 351188 142385 24546 16601 15899 4082

## $ 2005 : int 456350 318389 147151 18791 16886 13571 3727

## $ 2006 : int 316205 361561 151523 18275 17079 10497 3087

## $ 2007 : int 506343 331266 164609 17228 14844 8900 3014

## $ 2008 : int 294876 295819 138896 21280 16819 6443 3002

## $ 2009 : int 591654 359908 150040 19725 17884 6303 2373

## $ 2010 : int 370044 143959 170972 20770 20754 7968 2363

## fodors : 'data.frame': 533 obs. of 7 variables:

## $ id : int 0 1 2 3 4 5 6 7 8 9 ...

## $ name : chr "arnie morton's of chicago" "art's delicatessen" "hotel bel-air" "cafe bizou" ...

## $ addr : chr "435 s. la cienega blv ." "12224 ventura blvd." "701 stone canyon rd." "14016 ventura blvd." ...

## $ city : Factor w/ 5 levels "atlanta","los angeles",..: 2 2 2 2 2 2 2 2 2 2 ...

## $ phone: chr "310-246-1501" "818-762-1221" "310-472-1211" "818-788-3536" ...

## $ type : chr "american" "american" "californian" "french" ...

## $ class: int 0 1 2 3 4 5 6 7 8 9 ...

## food_consumption : spc_tbl_ [1,430 × 4] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## formats : chr [1:2] "%Y-%m-%d" "%B %d, %Y"

## freezing_l : List of 7

## $ : num -1

## $ : num(0)

## $ : num [1:2] -1 -3

## $ : num -2

## $ : num(0)

## $ : num -3

## $ : num(0)

## freezing_s : List of 7

## $ : num -1

## $ : num(0)

## $ : num [1:2] -1 -3

## $ : num -2

## $ : num(0)

## $ : num -3

## $ : num(0)

## game2 : POSIXct[1:1], format: "2015-06-11 19:00:00"

## game2_local : POSIXct[1:1], format: "2015-06-11 19:00:00"

## game3 : POSIXct[1:1], format: "2015-06-15 18:30:00"

## game3_local : POSIXct[1:1], format: "2015-06-15 18:30:00"

## gapminder_1952 : tibble [142 × 6] (S3: tbl_df/tbl/data.frame)

## get_reciprocal : function (x)

## global_mean : num 67

## gm2007 : spc_tbl_ [20 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## gm2007_full : spc_tbl_ [142 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## groom_model : function (model)

## group_id : chr [1:10] "A" "B" "C" "D" "E" "F" "G" "H" "I" "J"

## group_size : num [1:10] 2 4 6 2 2 2 3 2 4 2

## growth_by_dose : List of 9

## $ data : spc_tbl_ [60 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## $ layers :List of 2

## $ scales :Classes 'ScalesList', 'ggproto', 'gg' <ggproto object: Class ScalesList, gg>

## add: function

## clone: function

## find: function

## get_scales: function

## has_scale: function

## input: function

## n: function

## non_position_scales: function

## scales: list

## super: <ggproto object: Class ScalesList, gg>

## $ mapping :List of 3

## $ theme :List of 97

## $ coordinates:Classes 'CoordCartesian', 'Coord', 'ggproto', 'gg' <ggproto object: Class CoordCartesian, Coord, gg>

## aspect: function

## backtransform_range: function

## clip: on

## default: TRUE

## distance: function

## expand: TRUE

## is_free: function

## is_linear: function

## labels: function

## limits: list

## modify_scales: function

## range: function

## render_axis_h: function

## render_axis_v: function

## render_bg: function

## render_fg: function

## setup_data: function

## setup_layout: function

## setup_panel_guides: function

## setup_panel_params: function

## setup_params: function

## train_panel_guides: function

## transform: function

## super: <ggproto object: Class CoordCartesian, Coord, gg>

## $ facet :Classes 'FacetNull', 'Facet', 'ggproto', 'gg' <ggproto object: Class FacetNull, Facet, gg>

## compute_layout: function

## draw_back: function

## draw_front: function

## draw_labels: function

## draw_panels: function

## finish_data: function

## init_scales: function

## map_data: function

## params: list

## setup_data: function

## setup_params: function

## shrink: TRUE

## train_scales: function

## vars: function

## super: <ggproto object: Class FacetNull, Facet, gg>

## $ plot_env :<environment: R_GlobalEnv>

## $ labels :List of 3

## halleys : tibble [27 × 7] (S3: tbl_df/tbl/data.frame)

## halleys_1066 : tibble [1 × 7] (S3: tbl_df/tbl/data.frame)

## hello : function ()

## hits : int [1:2] 1 5

## hits_w : int [1:4] 1 2 4 5

## hotdogs : 'data.frame': 54 obs. of 3 variables:

## $ type : chr "Beef" "Beef" "Beef" "Beef" ...

## $ calories: int 186 181 176 149 184 190 158 139 175 148 ...

## $ sodium : int 495 477 425 322 482 587 370 322 479 375 ...

## hotdogs_factor : spc_tbl_ [54 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## hotdogs2 : 'data.frame': 54 obs. of 2 variables:

## $ type : Factor w/ 3 levels "Beef","Meat",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ sodium: num 495 477 425 322 482 587 370 322 479 375 ...

## i : int 6

## index2 : int 7

## int : list()

## interpret : function (num_views)

## interpret_all : function (views, return_sum = T)

## inventories : spc_tbl_ [37,233 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## inventory_parts : spc_tbl_ [1,179,869 × 6] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## inventory_parts_joined : tibble [1,179,869 × 6] (S3: tbl_df/tbl/data.frame)

## inventory_parts_themes : tibble [1,113,729 × 13] (S3: tbl_df/tbl/data.frame)

## inventory_sets_themes : tibble [1,113,729 × 13] (S3: tbl_df/tbl/data.frame)

## inventory_version_1 : spc_tbl_ [35,612 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## iqr : Named num 664

## is_leap_year : function (year)

## jan_31 : Date[1:1], format: "2023-01-31"

## last : num 14

## last_release : spc_tbl_ [1 × 7] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## last_release_date : Date[1:1], format: "2017-06-30"

## last2 : num [1:2] 17 14

## li : num 17

## li_vec : num [1:7] 16 9 13 5 2 17 14

## lily : 'data.frame': 1 obs. of 3 variables:

## $ type : chr "Poultry"

## $ calories: int 86

## $ sodium : int 358

## linkedin : List of 7

## $ : num 16

## $ : num 9

## $ : num 13

## $ : num 5

## $ : num 2

## $ : num 17

## $ : num 14

## login : POSIXct[1:5], format: "2023-09-19 10:18:04" "2023-09-24 09:14:18" "2023-09-24 12:21:51" ...

## logout : POSIXct[1:5], format: "2023-09-19 10:56:29" "2023-09-24 09:14:52" "2023-09-24 12:35:48" ...

## logs : spc_tbl_ [100,000 × 3] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## lower : Named num -550

## mar_11 : POSIXct[1:1], format: "2017-03-11 12:00:00"

## mar_12 : POSIXct[1:1], format: "2017-03-12 12:00:00"

## mar_13 : POSIXct[1:1], format: "2017-03-13 12:00:00"

## matrix2_rowname : chr [1:3] "The Phantom Menace" "Attack of the Clones" ...

## max : num 30

## mdl : tibble [1 × 8] (S3: tbl_df/tbl/data.frame)

## medium : chr "LinkedIn"

## meteo : Named chr [1:4] "March 1, 15" "June 1, 15" "September 1, 15" ...

## meteo_dates : Date[1:4], format: "2015-03-01" "2015-06-01" "2015-09-01" "2015-12-01"

## millennium_falcon : tibble [239 × 6] (S3: tbl_df/tbl/data.frame)

## millennium_falcon_colors : tibble [19 × 2] (S3: tbl_df/tbl/data.frame)

## min : num 0

## model : List of 31

## $ coefficients : Named num [1:7] 4.0864 0.374 -0.0199 -0.5807 -0.5782 ...

## $ residuals : Named num [1:346] -0.535 -0.768 -0.944 -0.662 -0.767 ...

## $ fitted.values : Named num [1:346] 4.3 4.3 17.83 2.96 4.29 ...

## $ effects : Named num [1:346] -360 -29.2 20.3 -10 23.4 ...

## $ R : num [1:7, 1:7] -97.4 0 0 0 0 ...

## $ rank : int 7

## $ qr :List of 5

## $ family :List of 13

## $ linear.predictors: Named num [1:346] 1.46 1.46 2.88 1.09 1.46 ...

## $ deviance : num 11529

## $ aic : num 12864

## $ null.deviance : num 18850

## $ iter : int 6

## $ weights : Named num [1:346] 4.3 4.3 17.83 2.96 4.29 ...

## $ prior.weights : Named num [1:346] 1 1 1 1 1 1 1 1 1 1 ...

## $ df.residual : int 339

## $ df.null : int 345

## $ y : Named num [1:346] 2 1 1 1 1 1 80 104 55 350 ...

## $ converged : logi TRUE

## $ boundary : logi FALSE

## $ model :'data.frame': 346 obs. of 4 variables:

## $ na.action : 'omit' Named int [1:64] 1 2 3 4 5 6 7 8 9 10 ...

## $ call : language glm(formula = formula, family = poisson, data = data)

## $ formula :Class 'formula' language n_visits ~ gender + income + travel

## $ terms :Classes 'terms', 'formula' language n_visits ~ gender + income + travel

## $ data :'data.frame': 410 obs. of 4 variables:

## $ offset : NULL

## $ control :List of 3

## $ method : chr "glm.fit"

## $ contrasts :List of 3

## $ xlevels :List of 3

## moment_step : POSIXct[1:1], format: "1969-07-20 02:56:15"

## mon_2pm : POSIXct[1:1], format: "2018-08-27 14:00:00"

## monarchs : tibble [131 × 7] (S3: tbl_df/tbl/data.frame)

## month_seq : Formal class 'Period' [package "lubridate"] with 6 slots

## movie_actors : chr [1:6] "Leonardo DiCaprio" "Matt Damon" "Jack Nicholson" ...

## movie_title : chr "The Departed"

## mtcars : 'data.frame': 32 obs. of 16 variables:

## $ mpg : num 21 21 22.8 21.4 18.7 18.1 14.3 24.4 22.8 19.2 ...

## $ cyl : num 6 6 4 6 8 6 8 4 4 6 ...

## $ disp : num 160 160 108 258 360 ...

## $ hp : num 110 110 93 110 175 105 245 62 95 123 ...

## $ drat : num 3.9 3.9 3.85 3.08 3.15 2.76 3.21 3.69 3.92 3.92 ...

## $ wt : num 2.62 2.88 2.32 3.21 3.44 ...

## $ qsec : num 16.5 17 18.6 19.4 17 ...

## $ vs : num 0 0 1 1 0 1 0 1 1 1 ...

## $ am : num 1 1 1 0 0 0 0 0 0 0 ...

## $ gear : num 4 4 4 3 3 3 3 4 4 4 ...

## $ carb : num 4 4 1 1 2 1 4 2 2 4 ...

## $ fcyl : Factor w/ 3 levels "4","6","8": 2 2 1 2 3 2 3 1 1 2 ...

## $ fam : Factor w/ 2 levels "manual","automatic": 1 1 1 2 2 2 2 2 2 2 ...

## $ car : chr "Mazda RX4" "Mazda RX4 Wag" "Datsun 710" "Hornet 4 Drive" ...

## $ fcyl_fam: Factor w/ 6 levels "4:0","6:0","8:0",..: 5 5 4 2 3 2 3 1 1 2 ...

## $ fvs : Factor w/ 2 levels "V-shaped","straight": 1 1 2 2 1 2 1 2 2 2 ...

## mtcars_by_cyl : tibble [3 × 5] (S3: tbl_df/tbl/data.frame)

## mtcars_by_cyl1 : tibble [3 × 3] (S3: tbl_df/tbl/data.frame)

## mtcars_by_cyl2 : 'data.frame': 3 obs. of 3 variables:

## $ cyl : num 4 6 8

## $ n_wt: int 11 7 14

## $ prop: num 0.344 0.219 0.438

## my_blue : chr "#4ABEFF"

## my_book : Formal class 'workbook' [package "XLConnect"] with 2 slots

## my_character : chr "universe"

## my_df : 'data.frame': 10 obs. of 11 variables:

## $ mpg : num 21 21 22.8 21.4 18.7 18.1 14.3 24.4 22.8 19.2

## $ cyl : num 6 6 4 6 8 6 8 4 4 6

## $ disp: num 160 160 108 258 360 ...

## $ hp : num 110 110 93 110 175 105 245 62 95 123

## $ drat: num 3.9 3.9 3.85 3.08 3.15 2.76 3.21 3.69 3.92 3.92

## $ wt : num 2.62 2.88 2.32 3.21 3.44 ...

## $ qsec: num 16.5 17 18.6 19.4 17 ...

## $ vs : num 0 0 1 1 0 1 0 1 1 1

## $ am : num 1 1 1 0 0 0 0 0 0 0

## $ gear: num 4 4 4 3 3 3 3 4 4 4

## $ carb: num 4 4 1 1 2 1 4 2 2 4

## my_list : List of 3

## $ vec: int [1:10] 1 2 3 4 5 6 7 8 9 10

## $ mat: int [1:3, 1:3] 1 2 3 4 5 6 7 8 9

## $ df :'data.frame': 10 obs. of 11 variables:

## my_logical : logi FALSE

## my_matrix : int [1:3, 1:3] 1 2 3 4 5 6 7 8 9

## my_numeric : num 42

## my_vector : int [1:10] 1 2 3 4 5 6 7 8 9 10

## n_flips : num 10

## n_visits : num [1:410] 0 0 0 0 0 0 0 0 0 0 ...

## name : chr [1:8] "Mercury" "Venus" "Earth" "Mars" "Jupiter" "Saturn" "Uranus" ...

## names : List of 4

## $ : chr "gauss"

## $ : chr "bayes"

## $ : chr "pascal"

## $ : chr "pearson"

## names_ad : chr [1:4] "gauss" "bayes" "pascal" "pearson"

## names_an : chr [1:4] "gauss" "bayes" "pascal" "pearson"

## names_filtered : tibble [414 × 8] (S3: tbl_df/tbl/data.frame)

## names_normalized : tibble [1,924,665 × 8] (S3: tbl_df/tbl/data.frame)

## national_parks : chr [1:22] "Addo Elephant National Park" "Agulhas National Park" ...

## new_hope : num [1:2] 461 314

## new_mean : num 6000

## new_sales : 'data.frame': 36 obs. of 2 variables:

## $ sale_num: int 1 2 3 4 5 6 7 8 9 10 ...

## $ amount : num 3732 5735 5340 1180 5797 ...

## new_sd : num 2600

## non_us_all : Named num [1:6] 314 248 166 552 339 ...

## non_us_some : Named num [1:2] 314 248

## now : POSIXct[1:1], format: "2024-02-12 15:34:01"

## num : num 2500

## num_chars : int [1:6] 8 5 6 5 14 9

## num_views : num 14

## number : num 2500

## nyc : List of 3

## $ pop : num 8405837

## $ boroughs: chr [1:5] "Manhattan" "Bronx" "Brooklyn" "Queens" ...

## $ capital : logi FALSE

## nyc_ind : int 3

## nyc_val : logi FALSE

## obs : tibble [346 × 11] (S3: tbl_df/tbl/data.frame)

## oceania_1952 : tibble [2 × 6] (S3: tbl_df/tbl/data.frame)

## p_head : num 0.8

## p_wt_vs_fcyl_by_fam : List of 9

## $ data :'data.frame': 32 obs. of 13 variables:

## $ layers : list()

## $ scales :Classes 'ScalesList', 'ggproto', 'gg' <ggproto object: Class ScalesList, gg>

## add: function

## clone: function

## find: function

## get_scales: function

## has_scale: function

## input: function

## n: function

## non_position_scales: function

## scales: NULL